www.theguardian.com/technology/2...

We didn’t forget to make sexualised imagery of children illegal.

It is the “Child Trafficking and Pornography Act, 1998”.

Section 1, above, defines the illegal imagery.

Section 5 defines the offence.

The service offered is creating images which meet the definition of “child pornography” as defined in Section 1, “irrespective of how it is produced”.

This is the commercial product the company is offering.

1) flat wrong on the facts

2) flat wrong on the law

3) flat wrong on the impulse to shield a company from the consequences of its own actions in offering the creation of CSAM-as-a-service

This is the sort of morally bankrupt statement that should get its speaker booed off every street.

Media Minister Patrick O'Donovan: "At the end of the day, it's the choice of a person to make these images... technology is moving so fast... even if the law is changed there's no doubt about... the advances are far faster than law is able to respond..."

If you’re planning to protest, here’s how to safeguard your digital security.

This is an extreme form of remote sexual harassment that can now be used to target anyone, regardless of platform, in seconds. Despite news stories focusing mostly on CSAM, it's still unchecked and rampant on Twitter.

I woke up in the new year to discover that some guy on here had saved photos I took on New Year’s Eve and used Grok to remove my clothes. I know this because he showed me.

www.windowscentral.com/microsoft/mi...

"My son thinks ChatGPT is the coolest train loving person in the world. The bar is set so high now I am never going to be able to compete with that.”

@404media.co asked me what I thought about this shit.

www.404media.co/ai-generated...

"He's not a hero."

"He's a scumbag."

"He shouldn't be celebrated."

No, no. I'm not saying that about Charlie Kirk. Charlie Kirk said that about George Floyd.

Just in case anyone's interested in what he thought was fair to say about someone who was killed on camera.

But Gretchen Felker-Martin's first Red Hood issue gets recalled, and the rest of the run pulped, because she

*checks notes*

wasn't sad that Kirk got shot?

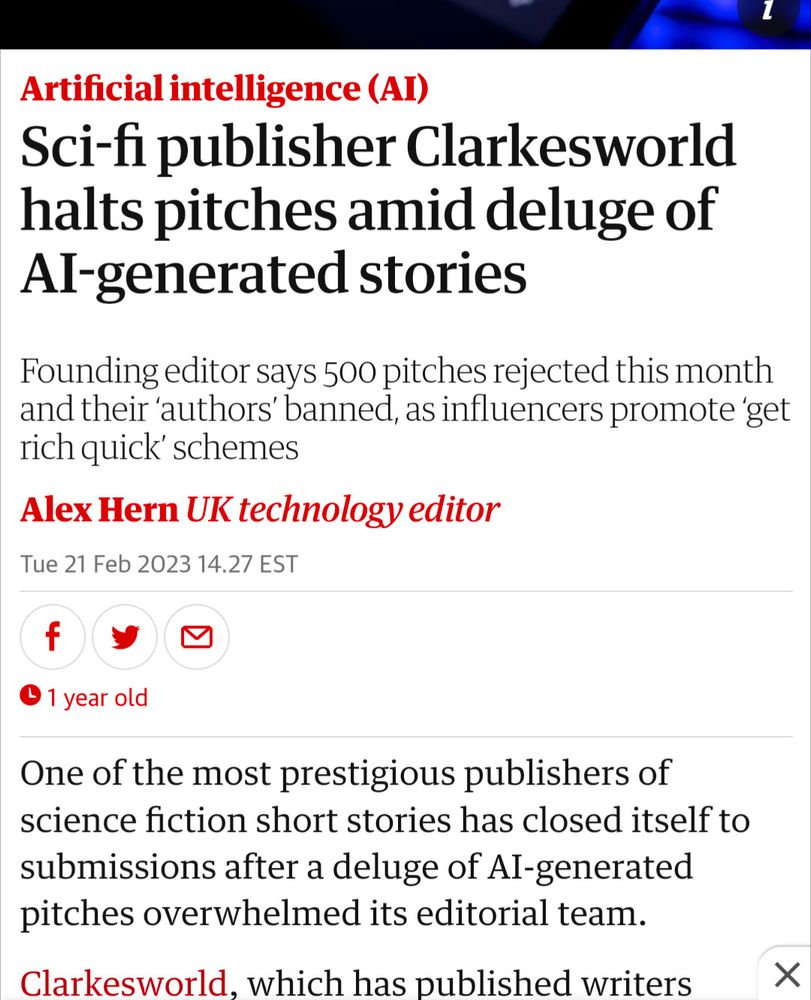

Screenshots are from 2023 and 2024; this has gotten worse across the board.

Was It Something The Democrats Said?

A Response to Third Way’s Political Language Memo

open.substack.com/pub/dcinboxi...