Computational Neuroscience + AI @ IBM Research | 📍NYC | https://ito-takuya.github.io

@natmachintell.nature.com

#ML #AI #MLSky

1/n

Reposted by Stephen M. Walt, Joanna Bryson, Marc Lynch, and 80 more

www.nature.com/immersive/d4...

Reposted by Brian A. Nosek, Joshua S. Weitz, Juan Rocha, Taku Ito, and Vojtěch Brlík

Take a look: the numbers are staggering. By me, @dangaristo.bsky.social, Jeff Tollefson, and @kimay.bsky.social, with help from @noamross.net and @scott-delaney.bsky.social

Reposted by Taku Ito

Reposted by Joanna Bryson, Taku Ito, Gilles Louppe

We found that positional embeddings like RoPE aid training but bottleneck long-sequence generalization. Our solution is simple: treat them as a temporary training scaffold, not a permanent necessity.

arxiv.org/abs/2512.12167

pub.sakana.ai/DroPE

Reposted by Taku Ito

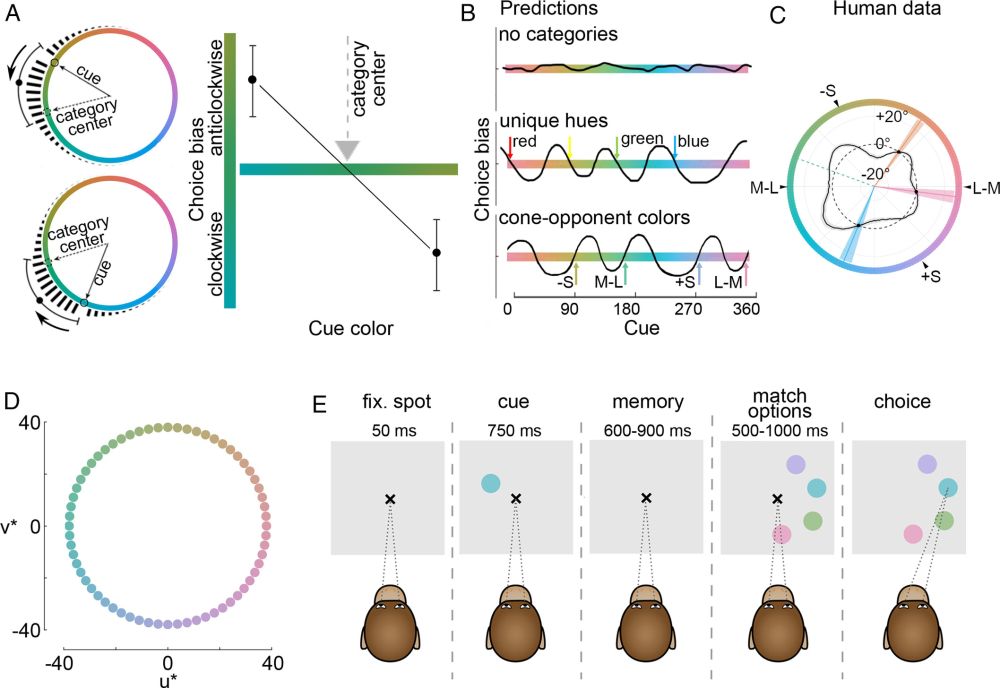

www.nature.com/articles/s42...

Reposted by Taku Ito

Reposted by Larry W. Hunter, Taku Ito

🍰Reinforcement Learning environments for LLMs

🐎Speculative and non-autoregressive generation for LLMs

Interested or curious? DM or email ramon.astudillo@ibm.com

Reposted by Taku Ito

mikexcohen.substack.com/p/why-i-left...

Reposted by Taku Ito

go.nature.com/4nMUgYz

Reposted by Alessandro Gozzi, Taku Ito

Blog: research.ibm.com/blog/ai-algo...

arXiv: arxiv.org/abs/2411.05943

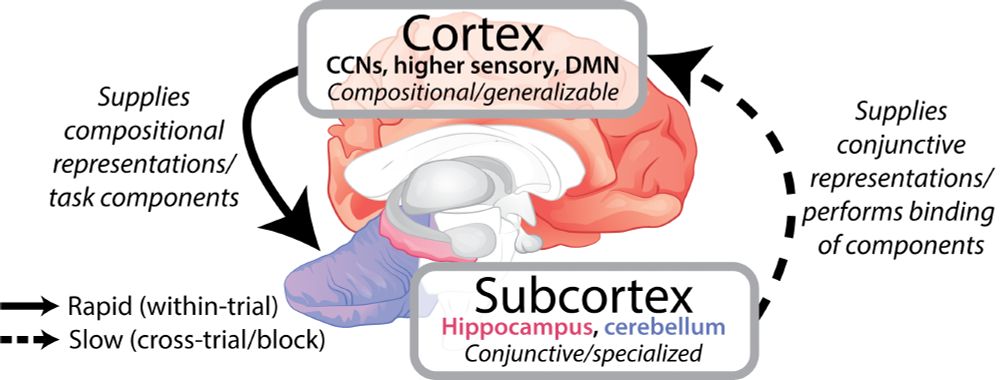

3/n

2/n

Reposted by Taku Ito

Reposted by Todd M. Gureckis, Taku Ito

From childhood on, people can create novel, playful, and creative goals. Models have yet to capture this ability. We propose a new way to represent goals and report a model that can generate human-like goals in a playful setting... 1/N

Reposted by Taku Ito

Check it out: arxiv.org/pdf/2502.03350

Reposted by Sarah Mitchell, Taku Ito

Reposted by Taku Ito

The test-time compute paradigm seems really fruitful.

Reposted by Taku Ito

www.nature.com/articles/s41...

Reposted by Taku Ito

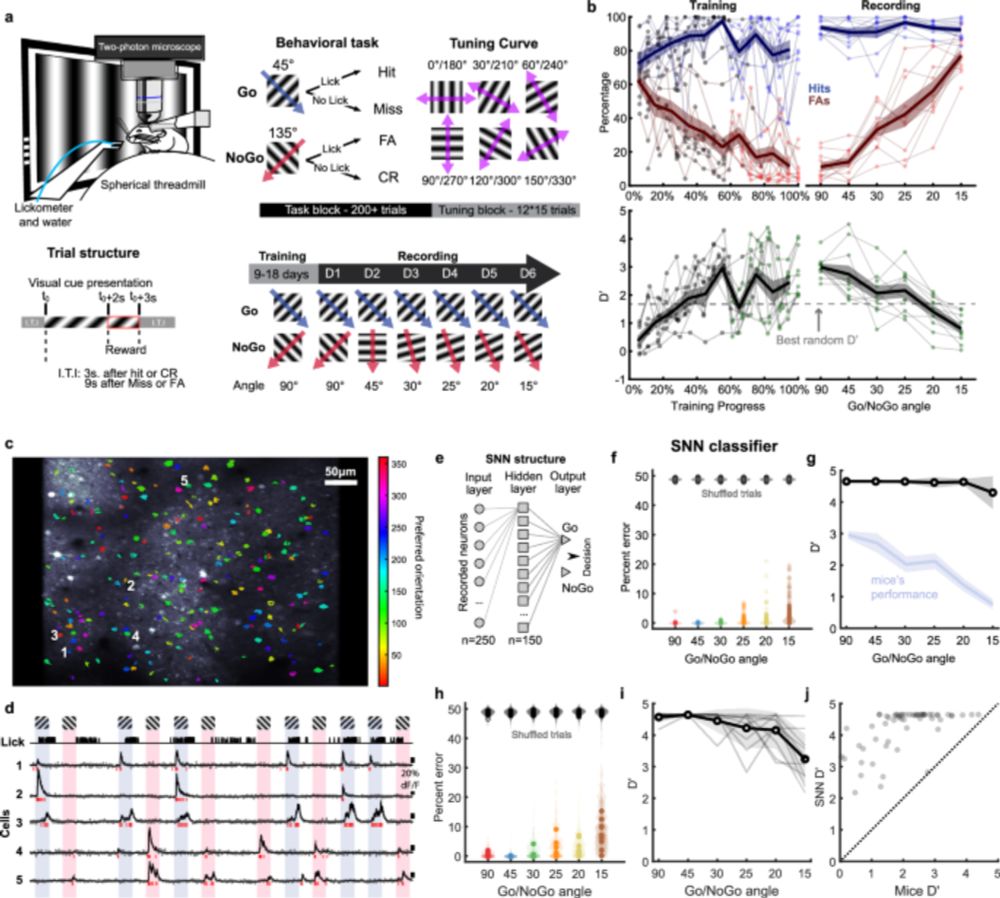

www.biorxiv.org/content/10.1...

🧠👩🏻‍🔬🧪🧵

#neuroskyence

1/

Reposted by Taku Ito

docs.google.com/document/d/1...

If only more folks in AI were gentle and introspective like this...

Reposted by Taku Ito

My Famous Deep Learning Papers list (that I use in teaching) does not include any new ideas from the last year.

papers.baulab.info

Which single new paper would you add?

Reposted by Taku Ito

⏰ When: TODAY, Thu, Dec 12, 4:30 p.m. – 7:30 p.m. PST

📍 Where: East Exhibit Hall A-C, #3705

📄 What: Geometry of Naturalistic Object Representations in Recurrent Neural Network Models of Working Memory

Hope to see you there!

@bashivan.bsky.social @takuito.bsky.social

Reposted by Stanislas Dehaene, Francisco C. Pereira, Taku Ito

"A polar coordinate system represents syntax in large language models",

📄: Paper arxiv.org/abs/2412.05571

🪧: Poster tomorrow: neurips.cc/virtual/2024...

🧵: Thread 👇