Website: adhirajghosh.github.io

Twitter: https://x.com/adhiraj_ghosh98

🗓️Wed, July 30, 11-12:30 CET

📍Hall 4/5

I’m also excited to talk about lifelong and personalised benchmarking, data curation and vision-language in general! Let’s connect!

Just trying something new! I recorded one of my recent talks, sharing what I learned from starting as a small content creator.

youtu.be/0W_7tJtGcMI

We all benefit when there are more content creators!

Stay tuned for the official leaderboard and real-time personalised benchmarking release!

If you’re attending ACL or are generally interested in the future of foundation model benchmarking, happy to talk!

#ACL2025NLP #ACL2025

@aclmeeting.bsky.social

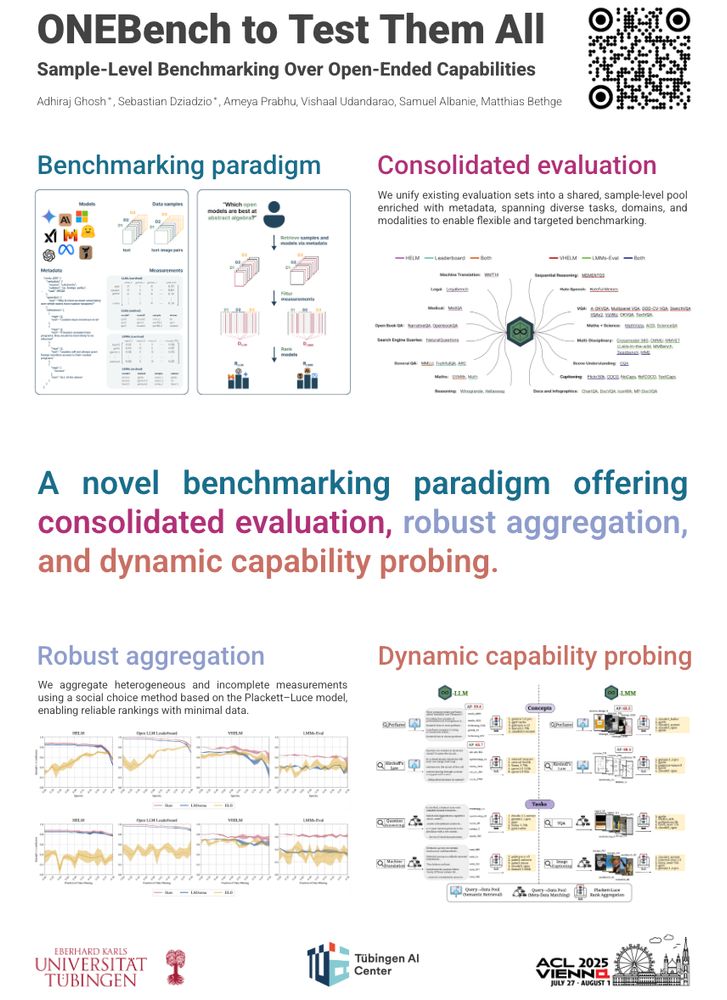

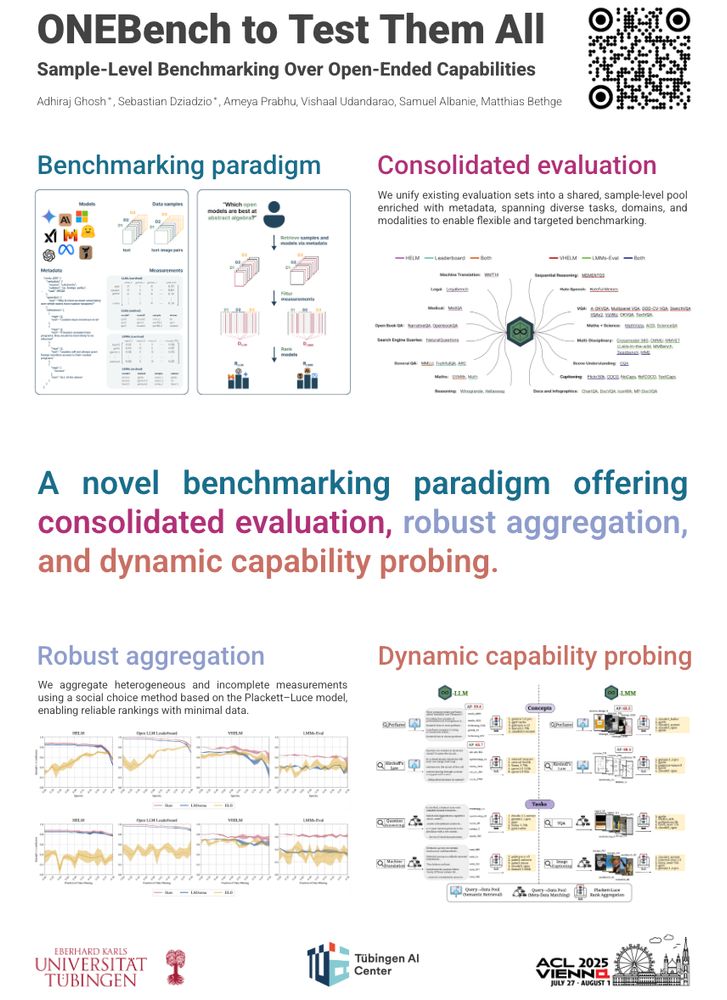

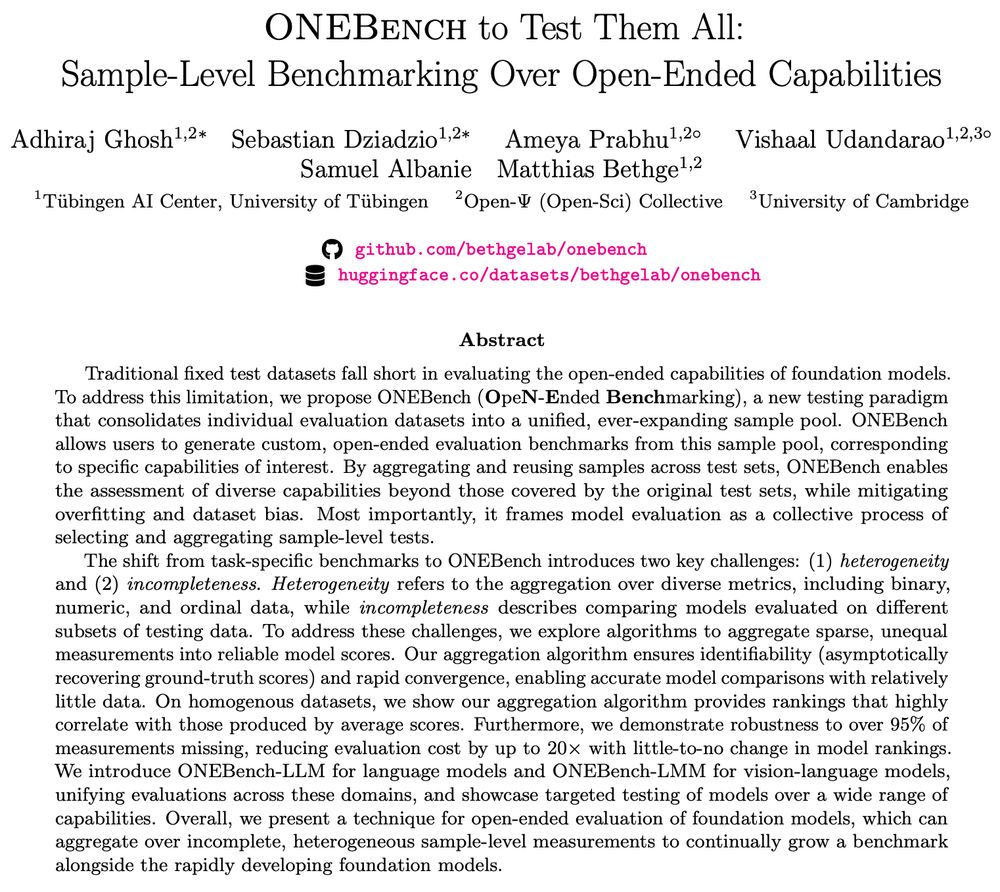

Check out ✨ONEBench✨, where we show how sample-level evaluation is the solution.

🔎 arxiv.org/abs/2412.06745

But what would it actually take to support this in practice at the scale and speed the real world demands?

We explore this question and really push the limits of lifelong knowledge editing in the wild.

👇

As always, it was super fun working on this with @prasannamayil.bsky.social

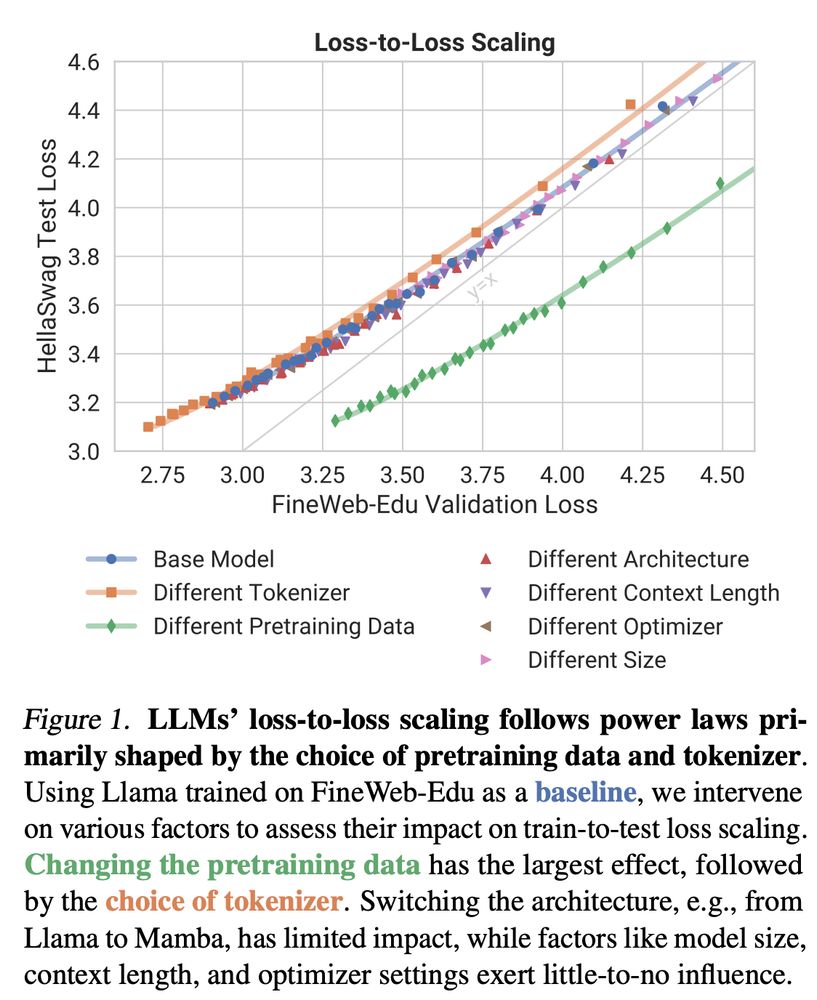

How does LLM training loss translate to downstream performance?

We show that pretraining data and tokenizer shape loss-to-loss scaling, while architecture and other factors play a surprisingly minor role!

brendel-group.github.io/llm-line/ 🧵1/8

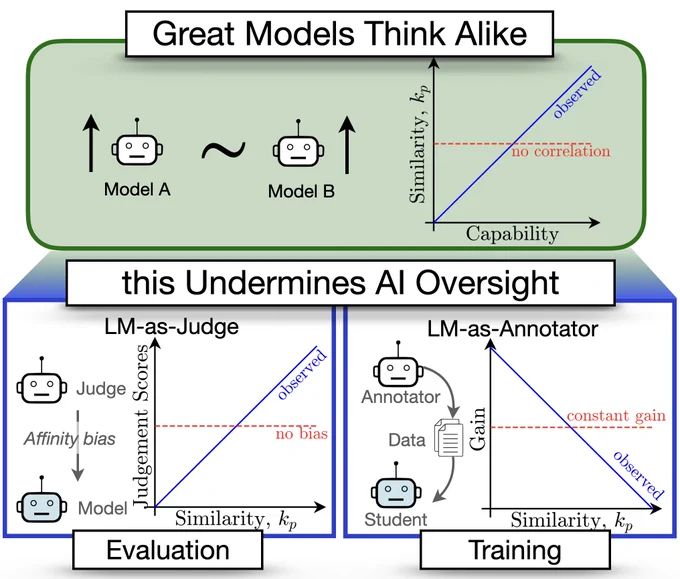

New paper quantifies LM similarity

(1) LLM-as-a-judge favors more similar models🤥

(2) Complementary knowledge benefits Weak-to-Strong Generalization☯️

(3) More capable models have more correlated failures 📈🙀

🧵👇

blog.apoorvkh.com/posts/projec...

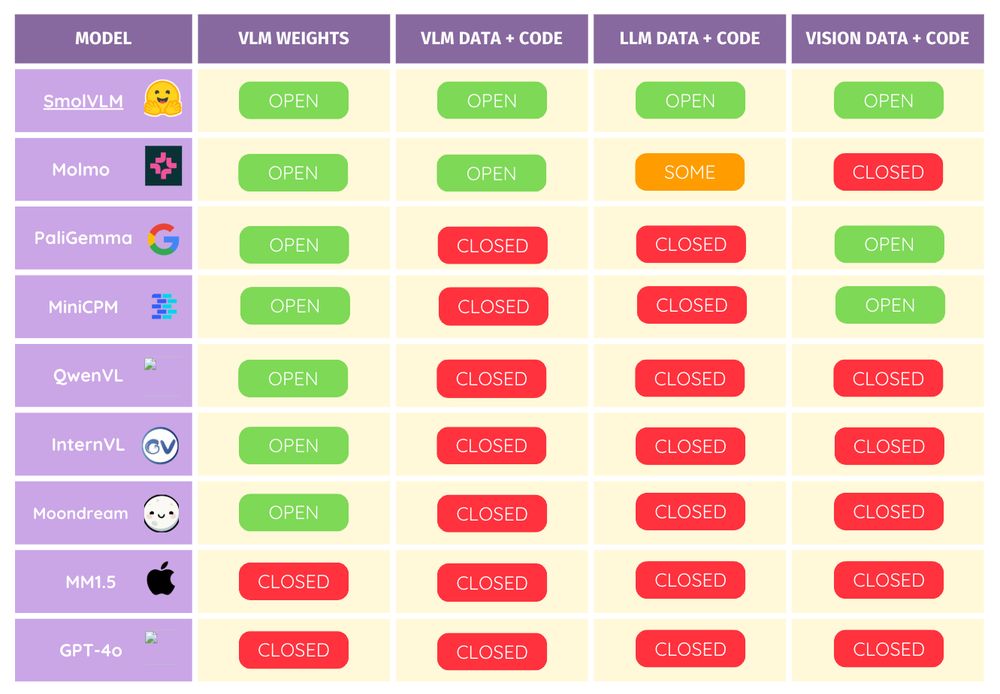

Inspired by our team's effort to open-source DeepSeek's R1, we are releasing the training and evaluation code on top of the weights 🫡

Now you can train any SmolVLM—or create your own custom VLMs!

CECE enables interpretability and achieves significant improvements on hard compositional benchmarks without fine-tuning (e.g., Winoground, EqBen) and on alignment (e.g., DrawBench, EditBench). More info: cece-vlm.github.io

arxiv.org/abs/2412.06712

Model merging assumes all finetuned models are available at once. But what if they need to be created over time?

We study Temporal Model Merging through the TIME framework to find out!

🧵

@adhirajghosh.bsky.social and

@dziadzio.bsky.social!⬇️

Sample-level benchmarks could be the next generation: reusable, recombinable, and able to evaluate lots of capabilities!

Check out ✨ONEBench✨, where we show how sample-level evaluation is the solution.

🔎 arxiv.org/abs/2412.06745

arxiv.org/abs/2411.18674

Smol models are all the rage these days & knowledge distillation (KD) is key for model compression!

We show how data curation can effectively distill to yield SoTA FLOP-efficient {C/Sig}LIPs!!

🧵👇

SmolVLM can be fine-tuned on Google Colab and run on a laptop! Or process millions of documents with a consumer GPU!

go.bsky.app/TENRRBb