Come and explore how representational similarities behave across datasets :)

📅 Thu Jul 17, 11 AM-1:30 PM PDT

📍 East Exhibition Hall A-B #E-2510

Huge thanks to @lorenzlinhardt.bsky.social, Marco Morik, Jonas Dippel, Simon Kornblith, and @lukasmut.bsky.social!

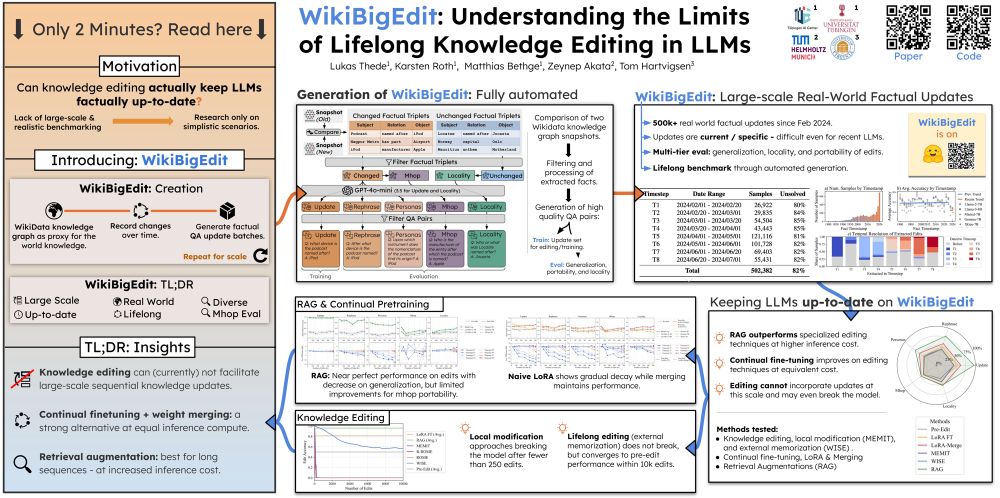

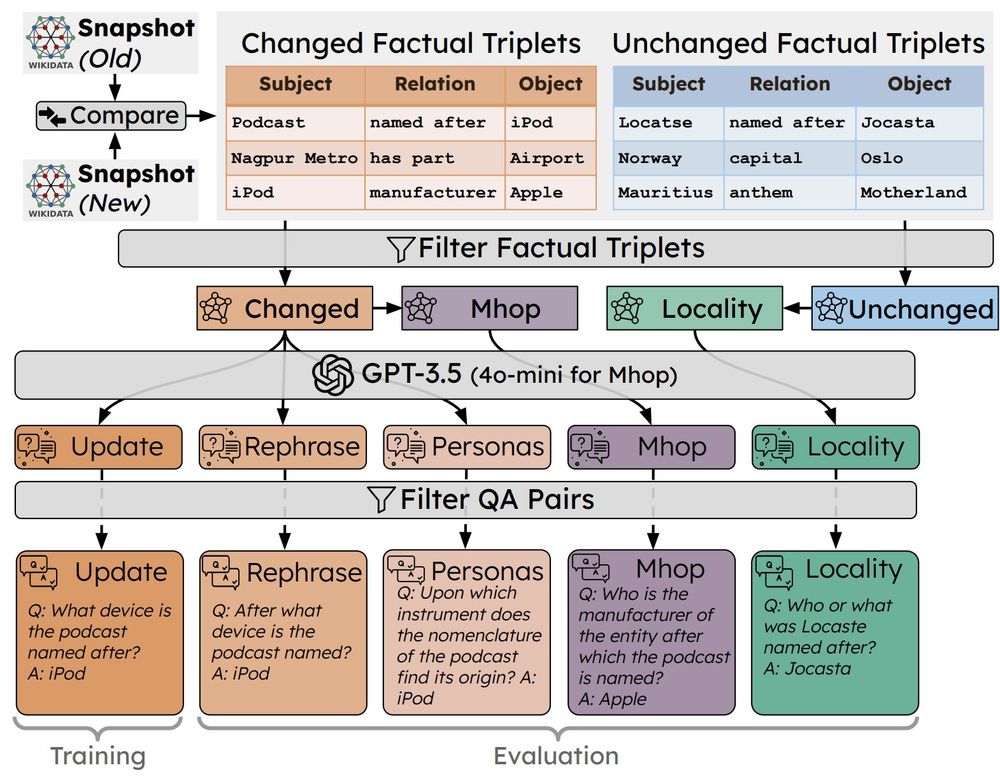

How can LLMs really keep up with the world?

Come by E-2405 on July 15th (4:30–7:00pm) to check out WikiBigEdit – our new benchmark to test lifelong knowledge editing in LLMs at scale.

🔗 Real-world updates

📈 500k+ QA edits

🧠 Editing vs. RAG vs. CL

Join CRC1233 "Robust Vision" (Uni Tübingen) to build benchmarks & evaluation methods for vision models, bridging brain & AI. Work with top faculty & shape vision research.

Apply: tinyurl.com/3jtb4an6

#NeuroAI #Jobs

We’re thrilled to celebrate Jae Myung Kim, who will defend his PhD on 25th June! 🎉

Jae Myung began his PhD at @unituebingen.bsky.social as part of the ELLIS & IMPRS-IS programs, advised by @zeynepakata.bsky.social and collaborating closely with Cordelia Schmid.

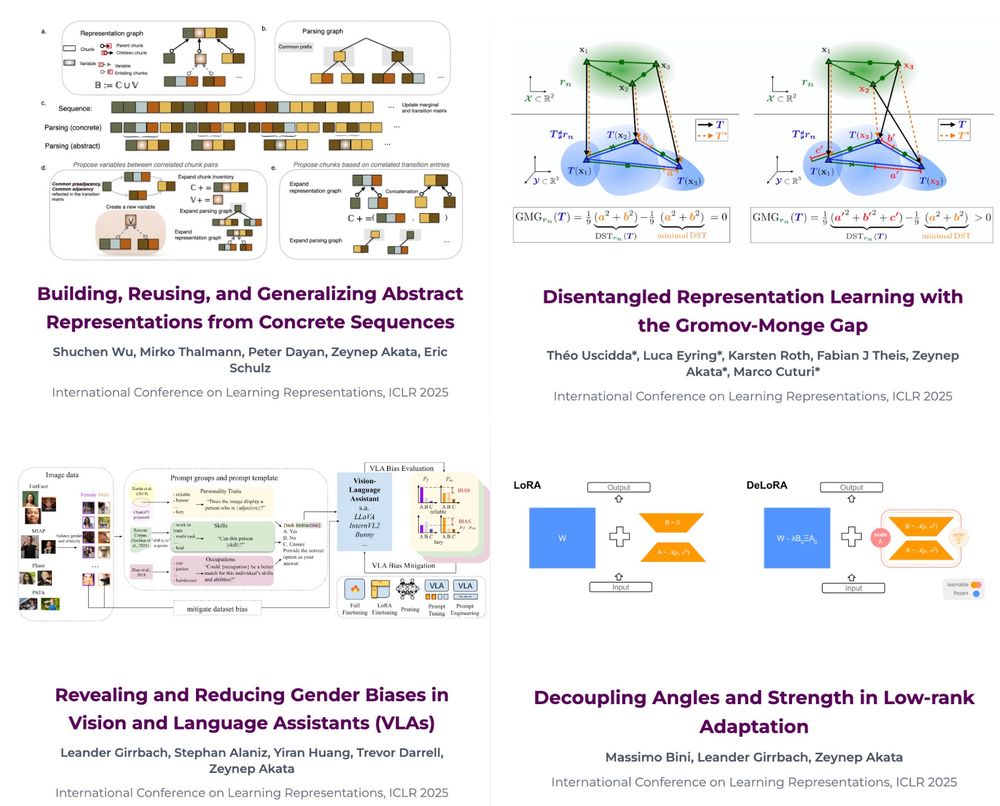

The EML group is presenting 4 exciting papers. Come say hi at our poster sessions! 👇 Let's chat!

More details in the thread — see you there! 🌟

But what would it actually take to support this in practice at the scale and speed the real world demands?

We explore this question and really push the limits of lifelong knowledge editing in the wild.

👇

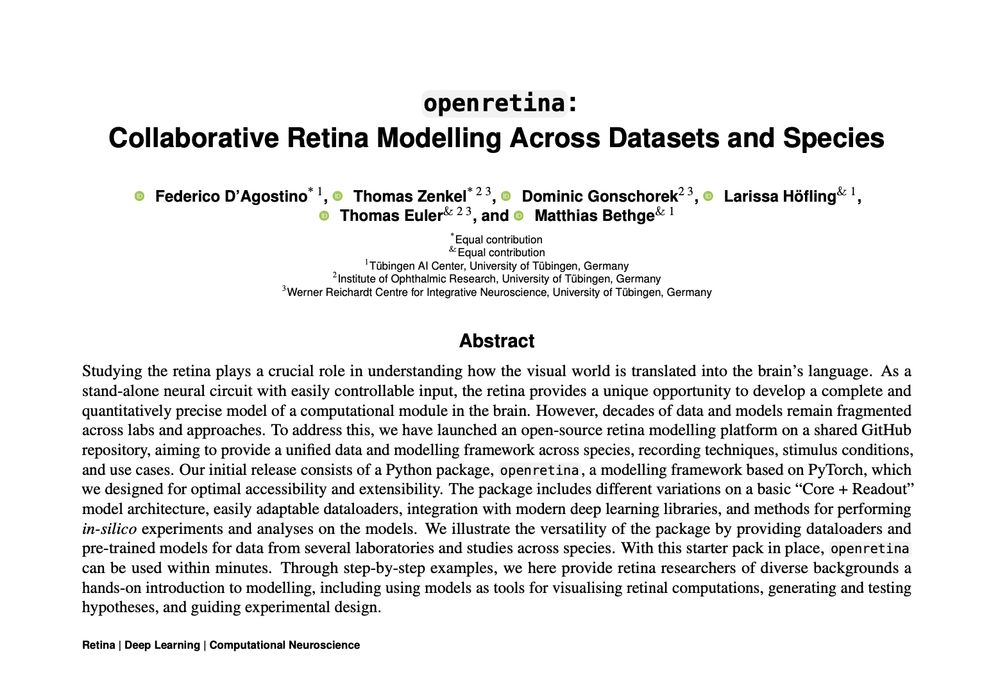

We’ve just launched openretina, an open-source framework for collaborative retina modeling across datasets and species.

A 🧵👇 (1/9)

Since DeepSeek-R1 introduced reasoning-based RL, datasets like Open-R1 & OpenThoughts have emerged for fine-tuning & GRPO. Our deep dive found major flaws: data curation had to eliminate 25% of OpenThoughts.

Here's why 👇🧵

Emergent Visual Abilities and Limits of Foundation Models 📷📷🧠🚀✨

sites.google.com/view/eval-fo...

Submission Deadline: March 12th!

We’re building cutting-edge, open-source AI tutoring models for high-quality, adaptive learning for all pupils with support from the Hector Foundation.

👉 forms.gle/sxvXbJhZSccr...

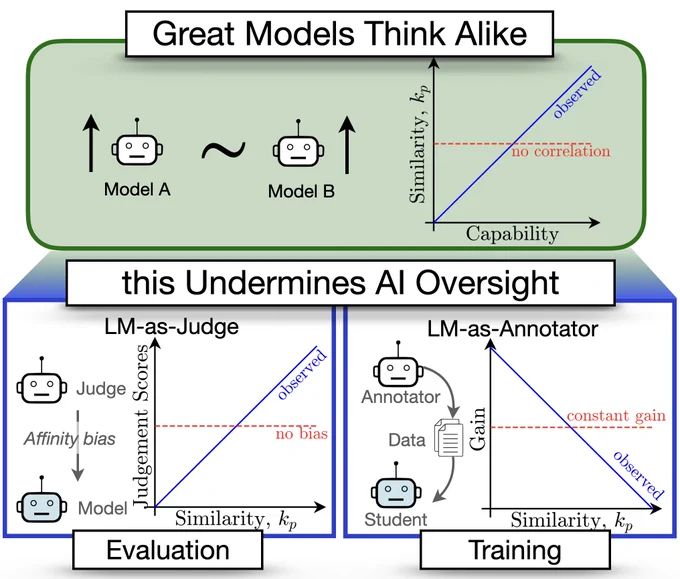

New paper quantifies LM similarity

(1) LLM-as-a-judge favors more similar models 🤥

(2) Complementary knowledge benefits Weak-to-Strong Generalization☯️

(3) More capable models have more correlated failures 📈🙀

🧵👇

arxiv.org/abs/2412.06712

Model merging assumes all finetuned models are available at once. But what if they need to be created over time?

We study Temporal Model Merging through the TIME framework to find out!

🧵

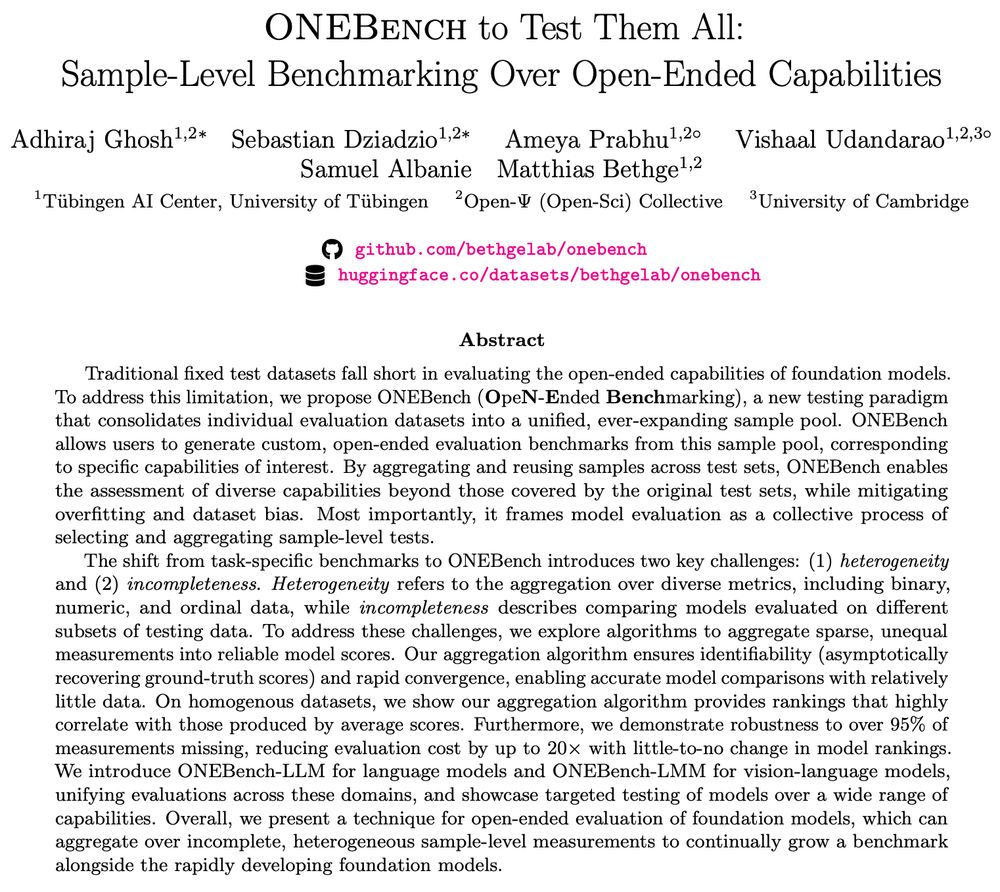

Check out ✨ONEBench✨, where we show how sample-level evaluation is the solution.

🔎 arxiv.org/abs/2412.06745

Turns out you can, and here is how: arxiv.org/abs/2411.15099

Really excited to share this work on multimodal pretraining for my first Bluesky entry!

🧵 A short and hopefully informative thread:

arxiv.org/abs/2411.18674

Smol models are all the rage these days & knowledge distillation (KD) is key for model compression!

We show how data curation can effectively distill to yield SoTA FLOP-efficient {C/Sig}LIPs!!

🧵👇
