🔗 karroth.com

Turns out you can, and here is how: arxiv.org/abs/2411.15099

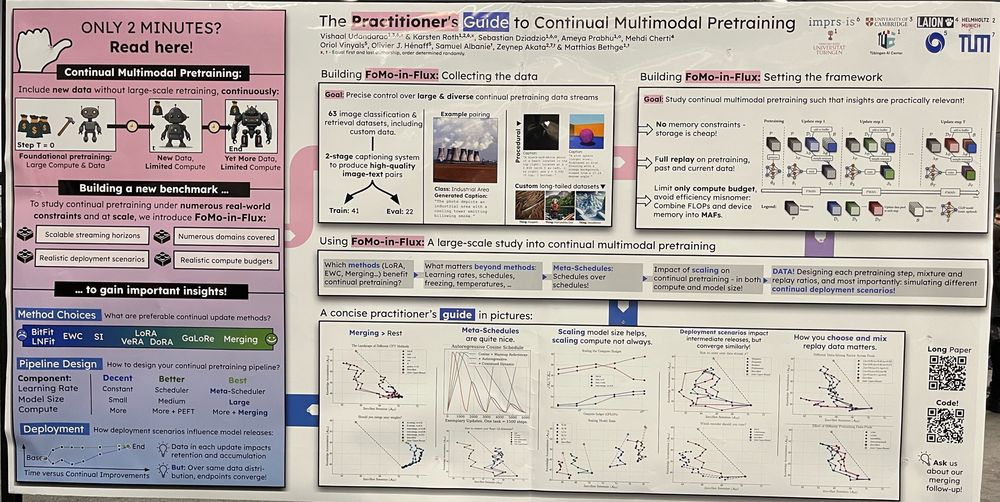

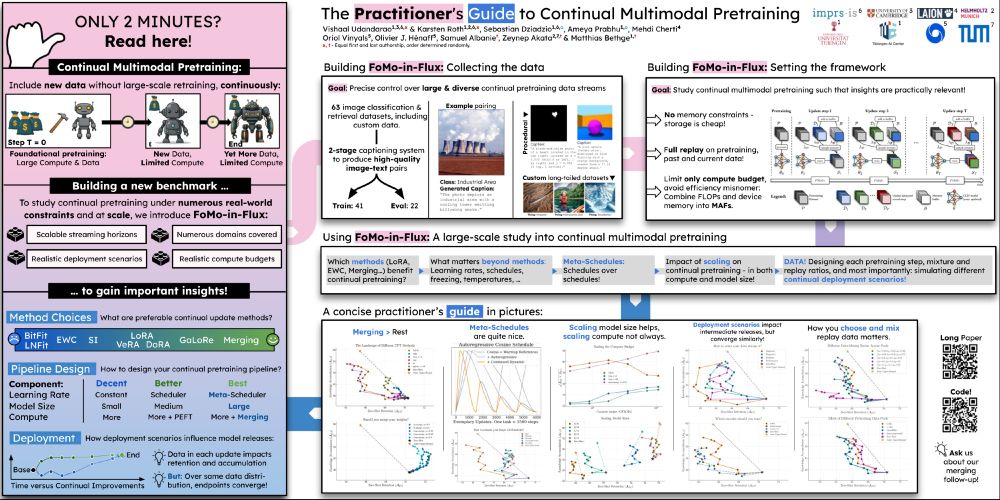

Really excited to share this work on multimodal pretraining for my first Bluesky entry!

🧵 A short and hopefully informative thread:

Biggest thanks to @zeynepakata.bsky.social and Oriol Vinyals for all the guidance, support, and incredibly eventful and defining research years ♥️!

But what would it actually take to support this in practice at the scale and speed the real world demands?

We explore this question and really push the limits of lifelong knowledge editing in the wild.

👇

with Théo Uscidda, Luca Eyring, @confusezius.bsky.social, Fabian J Theis, Marco Cuturi

📄 Decoupling Angles and Strength in Low-rank Adaptation

with Massimo Bini, Leander Girrbach

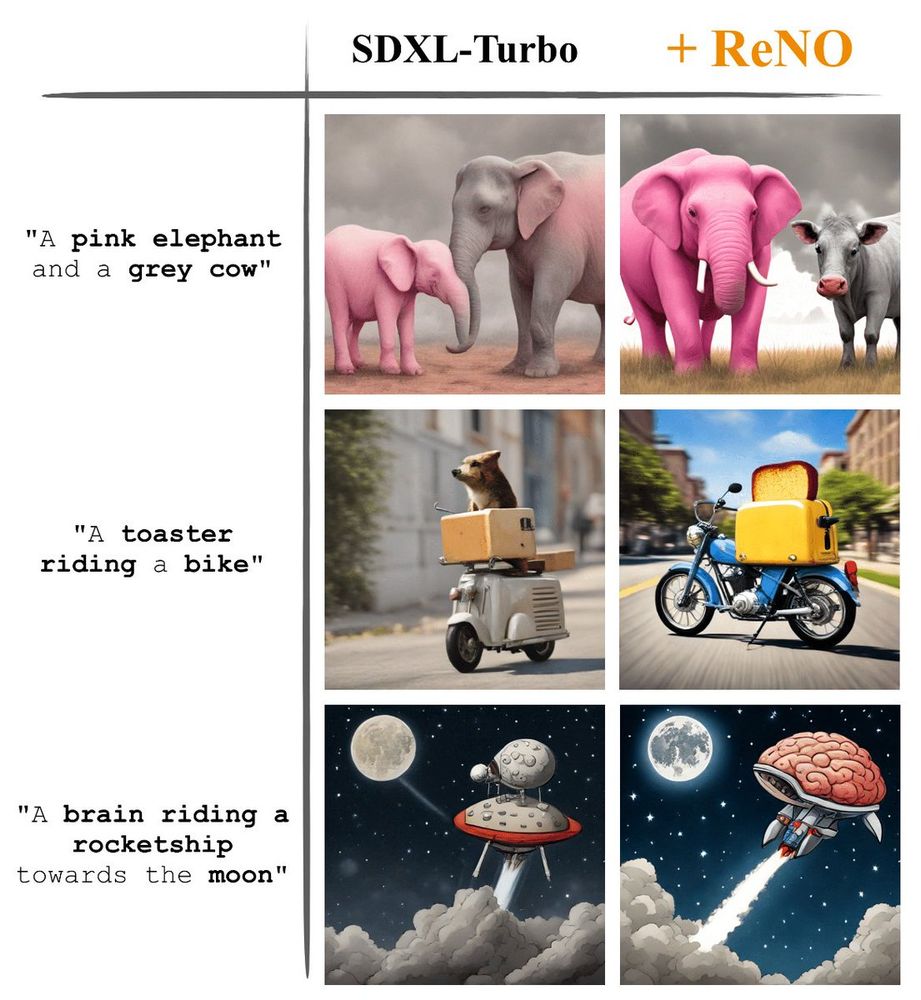

We show that with our ReNO, Reward-based Noise Optimization, one-step models consistently surpass the performance of all current open-source Text-to-Image models within the computational budget of 20-50 sec!

#NeurIPS2024

We comprehensively and systematically investigate this in our new work, check it out!

arxiv.org/abs/2412.06712

Model merging assumes all finetuned models are available at once. But what if they need to be created over time?

We study Temporal Model Merging through the TIME framework to find out!

🧵

So I'm really happy to present our large-scale study at #NeurIPS2024!

Come drop by to talk about all that and more!

🌍 Know someone on the list? bit.ly/3ZJd9Cz

Tag them in a reply with congratulations.

arxiv.org/abs/2411.18674

Smol models are all the rage these days & knowledge distillation (KD) is key for model compression!

We show how data curation can effectively distill to yield SoTA FLOP-efficient {C/Sig}LIPs!!

🧵👇

Context-Aware Multimodal Pretraining

Now on ArXiv

Can you turn vision-language models into strong any-shot models?

Go beyond zero-shot performance in SigLixP (x for context)

Read @confusezius.bsky.social thread below…

And follow Karsten … a rising star!

Captions go above the tables, but otherwise aesthetically very pleasing.

We find simple changes to multimodal pretraining are sufficient to yield outsized gains on a wide range of few-shot tasks.

Congratulations @confusezius.bsky.social on a very successful internship!

Fun project with @confusezius.bsky.social, @zeynepakata.bsky.social, @dimadamen.bsky.social and @olivierhenaff.bsky.social.

#ELLISforEurope #AI #ML #CrossBorderCollab #PhD
