🔗 karroth.com

Biggest thanks to @zeynepakata.bsky.social and Oriol Vinyals for all the guidance, support, and incredibly eventful and defining research years ♥️!

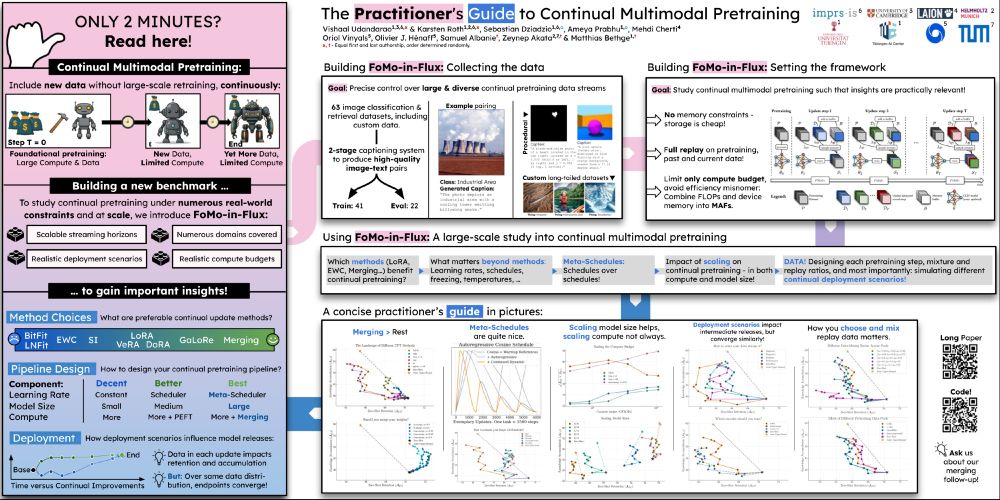

So I'm really happy to present our large-scale study at #NeurIPS2024!

Come drop by to talk about all that and more!

LIxP also maintains the strong zero-shot transfer of CLIP and SigLIP backbones across model sizes (S to L) and data scales (up to 15B), while enabling up to 4x better sample efficiency at test time and up to +16% performance gains!

We teach models what to expect at test time in few-shot scenarios.

Turns out you can, and here is how: arxiv.org/abs/2411.15099

Really excited to share this work on multimodal pretraining as my first Bluesky post!

🧵 A short and hopefully informative thread:
