Benchmarking | LLM Agents | Data-Centric ML | Continual Learning | Unlearning

drimpossible.github.io

ELLIOT is a €25M #HorizonEurope project launching July 2025 to build open, trustworthy Multimodal Generalist Foundation Models.

30 partners, 12 countries, EU values.

🔗 Press release: apigateway.agilitypr.com/distribution...

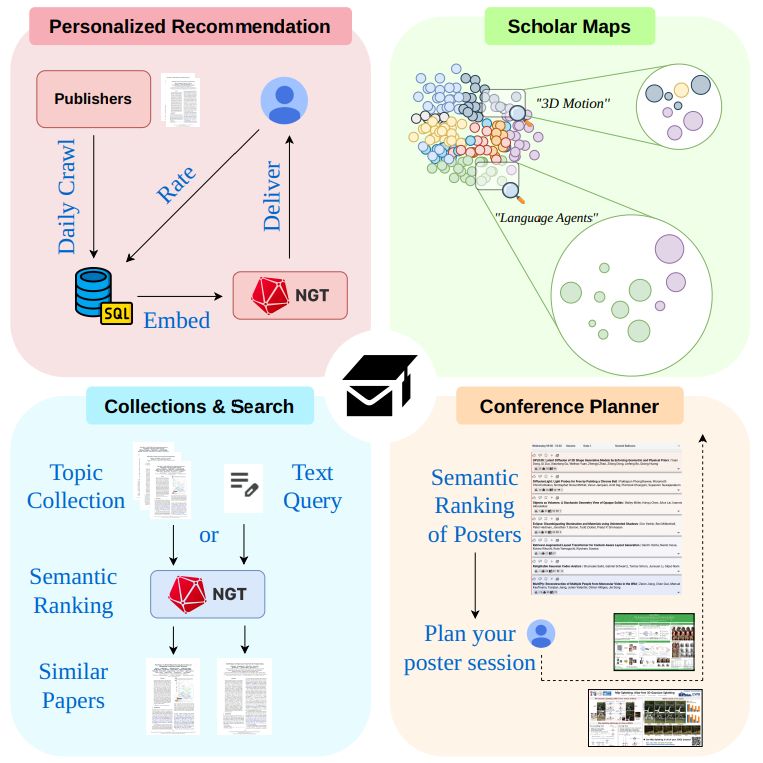

📬 Scholar Inbox is your personal assistant for staying up to date with your literature. It includes: visual summaries, collections, search and a conference planner.

Check out our white paper: arxiv.org/abs/2504.08385

#OpenScience #AI #RecommenderSystems

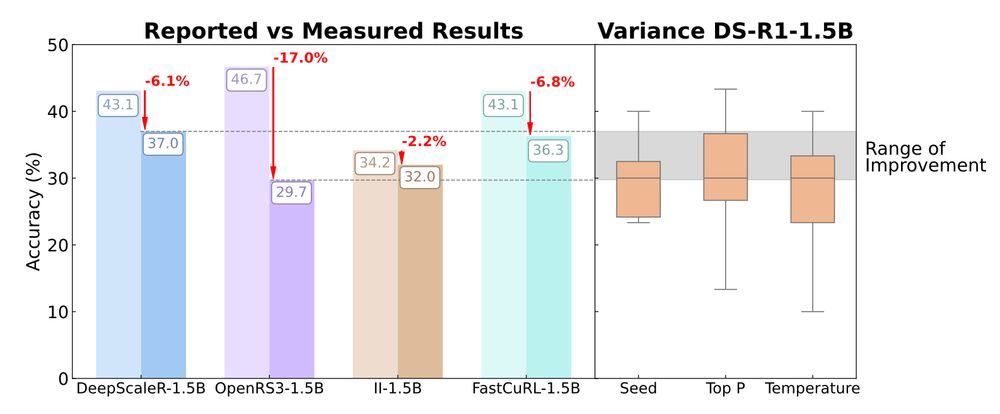

We re-evaluate recent SFT and RL models for mathematical reasoning and find most gains vanish under rigorous, multi-seed, standardized evaluation.

📊 bethgelab.github.io/sober-reason...

📄 arxiv.org/abs/2504.07086
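
To make the multi-seed point concrete, here is a minimal Python sketch comparing two models across seeds. The accuracies are made-up numbers, and the rule "a gain counts only if it exceeds twice the pooled seed-to-seed standard deviation" is an assumed heuristic, not the paper's evaluation protocol.

```python
import statistics


def summarize_runs(accuracies):
    """Return (mean, sample standard deviation) of per-seed accuracies."""
    mean = statistics.mean(accuracies)
    std = statistics.stdev(accuracies) if len(accuracies) > 1 else 0.0
    return mean, std


def gain_exceeds_noise(baseline, candidate, k=2.0):
    """Return (mean gain, flag); flag is True only if the gain exceeds
    k times the pooled seed-to-seed standard deviation.

    Assumed heuristic for illustration, not a formal significance test.
    """
    base_mean, base_std = summarize_runs(baseline)
    cand_mean, cand_std = summarize_runs(candidate)
    gain = cand_mean - base_mean
    pooled_std = ((base_std ** 2 + cand_std ** 2) / 2) ** 0.5
    return gain, gain > k * pooled_std


# Hypothetical per-seed accuracies (%), for illustration only.
baseline = [42.0, 45.5, 40.5, 44.0, 43.0]
candidate = [44.5, 43.0, 46.0, 42.5, 45.0]
gain, exceeds = gain_exceeds_noise(baseline, candidate)
print(f"mean gain = {gain:.1f} points, exceeds noise: {exceeds}")
```

Comparing only the first seed (42.0 vs 44.5) suggests a 2.5-point gain; averaged over five seeds the gain is 1.2 points, well inside the seed-to-seed spread.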

But what would it actually take to support this in practice at the scale and speed the real world demands?

We explore this question and really push the limits of lifelong knowledge editing in the wild.

👇

Details: sites.google.com/view/eval-fo...

#CVPR2025

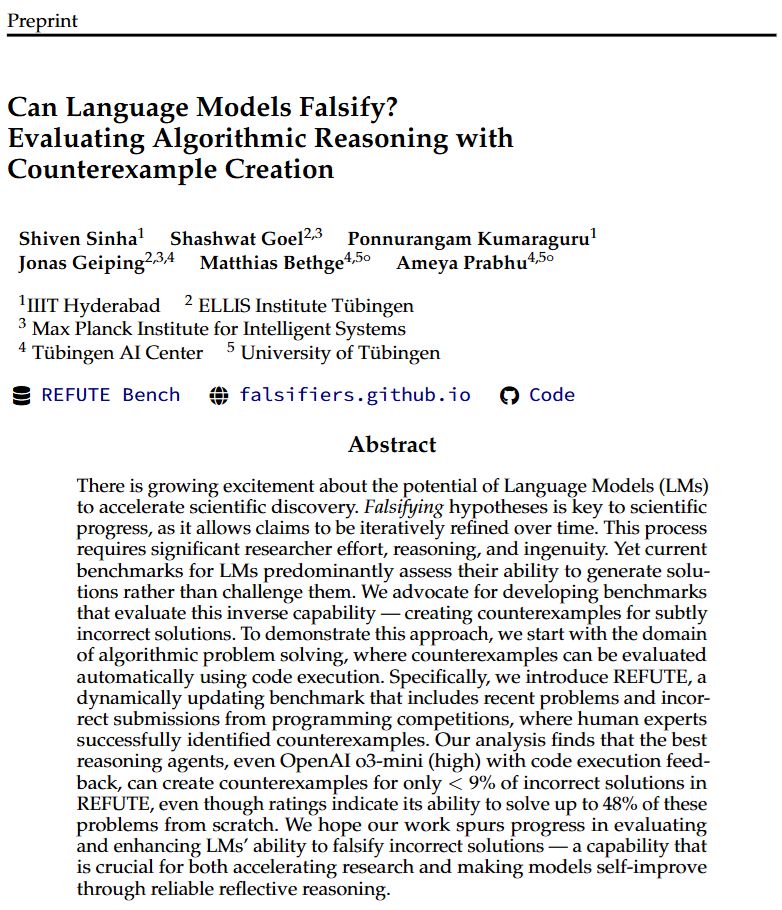

This could form an AI Brandolini's Law: "The capability needed to refute bullshit is far larger than that needed to generate it."

@cvprconference.bsky.social ! 🚀

🔍 Topics: Multi-modal learning, vision-language, audio-visual, and more!

📅 Deadline: March 14, 2025

📝 Submission: cmt3.research.microsoft.com/MMFM2025

🌐 sites.google.com/view/mmfm3rd...

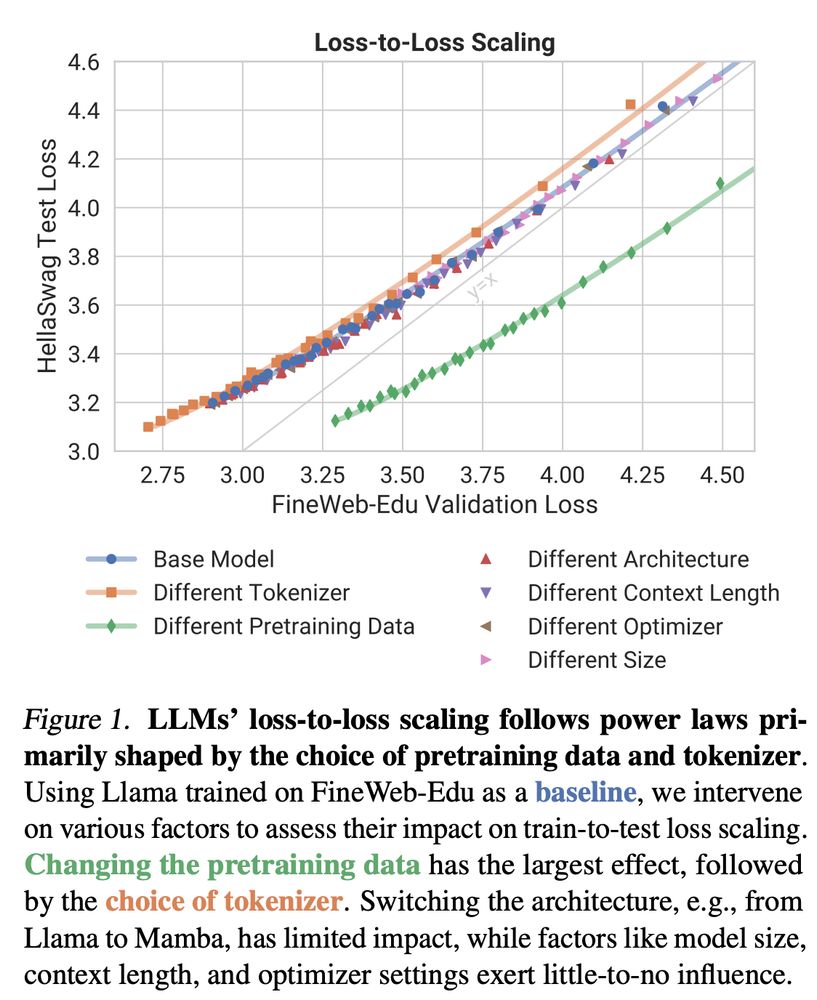

How does LLM training loss translate to downstream performance?

We show that pretraining data and tokenizer shape loss-to-loss scaling, while architecture and other factors play a surprisingly minor role!

brendel-group.github.io/llm-line/ 🧵1/8
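
A minimal sketch of fitting a loss-to-loss curve, assuming a plain power law y = a·x^b between training loss x and downstream loss y. The thread doesn't give the exact functional form; fitted forms often include irreducible-loss offsets, which are omitted here. The data are synthetic.

```python
import math


def fit_power_law(x_losses, y_losses):
    """Fit y = a * x**b by ordinary least squares on (log x, log y).

    Simplifying assumption: no irreducible-loss offsets are modeled.
    """
    lx = [math.log(x) for x in x_losses]
    ly = [math.log(y) for y in y_losses]
    n = len(lx)
    mean_x = sum(lx) / n
    mean_y = sum(ly) / n
    cov = sum((u - mean_x) * (v - mean_y) for u, v in zip(lx, ly))
    var = sum((u - mean_x) ** 2 for u in lx)
    b = cov / var
    a = math.exp(mean_y - b * mean_x)
    return a, b


def predict(a, b, x):
    """Predict downstream loss from training loss with the fitted curve."""
    return a * x ** b


# Synthetic (train loss, downstream loss) pairs generated from y = 0.5 * x**1.5.
train = [2.2, 2.6, 3.0, 3.4]
down = [0.5 * x ** 1.5 for x in train]
a, b = fit_power_law(train, down)
print(round(a, 3), round(b, 3))  # recovers the generating parameters
```

Fitting one such curve per pretraining dataset or tokenizer, and comparing the fitted parameters, is one simple way to see how those choices shift the loss-to-loss relationship.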

25% of OpenThoughts-114k-math was filtered out; issues included proof questions, missing figures, and multiple questions with only one answer.

Check out work by

@ahochlehnert.bsky.social & @hrdkbhatnagar.bsky.social

below 👇

Since DeepSeek-R1 introduced reasoning-based RL, datasets like Open-R1 & OpenThoughts emerged for fine-tuning & GRPO. Our deep dive found major flaws: 25% of OpenThoughts had to be removed during data curation.

Here's why 👇🧵
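
A rough sketch of heuristic filtering for the three issue types named above. The regexes and the `flag_issues` / `filter_problems` helpers are hypothetical illustrations, not the authors' curation pipeline, which these posts don't describe.

```python
import re

# Hypothetical heuristics, loosely mirroring the issue types named above.
PROOF_PATTERN = re.compile(r"\b(prove|show that)\b", re.IGNORECASE)
FIGURE_PATTERN = re.compile(r"\b(figure|diagram|shown below|picture)\b", re.IGNORECASE)
SUBPART_PATTERN = re.compile(r"\(\s*[a-d]\s*\)")  # enumerated parts like "(a)", "(b)"


def flag_issues(problem: str) -> list[str]:
    """Return the heuristic issues detected in one problem statement."""
    issues = []
    if PROOF_PATTERN.search(problem):
        issues.append("proof")  # no single verifiable final answer
    if FIGURE_PATTERN.search(problem):
        issues.append("missing_figure")  # text refers to an image it lacks
    if problem.count("?") > 1 or len(SUBPART_PATTERN.findall(problem)) > 1:
        issues.append("multiple_questions")  # several questions, one answer
    return issues


def filter_problems(problems):
    """Split problems into (kept, dropped) based on flag_issues."""
    kept, dropped = [], []
    for p in problems:
        if flag_issues(p):
            dropped.append(p)
        else:
            kept.append(p)
    return kept, dropped


problems = [
    "Compute 3 + 4 * 5.",
    "Prove that there are infinitely many primes.",
    "In the figure, find the area of triangle ABC.",
    "(a) What is 2^10? (b) What is 3^5?",
]
kept, dropped = filter_problems(problems)
print(len(kept), len(dropped))
```

Keyword rules like these are cheap but brittle; in practice they would likely be paired with manual or model-based review.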

Emergent Visual Abilities and Limits of Foundation Models 📷📷🧠🚀✨

sites.google.com/view/eval-fo...

Submission Deadline: March 12th!

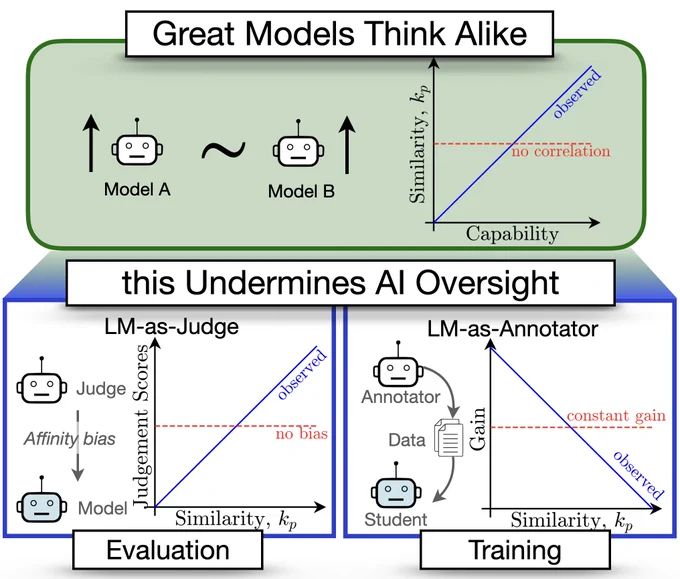

LMs in a similar capability class (not the same model family, though!) behave similarly, and this skews oversight far more than I expected.

Check out the 4-in-1 mega paper below to 👀 how 👇

New paper quantifies LM similarity

(1) LLM-as-a-judge evaluators favor more similar models🤥

(2) Complementary knowledge benefits Weak-to-Strong Generalization☯️

(3) More capable models have more correlated failures 📈🙀

🧵👇
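
Similarity between models only means something after subtracting the agreement expected from accuracy alone: two models that are each 90% accurate must agree on at least 80% of samples. As a simpler, swapped-in stand-in for the paper's own metric, this sketch computes error consistency, a Cohen's-kappa-style chance-adjusted agreement on per-sample correctness.

```python
def error_consistency(correct_a, correct_b):
    """Chance-adjusted agreement between two models' per-sample correctness.

    correct_a, correct_b: equal-length lists of booleans (True = correct).
    Returns kappa = (observed - expected) / (1 - expected), where `expected`
    is the agreement two independent models with these accuracies would reach.
    """
    n = len(correct_a)
    if n == 0 or n != len(correct_b):
        raise ValueError("need two non-empty lists of equal length")
    observed = sum(a == b for a, b in zip(correct_a, correct_b)) / n
    p_a = sum(correct_a) / n
    p_b = sum(correct_b) / n
    expected = p_a * p_b + (1 - p_a) * (1 - p_b)
    if expected == 1:
        # Both models are always right (or always wrong): kappa is undefined.
        return float("nan")
    return (observed - expected) / (1 - expected)


T, F = True, False
model_a = [T, T, F, F, T, T, F, T]
model_b = [T, T, F, F, T, F, T, T]
print(round(error_consistency(model_a, model_b), 3))
```

A kappa near 0 means the two models' mistakes overlap about as much as chance predicts; values near 1 mean they fail on the same samples, which is the "correlated failures" pattern in point (3).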

Poster @NeurIPS, East Hall #1910, come say hi👋

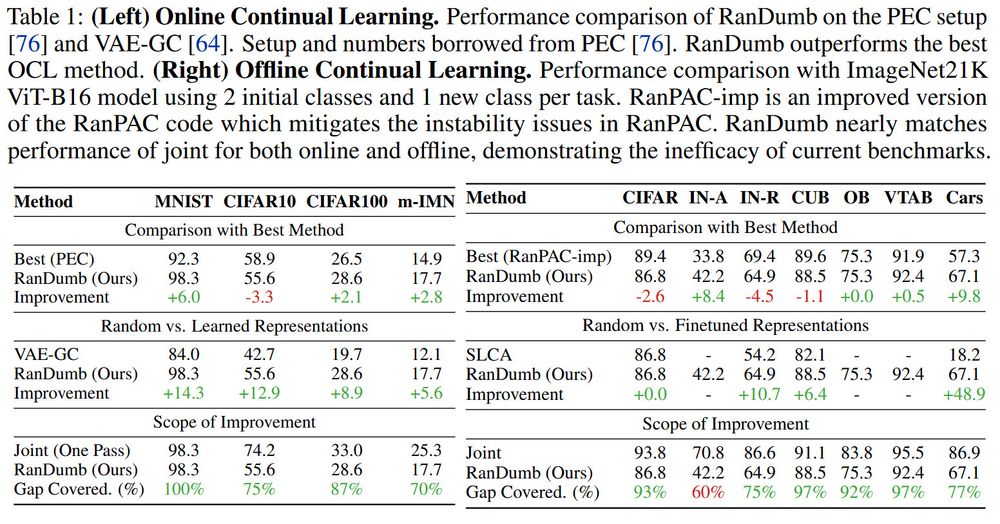

Core claim: Random representations outperform online continual learning (OCL) methods!

How: We replace the deep network with a *random projection* and a linear classifier, yet outperform all OCL methods by huge margins [1/n]
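
A minimal sketch of the "random projection + linear classifier" recipe on an online, class-incremental stream. The thread doesn't say which projection or classifier is used; this assumes random Fourier features and a nearest-class-mean classifier (which is linear in the projected features), so it may differ from the paper's setup.

```python
import math
import random


class RandomFeatureNCM:
    """Frozen random Fourier features followed by a nearest-class-mean classifier.

    The projection is sampled once and never trained; learning only updates
    per-class running sums, so each stream sample is used exactly once.
    """

    def __init__(self, in_dim, out_dim=128, gamma=0.5, seed=0):
        rng = random.Random(seed)
        # Weights ~ N(0, 2*gamma) approximate the RBF kernel exp(-gamma * ||x - x'||^2).
        std = math.sqrt(2.0 * gamma)
        self.W = [[rng.gauss(0.0, std) for _ in range(in_dim)] for _ in range(out_dim)]
        self.b = [rng.uniform(0.0, 2.0 * math.pi) for _ in range(out_dim)]
        self.scale = math.sqrt(2.0 / out_dim)
        self.sums = {}    # label -> summed embedding
        self.counts = {}  # label -> number of samples seen

    def embed(self, x):
        return [
            self.scale * math.cos(sum(wi * xi for wi, xi in zip(w, x)) + b)
            for w, b in zip(self.W, self.b)
        ]

    def update(self, x, label):
        """Consume one labeled sample from the stream."""
        z = self.embed(x)
        if label not in self.sums:
            self.sums[label] = [0.0] * len(z)
            self.counts[label] = 0
        self.sums[label] = [s + v for s, v in zip(self.sums[label], z)]
        self.counts[label] += 1

    def predict(self, x):
        z = self.embed(x)

        def score(label):
            mean = [s / self.counts[label] for s in self.sums[label]]
            # argmin ||z - mean||^2 == argmax (z . mean - ||mean||^2 / 2): linear in z.
            return sum(a * m for a, m in zip(z, mean)) - 0.5 * sum(m * m for m in mean)

        return max(self.sums, key=score)


# Toy class-incremental stream: all class-0 points arrive before any class-1 point.
rng = random.Random(1)
centers = {0: (0.0, 0.0), 1: (3.0, 3.0)}


def sample(label):
    cx, cy = centers[label]
    return [cx + rng.gauss(0.0, 0.3), cy + rng.gauss(0.0, 0.3)]


model = RandomFeatureNCM(in_dim=2)
for label in (0, 1):
    for _ in range(50):
        model.update(sample(label), label)

test = [(sample(label), label) for label in (0, 1) for _ in range(25)]
accuracy = sum(model.predict(x) == y for x, y in test) / len(test)
print(f"accuracy: {accuracy:.2f}")
```

Because nothing upstream of the class means is trained, learning class 1 cannot overwrite what was learned for class 0, which is one intuition for why such a simple baseline can hold up in online settings.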

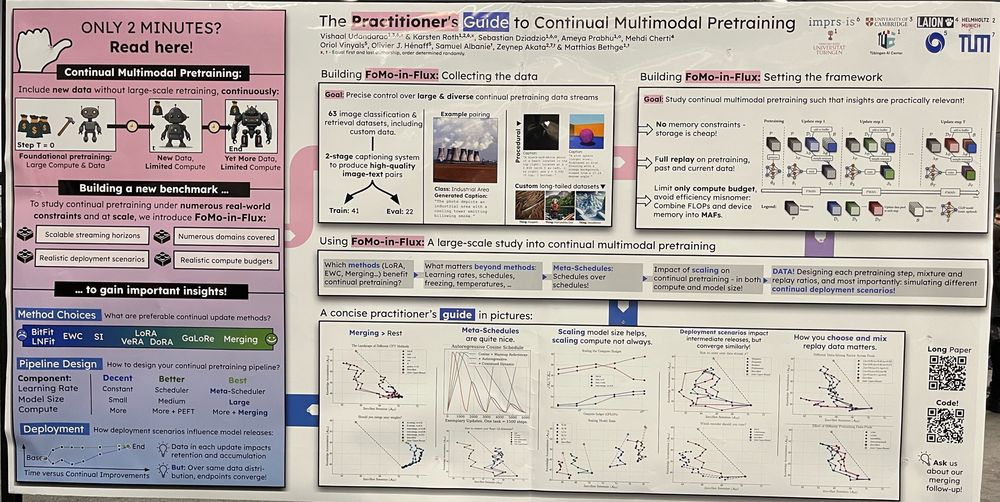

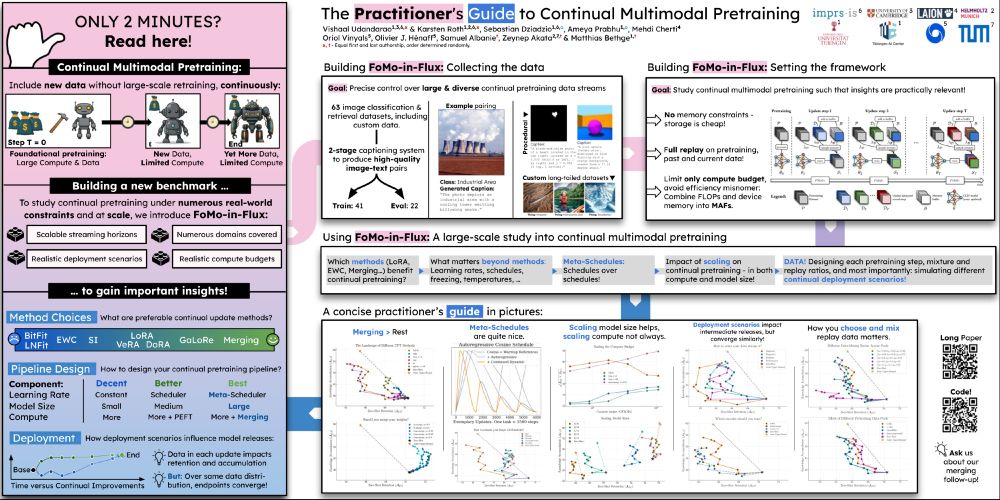

The time axis unlocks scalable mergeability. Merging shows surprising scaling gains across model sizes & compute budgets.

All the gory details ⬇️

arxiv.org/abs/2412.06712

Model merging assumes all finetuned models are available at once. But what if they need to be created over time?

We study Temporal Model Merging through the TIME framework to find out!

🧵
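
Temporal merging can be sketched as folding each new checkpoint into a running average as it arrives. This assumes plain uniform weight averaging over hypothetical checkpoints stored as dicts of float lists; the TIME framework's actual merging and initialization choices aren't spelled out in these posts.

```python
def merge_incrementally(merged, num_merged, new_ckpt):
    """Fold one new checkpoint into a running uniform average of parameters.

    merged: dict name -> list of floats (average of num_merged checkpoints),
            or None if nothing has been merged yet.
    Returns (updated_merged, num_merged + 1).
    """
    if merged is None:
        return {k: list(v) for k, v in new_ckpt.items()}, 1
    n = num_merged + 1
    updated = {
        # Incremental mean: m_n = m_{n-1} + (x_n - m_{n-1}) / n
        k: [m + (x - m) / n for m, x in zip(merged[k], new_ckpt[k])]
        for k in merged
    }
    return updated, n


# Hypothetical checkpoints produced at three successive time steps.
stream = [{"w": [1.0, 2.0]}, {"w": [3.0, 4.0]}, {"w": [5.0, 0.0]}]
merged, n = None, 0
for ckpt in stream:
    merged, n = merge_incrementally(merged, n, ckpt)
print(n, merged)  # 3 {'w': [3.0, 2.0]}
```

The running mean only keeps the current merged model, so past checkpoints don't have to be stored, which matters once models arrive over time rather than all at once.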

@adhirajghosh.bsky.social and

@dziadzio.bsky.social!⬇️

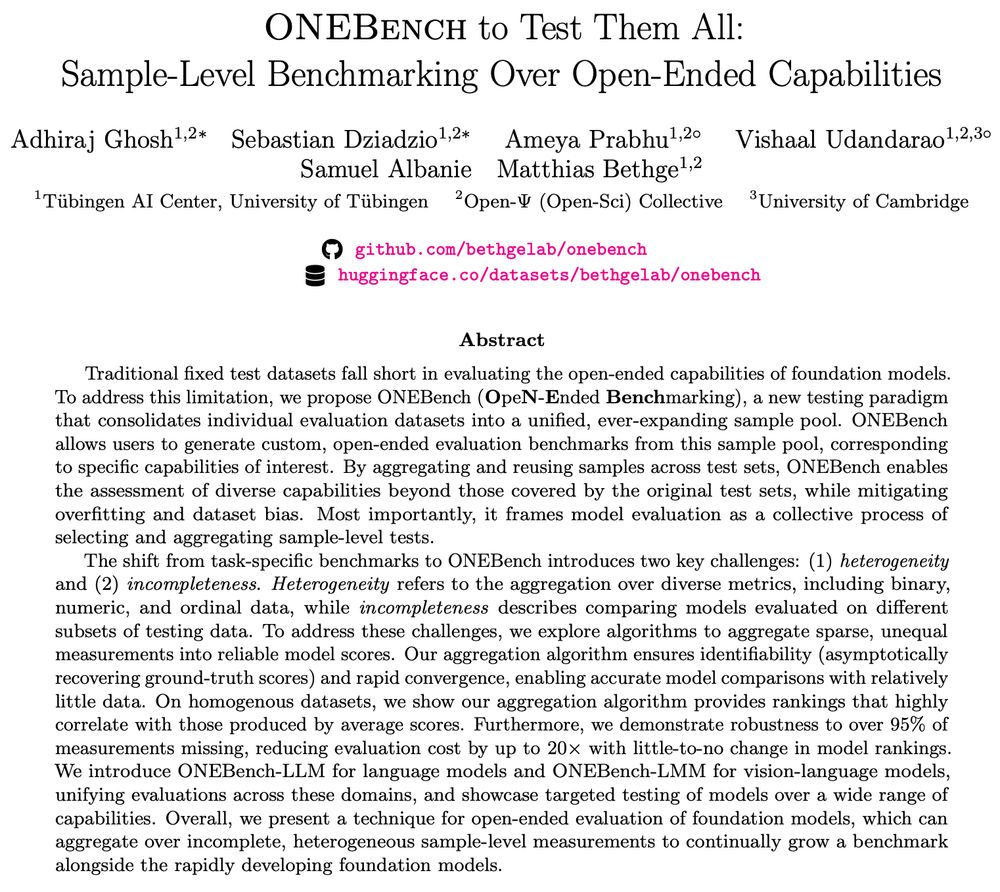

Sample-level benchmarks could be the next generation: reusable, recombinable, and able to evaluate many capabilities!

Check out ✨ONEBench✨, where we show how sample-level evaluation is the solution.

🔎 arxiv.org/abs/2412.06745
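
One way sample-level results from different sources could be pooled into a single ranking, even when each model was evaluated on only some samples: count pairwise wins on shared samples and rank by win rate. This is a simple swapped-in aggregation for illustration; ONEBench's own aggregation method isn't described in these posts and may differ.

```python
from collections import defaultdict
from itertools import combinations


def rank_models(sample_scores):
    """Rank models from sparse sample-level results via pairwise win rates.

    sample_scores: list of dicts, one per sample, mapping model -> score.
    A model only needs scores on the samples it was evaluated on.
    """
    wins = defaultdict(float)
    games = defaultdict(int)
    for scores in sample_scores:
        for a, b in combinations(sorted(scores), 2):
            games[a] += 1
            games[b] += 1
            if scores[a] > scores[b]:
                wins[a] += 1
            elif scores[b] > scores[a]:
                wins[b] += 1
            else:  # tie: split the point
                wins[a] += 0.5
                wins[b] += 0.5
    win_rate = {m: wins[m] / games[m] for m in games}
    # Highest win rate first; ties broken alphabetically for determinism.
    return sorted(win_rate, key=lambda m: (-win_rate[m], m))


# Hypothetical samples pooled from different sources, with mixed score types.
samples = [
    {"A": 1, "B": 0},
    {"A": 1, "C": 1},
    {"B": 1, "C": 0},
    {"A": 0.8, "B": 0.5, "C": 0.2},
]
ranking = rank_models(samples)
print(ranking)  # ['A', 'B', 'C']
```

Because comparisons happen within each sample, binary and continuous scores can be mixed and new samples can be appended without re-running every model on every sample.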

So I'm really happy to present our large-scale study at #NeurIPS2024!

Come drop by to talk about all that and more!

@iclr-conf.bsky.social

We received 120 wonderful proposals, with 40 selected as workshops.
