Benchmarking | LLM Agents | Data-Centric ML | Continual Learning | Unlearning

drimpossible.github.io

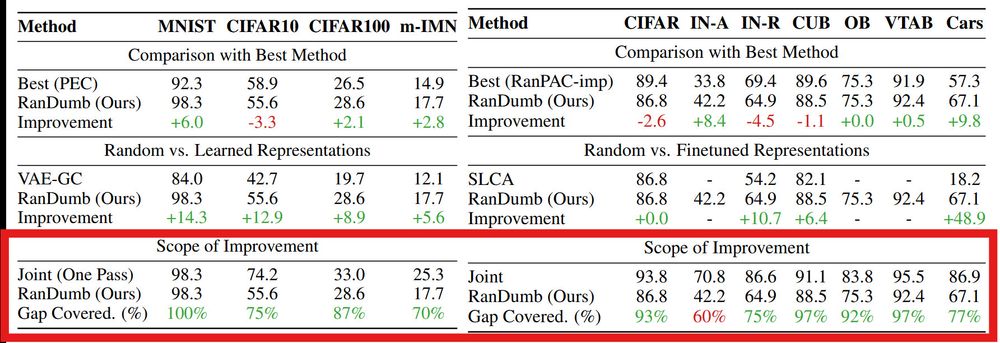

RanDumb recovers 70-90% of the joint performance.

Forgetting isn't the main issue—the benchmarks are too toy!

Key Point: Current online continual learning (OCL) benchmarks are too constrained for any effective learning of online continual representations!

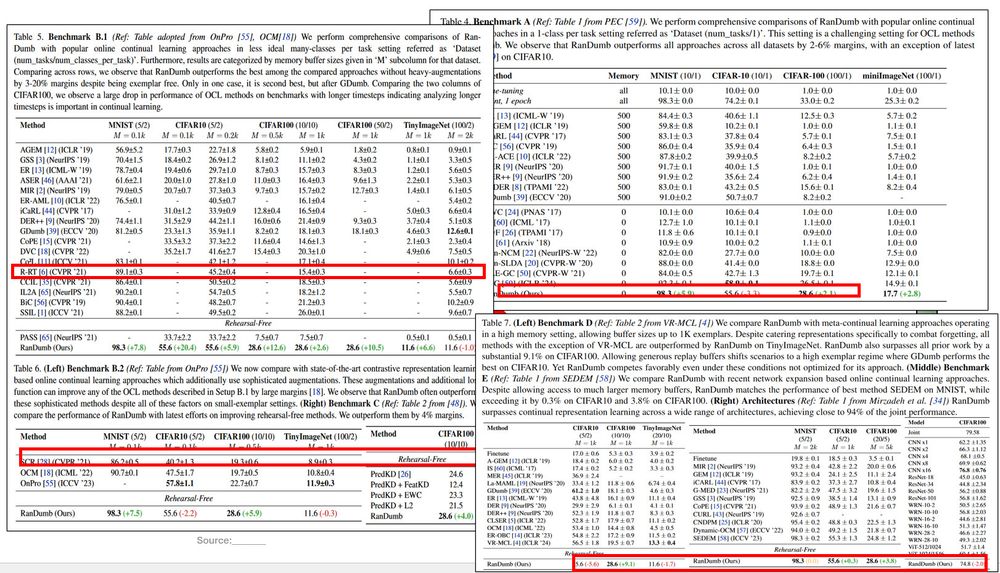

Why might it not work? Updates are limited and networks may not converge.

We find: OCL representations are severely undertrained!

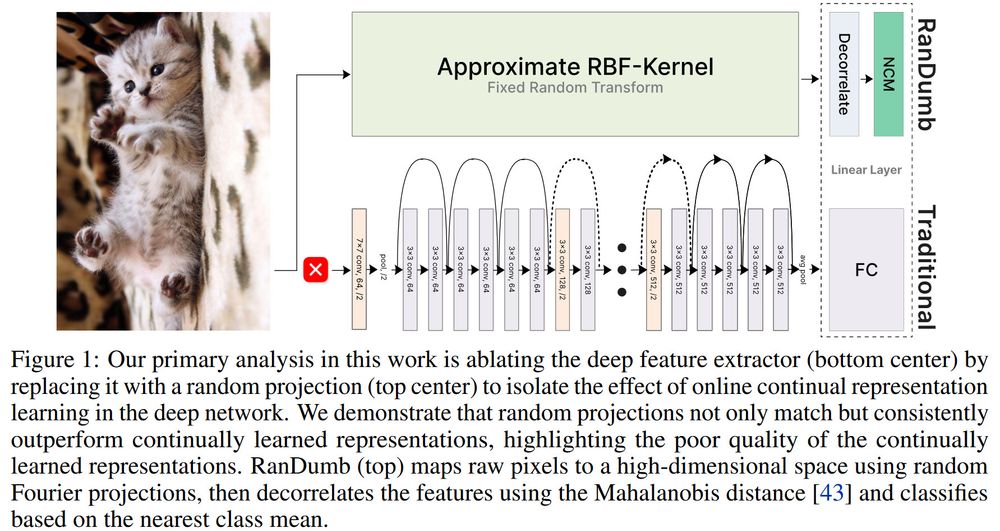

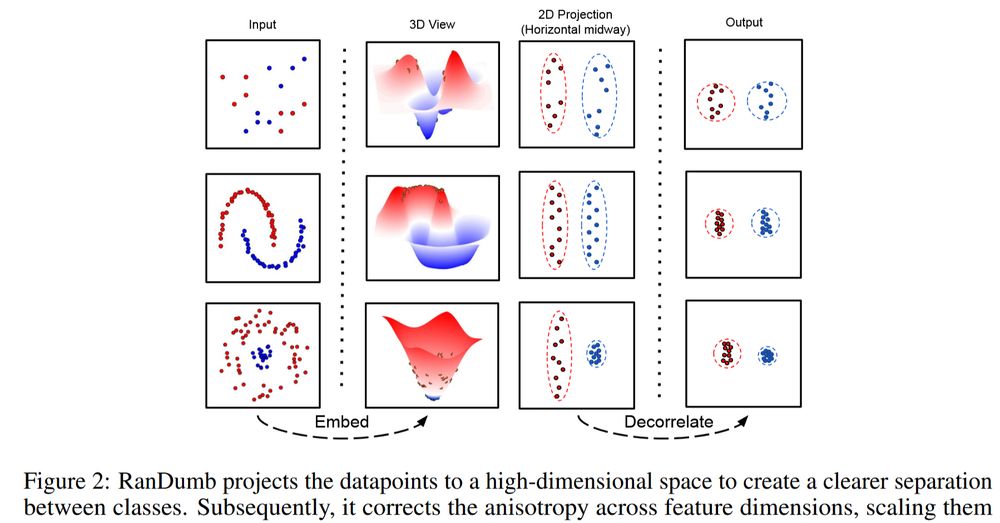

Looks familiar? This is streaming (approx.) Kernel LDA!!
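
To make the "streaming (approx.) Kernel LDA" reading concrete, here is a minimal sketch, not the RanDumb implementation itself. It assumes a fixed random Fourier feature map approximating an RBF kernel, running class means, and a shared within-class covariance with shrinkage. The class name `StreamingRFFLDA` and the parameters `n_features`, `gamma`, and `shrinkage` are hypothetical, chosen only for illustration.

```python
import numpy as np


class StreamingRFFLDA:
    """Illustrative streaming LDA on top of fixed random Fourier features."""

    def __init__(self, in_dim, n_classes, n_features=256, gamma=1.0,
                 shrinkage=1e-2, seed=0):
        rng = np.random.default_rng(seed)
        # Random projection approximating the RBF kernel exp(-gamma * ||x - y||^2).
        # It is sampled once and never trained.
        self.W = rng.normal(scale=np.sqrt(2.0 * gamma), size=(in_dim, n_features))
        self.b = rng.uniform(0.0, 2.0 * np.pi, size=n_features)
        self.scale = np.sqrt(2.0 / n_features)
        # Sufficient statistics, each updated once per sample.
        self.class_sum = np.zeros((n_classes, n_features))
        self.class_count = np.zeros(n_classes)
        self.gram = np.zeros((n_features, n_features))  # running sum of phi phi^T
        self.n = 0
        self.shrinkage = shrinkage

    def embed(self, x):
        return self.scale * np.cos(x @ self.W + self.b)

    def update(self, x, y):
        """Consume one (sample, label) pair; no sample is stored."""
        phi = self.embed(x)
        self.class_sum[y] += phi
        self.class_count[y] += 1
        self.gram += np.outer(phi, phi)
        self.n += 1

    def predict(self, X):
        if self.n == 0:
            raise ValueError("update() must be called before predict()")
        phi = self.embed(np.atleast_2d(X))
        seen = self.class_count > 0
        counts = self.class_count[seen]
        means = self.class_sum[seen] / counts[:, None]
        # Within-class scatter: sum phi phi^T - sum_c n_c mu_c mu_c^T.
        within = self.gram - (means * counts[:, None]).T @ means
        cov = within / self.n + self.shrinkage * np.eye(within.shape[0])
        # LDA discriminant with equal priors:
        # phi^T S^-1 mu_c - 0.5 * mu_c^T S^-1 mu_c.
        A = np.linalg.solve(cov, means.T)
        scores = phi @ A - 0.5 * np.sum(means.T * A, axis=0)
        return np.flatnonzero(seen)[np.argmax(scores, axis=1)]
```

Each sample is seen once and touches only the running statistics, so there is no replay buffer and no gradient step. In use, one would call `update(x, y)` for every item in the stream and `predict(X)` whenever an evaluation is needed.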