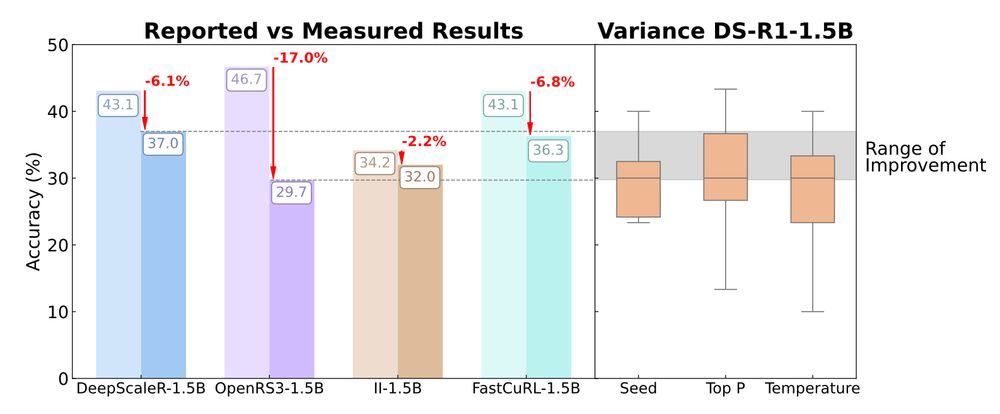

We re-evaluate recent SFT and RL models for mathematical reasoning and find most gains vanish under rigorous, multi-seed, standardized evaluation.

📊 bethgelab.github.io/sober-reason...

📄 arxiv.org/abs/2504.07086

📅 Today

🕔 11:00 AM – 1:00 PM

📍 Room 710 - Poster #31

We find that many “reasoning” gains fall within variance and show how to make evaluation reproducible again.

📘 bethgelab.github.io/sober-reasoning

📄 Paper: www.scholar-inbox.com/papers/He202...

arxiv.org/pdf/2508.13148

💻 Code: github.com/autonomousvi...

🌐 Project Page: cli212.github.io/MDPO/

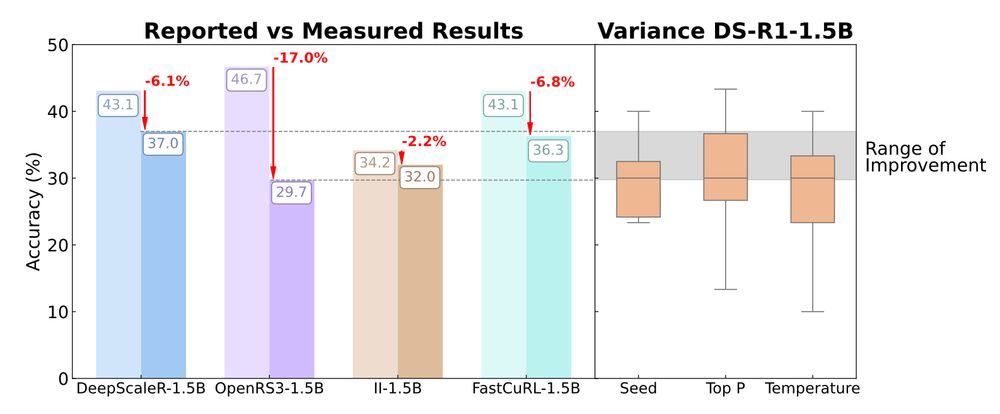

How does LLM training loss translate to downstream performance?

We show that pretraining data and tokenizer shape loss-to-loss scaling, while architecture and other factors play a surprisingly minor role!

brendel-group.github.io/llm-line/ 🧵1/8

Since DeepSeek-R1 introduced reasoning-based RL, datasets like Open-R1 & OpenThoughts have emerged for fine-tuning & GRPO. Our deep dive found major flaws: 25% of OpenThoughts had to be removed during data curation.

Here's why 👇🧵

SWE-bench MM is a new set of JavaScript issues that have a visual component (‘map isn’t rendering correctly’, ‘button text isn’t appearing’).

www.swebench.com/sb-cli/

CiteMe is a challenging benchmark for LM-based agents to find paper citations, moving beyond simple multiple-choice Q&A to real-world use cases.

Come by and say hi :)

citeme.ai

go.bsky.app/NFbVzrA
