nanoVLM: The simplest way to train your own Vision-Language Model in pure PyTorch, explained step by step!

Easy to read, even easier to use. Train your first VLM today!

All running locally with no installs. Just open the website.

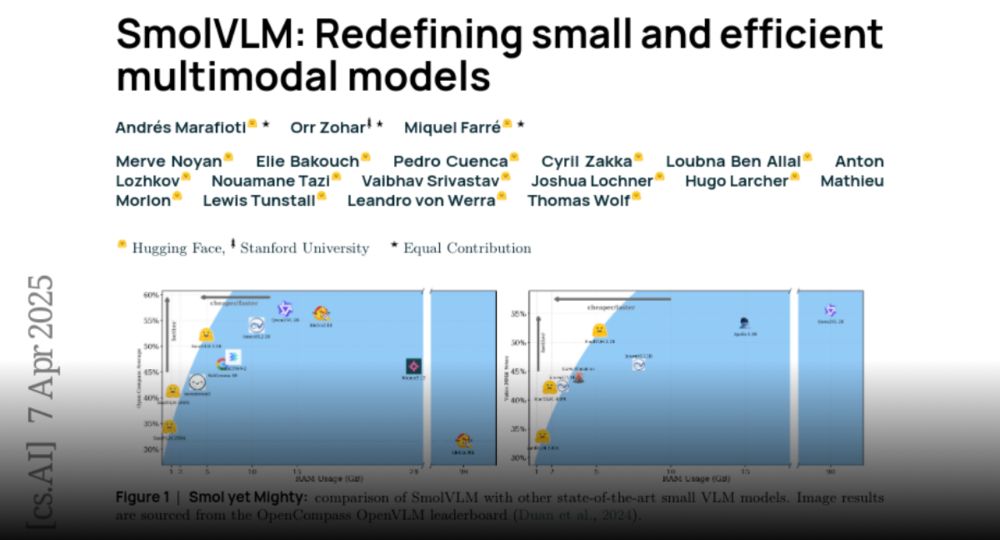

🔥 Explaining how to create a tiny 256M VLM that uses less than 1GB of RAM and outperforms our 80B models from 18 months ago!

huggingface.co/papers/2504....

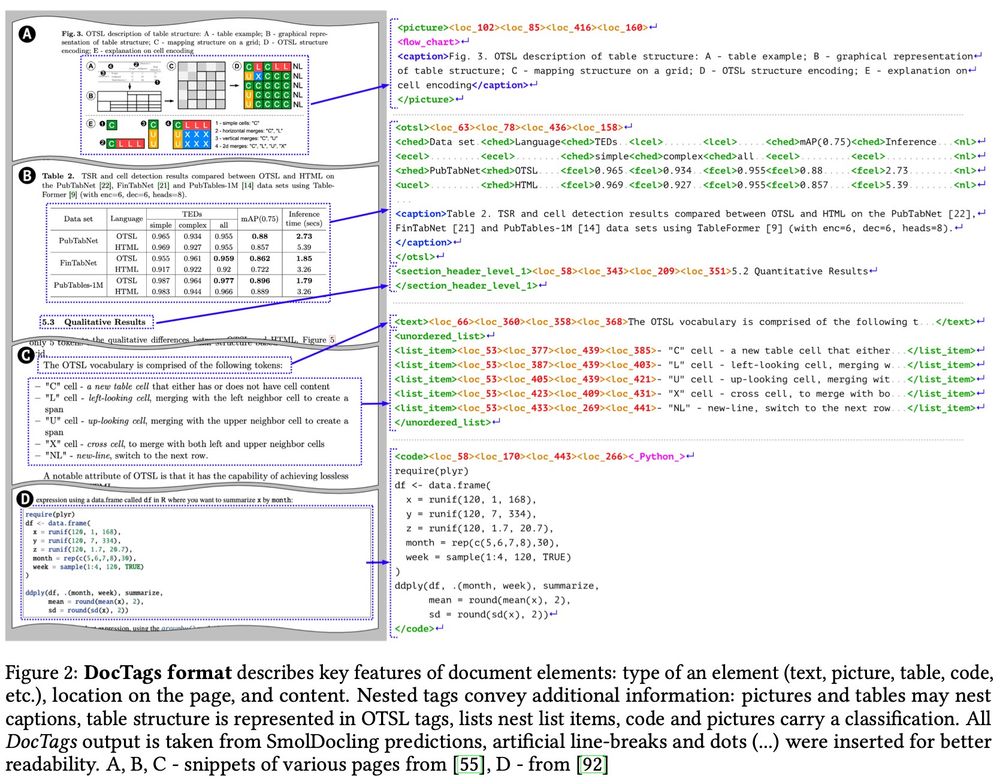

Lightning fast: processes a page in 0.35 sec on a consumer GPU using < 500MB VRAM ⚡

SOTA in document conversion, beating every competing model we tested (including models with 27x more params) 🤯

But how? 🧶⬇️

- 8B wins up to 81% of the time in its class, better than Gemini Flash

- 32B beats Llama 3.2 90B!

- Integrated on @hf.co from Day 0!

Check out their blog! huggingface.co/blog/aya-vis...

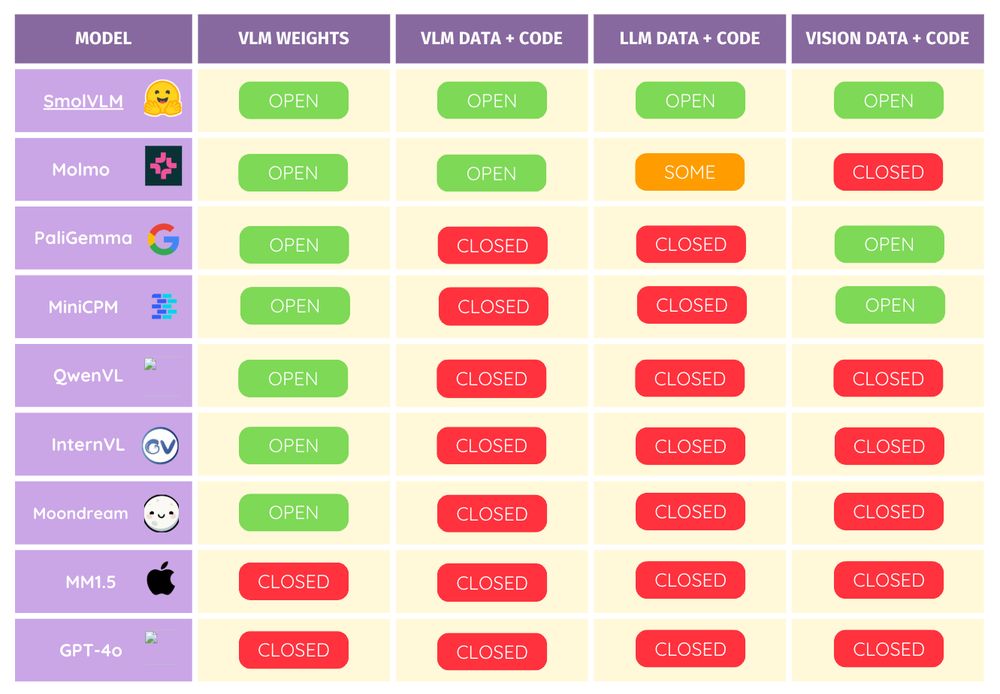

Inspired by our team's effort to open-source DeepSeek's R1, we are releasing the training and evaluation code on top of the weights 🫡

Now you can train any SmolVLM—or create your own custom VLMs!

SmolVLM (256M & 500M) runs on <1GB GPU memory.

Fine-tune it on your laptop and run it on your toaster. 🚀

Even the 256M model outperforms our Idefics 80B (Aug '23).

How small can we go? 👀
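A quick back-of-the-envelope check on the memory claim for the 256M model. This is only a sketch: it assumes 16-bit weights (the post does not state the precision) and counts weights alone, so activations, caches, and framework overhead come on top. The helper name `weight_memory_gb` is made up for illustration.

```python
def weight_memory_gb(n_params: float, bytes_per_param: int = 2) -> float:
    """Approximate memory for the model weights alone, in GB (10^9 bytes).

    bytes_per_param=2 assumes 16-bit weights (an assumption, not stated in the post).
    """
    return n_params * bytes_per_param / 1e9


params_256m = 256e6
print(f"16-bit weights: {weight_memory_gb(params_256m):.3f} GB")
print(f"32-bit weights: {weight_memory_gb(params_256m, 4):.3f} GB")
```

Under these assumptions the 16-bit weights take roughly half a gigabyte, which leaves headroom under 1GB for everything else.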

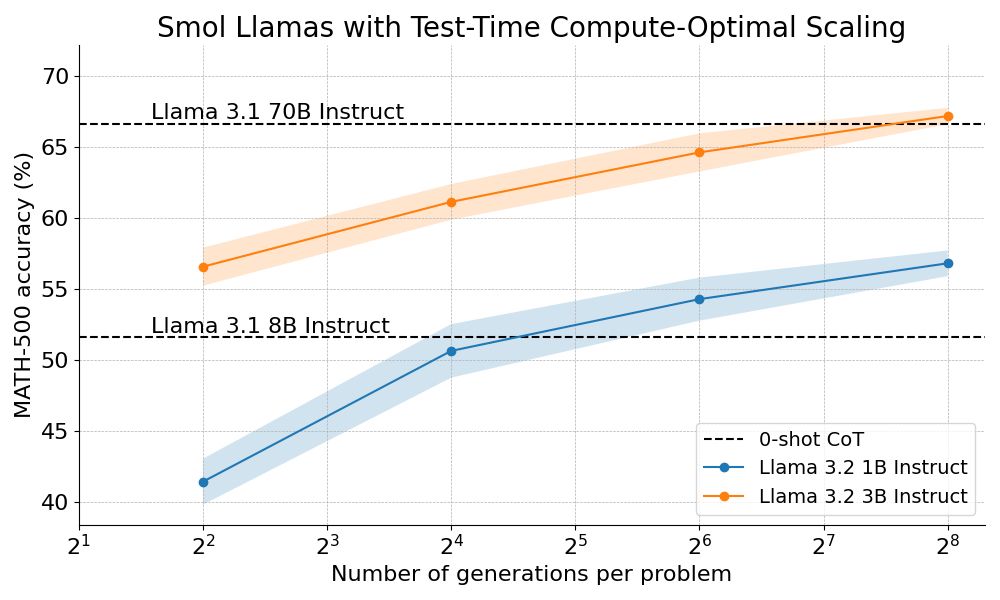

How? By combining step-wise reward models with tree search algorithms :)

We're open sourcing the full recipe and sharing a detailed blog post 👇
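To make "step-wise reward models plus tree search" a bit more concrete, here is a minimal sketch of one possible combination: beam search over partial solutions, where a reward function scores each partial path after every step. The post does not say which search algorithm the recipe uses, so beam search is an assumption here, and `toy_expand` and `toy_reward` are hypothetical stand-ins for a language model proposing next steps and a learned step-wise reward model.

```python
from typing import Callable, List, Tuple

Path = Tuple[str, ...]  # a partial solution: the sequence of steps so far


def step_beam_search(
    expand: Callable[[Path], List[str]],
    step_reward: Callable[[Path], float],
    beam_width: int = 2,
    max_steps: int = 3,
) -> Path:
    """Keep the `beam_width` best partial paths after every step."""
    beams: List[Path] = [()]
    for _ in range(max_steps):
        # Propose next steps for every surviving path.
        candidates = [path + (step,) for path in beams for step in expand(path)]
        if not candidates:
            break
        # Score each partial path with the step-wise reward and prune.
        candidates.sort(key=step_reward, reverse=True)
        beams = candidates[:beam_width]
    return beams[0]


# Hypothetical stand-ins: a real setup would sample steps from a model
# and score them with a trained reward model.
def toy_expand(path: Path) -> List[str]:
    return ["a", "b"]


def toy_reward(path: Path) -> float:
    return sum(1.0 for step in path if step == "a")


best = step_beam_search(toy_expand, toy_reward)
print(best)
```

The point of scoring every step rather than only the final answer is that weak branches get pruned early, so the compute goes to the more promising partial solutions.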

huggingface.co/chat/

2B params, does some amazing stuff, with little memory, quickly. I'll post a couple of examples below.

Super cool stuff from @merve.bsky.social & @andimara.bsky.social!

Check @andimara.bsky.social's Smol Tools for summarization and rewriting. It uses SmolLM2 to summarize text and make it more friendly or professional, all running locally thanks to llama.cpp github.com/huggingface/...

you're welcome

apply.workable.com/huggingface/...

SmolVLM can be fine-tuned on a Google Colab and run on a laptop! Or process millions of documents with a consumer GPU!

- ColSmolVLM performs better than ColPali and DSE-Qwen2 on all English tasks

- ColSmolVLM is more memory efficient than ColQwen2 💗

Find the model here huggingface.co/vidore/colsm...

#NeurIPS2024 #evaleval #AIEvaluation

Hugging Face empowers everyone to use AI to create value and is against the monopolization of AI; it's a hosting platform above all.

We need both!
