> git clone github.com/huggingface/...

> python train.py

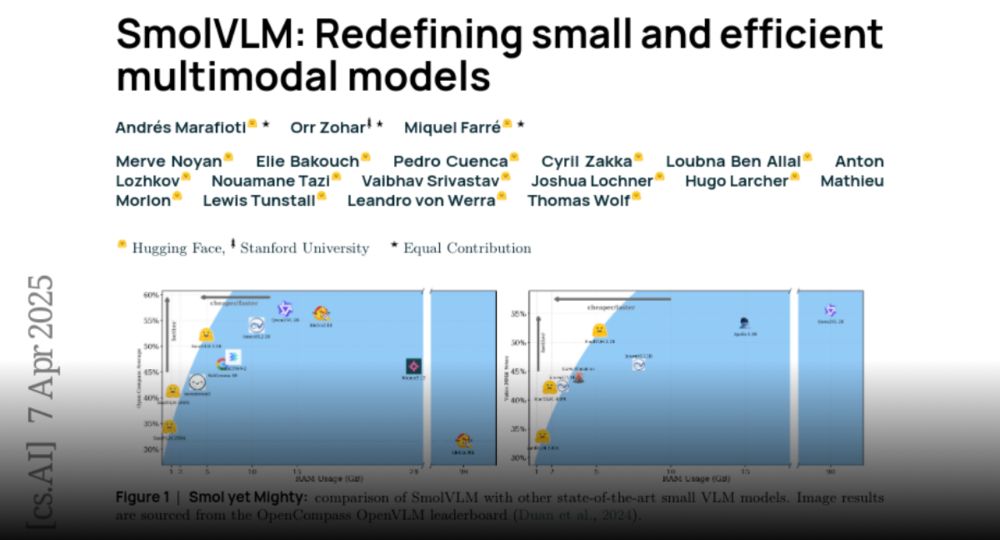

Check out the paper: huggingface.co/papers/2504....

🌐 Browser-based Inference? Yep! We get lightning-fast inference speeds of 40-80 tokens per second directly in a web browser. No tricks, just compact, efficient models!

✨ Longer videos, better results: Increasing video length during training enhanced performance on both video and image tasks.

✨ Learned positional tokens FTW: For compact models, learned positional tokens significantly outperform raw text tokens, enhancing efficiency and accuracy.

✨ Longer context = Big wins: Increasing the context length from 2K to 16K gave our tiny VLMs a 60% performance boost!

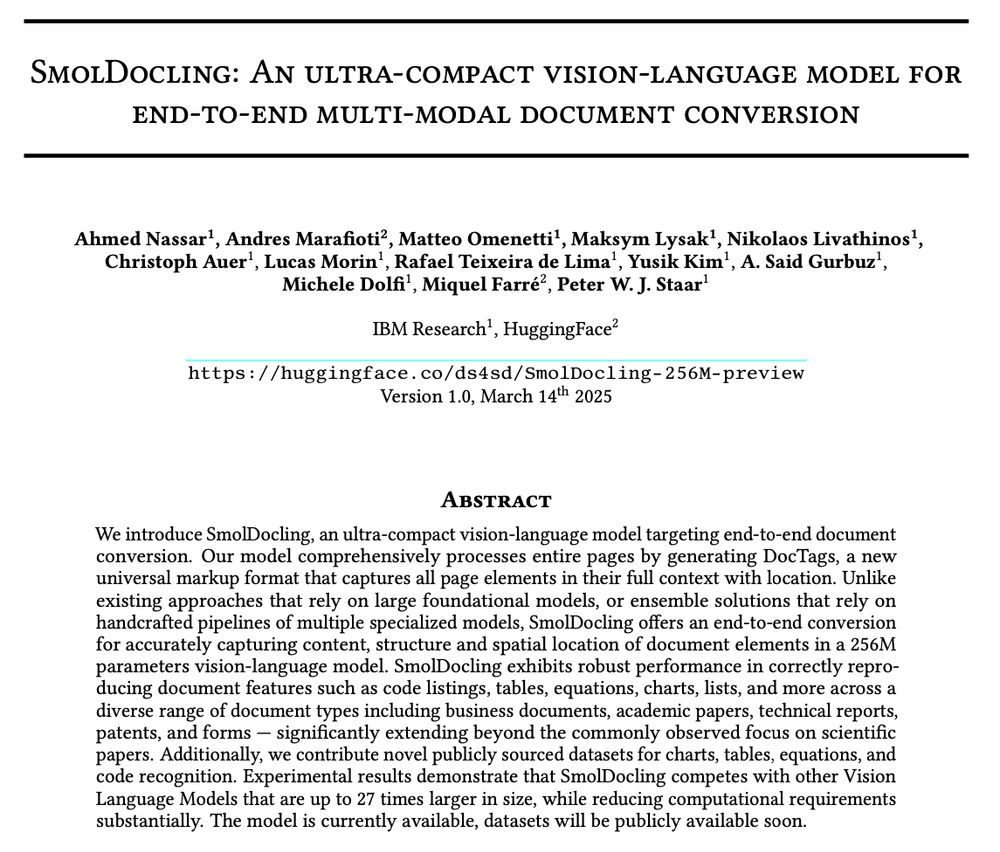

🔗 Model: huggingface.co/ds4sd/SmolDo...

📖 Paper: huggingface.co/papers/2503....

🤗 Space: huggingface.co/spaces/ds4sd...

Try it and let us know what you think! 💬

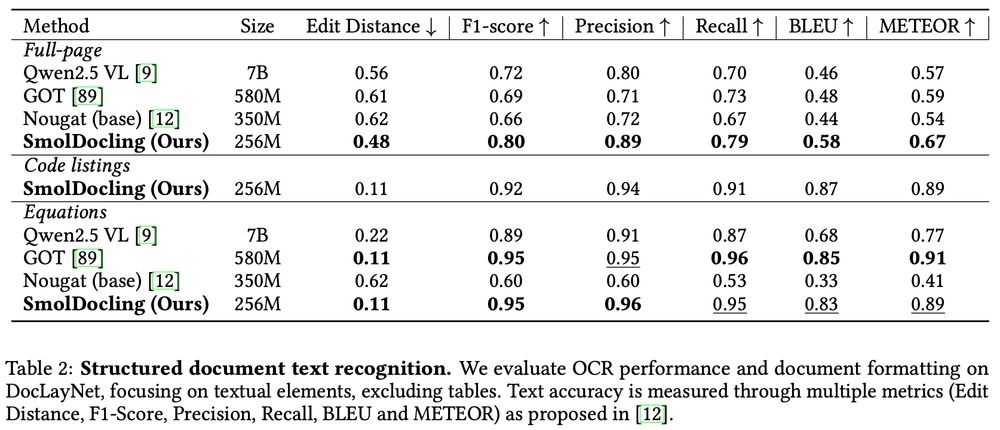

🖋️ Full-page transcription: Beats models 27× bigger!

📑 Equations: Matches or beats leading models like GOT

💻 Code recognition: We introduce the first benchmark for code OCR

📌 Handles everything a document has: tables, charts, code, equations, lists, and more

📌 Works beyond scientific papers—supports business docs, patents, and forms

📌 It runs with less than 1GB of RAM, so running at large batch sizes is super cheap!

✅ Full-page conversion

✅ Layout identification

✅ Equations, tables, charts, plots, code OCR

It works, and it has produced SOTA results at both the 256M and 80B sizes, but it's not production code.

Go check it out:

github.com/huggingface/...

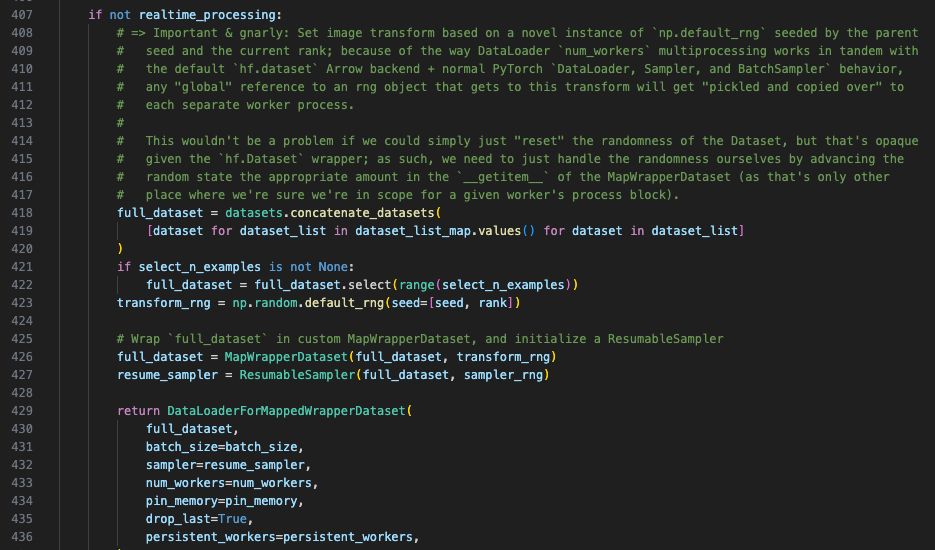

Start different random number generators based on a tuple (seed, rank)!
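A minimal sketch of the idea, assuming NumPy and a plain integer `rank` per worker. `make_rank_rng` is a hypothetical helper for illustration, not the repo's actual code. Feeding the whole `(seed, rank)` tuple into `SeedSequence` gives every rank its own reproducible stream from a single global seed, instead of naive tricks like `seed + rank` that can make streams overlap across runs.

```python
import numpy as np


def make_rank_rng(seed: int, rank: int) -> np.random.Generator:
    """Hypothetical helper: build a per-rank generator from (seed, rank)."""
    # SeedSequence hashes the full entropy tuple, so (42, 0) and (42, 1)
    # yield statistically independent streams, while the same tuple
    # always reproduces the same stream.
    return np.random.default_rng(np.random.SeedSequence([seed, rank]))


if __name__ == "__main__":
    seed = 42
    world_size = 4
    for rank in range(world_size):
        rng = make_rank_rng(seed, rank)
        # Each rank draws a different but reproducible sample.
        print(rank, rng.integers(0, 100, size=3))
```

Rerunning with the same `seed` reproduces every rank's draws exactly, which is what you want when debugging a multi-GPU job.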

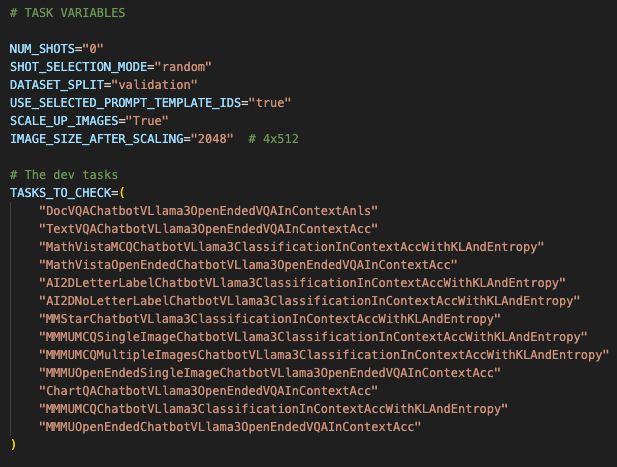

sbatch vision/experiments/evaluation/vloom/async_evals_tr_346/run_evals_0_shots_val_2048.slurm