nanoVLM: The simplest way to train your own Vision-Language Model in pure PyTorch, explained step by step!

Easy to read, even easier to use. Train your first VLM today!

All running locally with no installs. Just open the website.

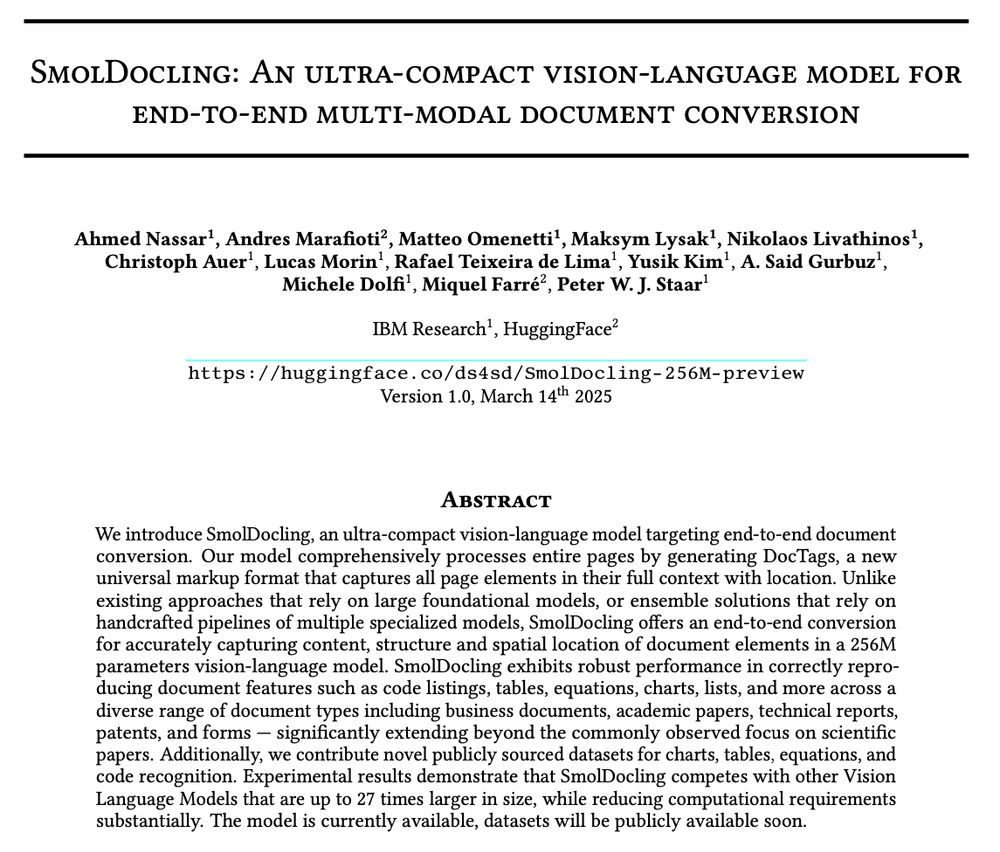

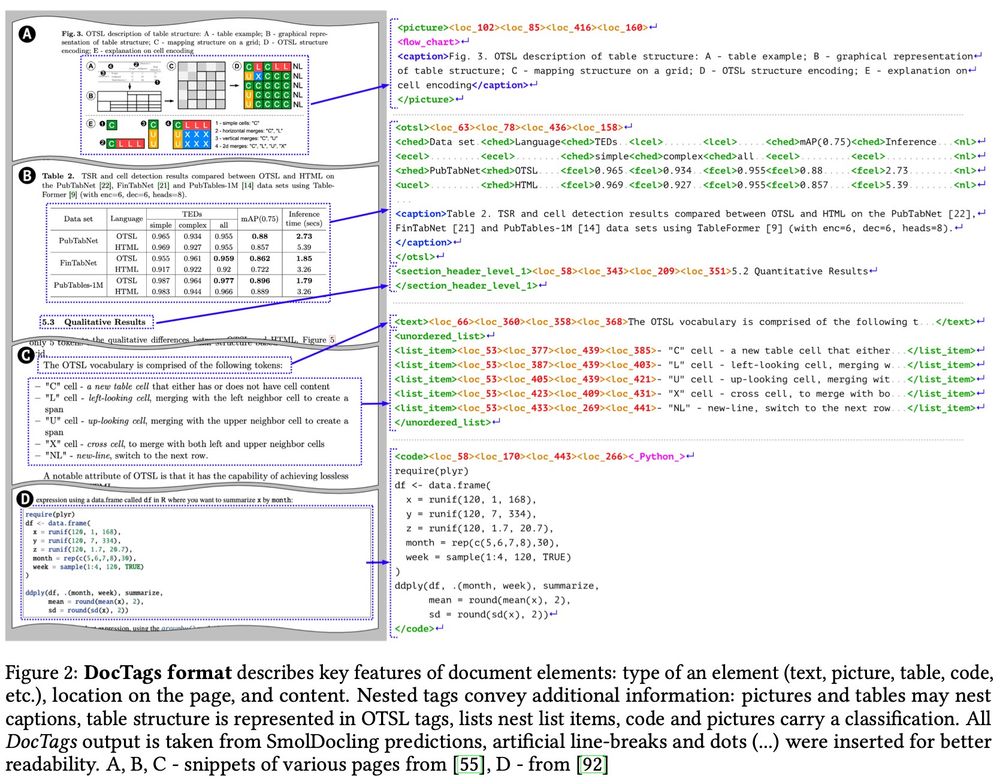

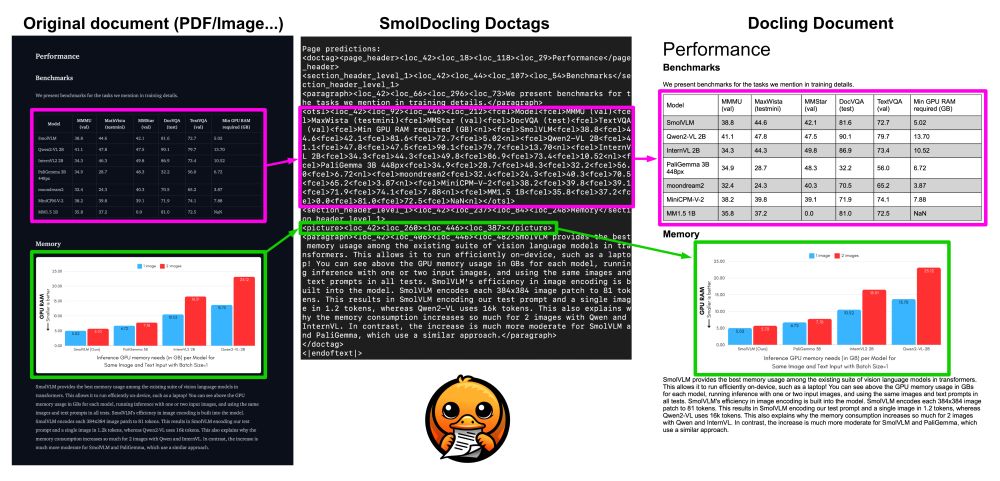

📌 Handles everything a document has: tables, charts, code, equations, lists, and more

📌 Works beyond scientific papers—supports business docs, patents, and forms

📌 It runs with less than 1GB of RAM, so running at large batch sizes is super cheap!

✅ Full-page conversion

✅ Layout identification

✅ Equations, tables, charts, plots, code OCR

Lightning fast: it processes a page in 0.35 sec on a consumer GPU using < 500MB VRAM ⚡

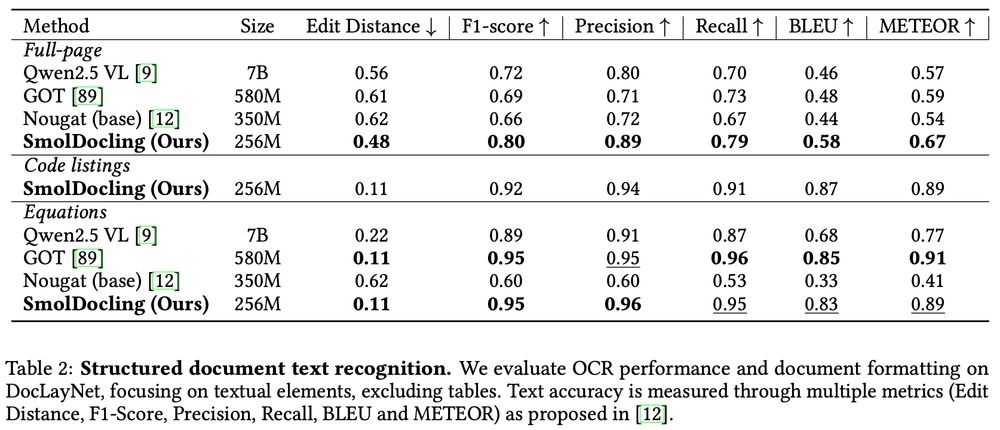

SOTA in document conversion, beating every competing model we tested (including models with 27x more params) 🤯

But how? 🧶⬇️

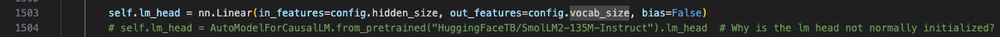

Start different random number generators based on a tuple (seed, rank)!
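
A minimal sketch of that idea, using only the Python standard library. The helper name `make_rng` and the hashing scheme are assumptions for illustration, not the code from the post; in PyTorch the same derived integer could be passed to `torch.Generator().manual_seed(...)`.

```python
import hashlib
import random


def make_rng(seed: int, rank: int) -> random.Random:
    """Return a generator whose stream depends on both seed and rank."""
    # Hash the (seed, rank) pair into one integer so that pairs such as
    # (0, 1) and (1, 0) do not collide the way a plain seed + rank would.
    digest = hashlib.sha256(f"{seed}:{rank}".encode()).digest()
    return random.Random(int.from_bytes(digest[:8], "big"))


# Hypothetical 4-process job: same base seed, one generator per rank.
base_seed = 42
draws = [make_rng(base_seed, rank).random() for rank in range(4)]
```

Each rank gets its own stream, and rerunning with the same (seed, rank) reproduces it exactly.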

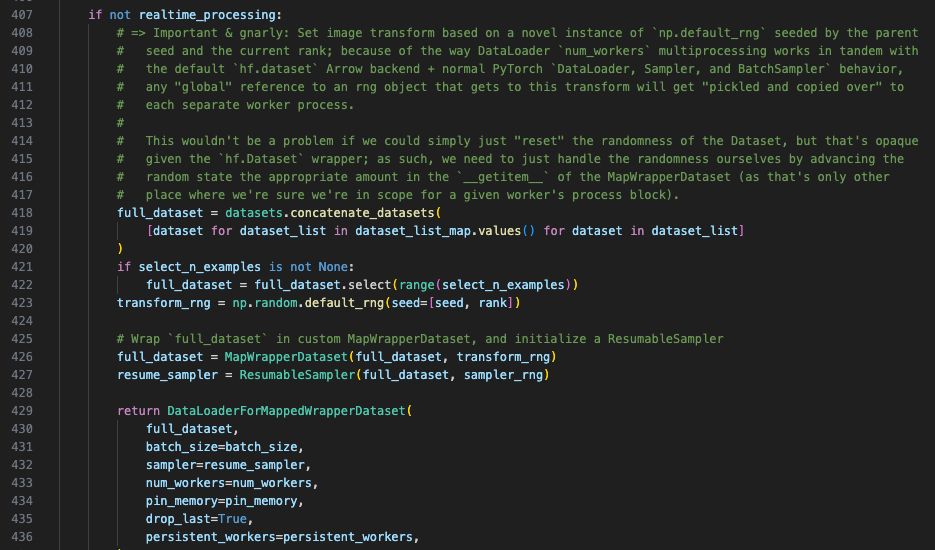

sbatch vision/experiments/evaluation/vloom/async_evals_tr_346/run_evals_0_shots_val_2048.slurm

./vision/experiments/pretraining/vloom/tr_341_smolvlm_025b_1st_stage/01_launch.sh

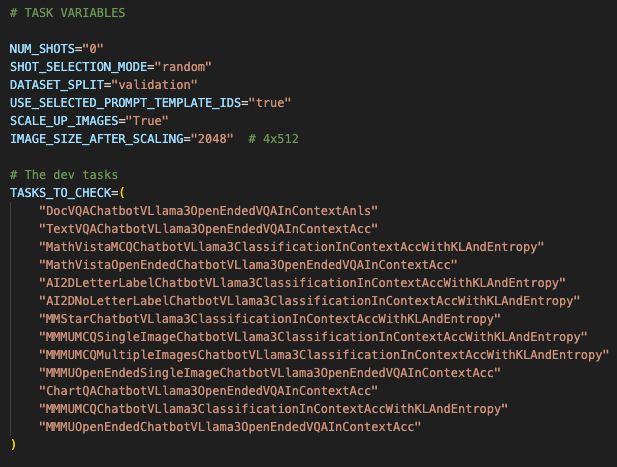

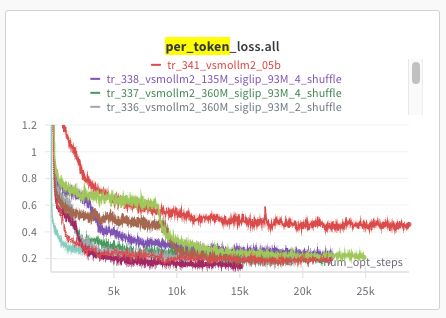

Then we use wandb to track the losses.

Check out the file to find out details!

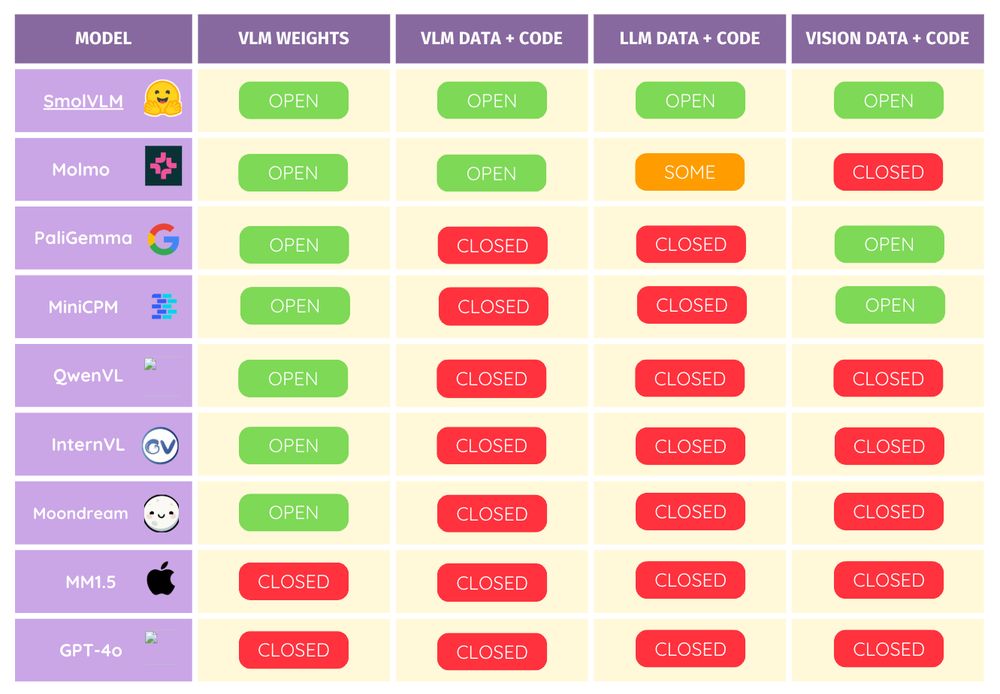

Inspired by our team's effort to open-source DeepSeek's R1, we are releasing the training and evaluation code on top of the weights 🫡

Now you can train any SmolVLM—or create your own custom VLMs!

• New vision encoder: Smaller but higher res.

• Improved data mixtures: better OCR and doc understanding.

• Higher pixels/token: 4096 vs. 1820 = more efficient.

• Smart tokenization: Faster training and better performance. 🚀

Better, faster, smarter.
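
To make the pixels/token numbers concrete, here is a rough back-of-the-envelope sketch. It assumes the token count is simply the pixel count divided by pixels per token, which ignores tiling, resizing, and any special tokens the real models add; the `visual_tokens` helper and the 512x512 image are hypothetical.

```python
import math


def visual_tokens(width: int, height: int, pixels_per_token: int) -> int:
    """Approximate number of image tokens for a width x height image."""
    return math.ceil(width * height / pixels_per_token)


# Hypothetical 512x512 image at the two ratios quoted above.
new = visual_tokens(512, 512, 4096)
old = visual_tokens(512, 512, 1820)
print(new, old)
```

Under this simplification, the higher ratio encodes the same image in well under half as many tokens, which is where the efficiency gain would come from.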

SmolVLM makes it faster and cheaper to build searchable databases.

Real-world impact, unlocked.

• 256M delivers 80% of the performance of our 2.2B model.

• 500M hits 90%.

Both beat our SOTA 80B model from 17 months ago! 🎉

Efficiency 🤝 Performance

Explore the collection here: huggingface.co/collections/...

Blog: huggingface.co/blog/smolervlm

SmolVLM (256M & 500M) runs on <1GB GPU memory.

Fine-tune it on your laptop and run it on your toaster. 🚀

Even the 256M model outperforms our Idefics 80B (Aug '23).

How small can we go? 👀

Demo: huggingface.co/spaces/Huggi...

Blog:

Model: huggingface.co/HuggingFaceT...

Fine-tuning script: github.com/huggingface/...

These two models have the same number of parameters, but Qwen2-VL's expensive image encoding makes it unsuited for on-device applications!

Qwen2-VL crashes my MacBook Pro M3, but we get 17 tokens per second with SmolVLM and MLX!

SmolVLM can be fine-tuned on Google Colab and run on a laptop! Or process millions of documents with a consumer GPU!