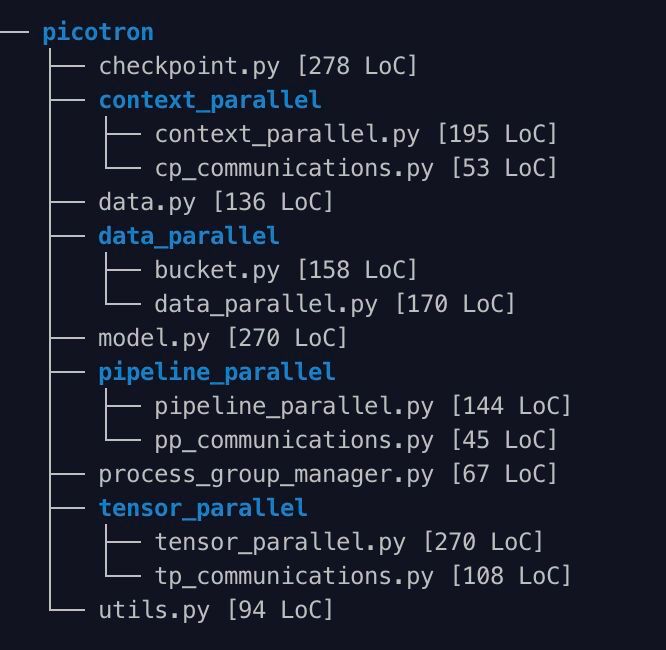

Enter picotron: implementing all 4D parallelism concepts in separate, readable files totaling just 1988 LoC!
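To make the "4D" part concrete, here is a minimal sketch of how a flat GPU rank can be mapped onto a four-dimensional process grid. It assumes the four dimensions are data, pipeline, context and tensor parallelism, and that tensor parallelism varies fastest; the `Grid4D` class and that ordering are illustrative assumptions, not picotron's actual code.

```python
from dataclasses import dataclass
from typing import Dict


@dataclass(frozen=True)
class Grid4D:
    """Hypothetical 4D process grid (not taken from picotron)."""
    dp: int  # data-parallel size
    pp: int  # pipeline-parallel size
    cp: int  # context-parallel size
    tp: int  # tensor-parallel size

    @property
    def world_size(self) -> int:
        # Every GPU belongs to exactly one cell of the grid.
        return self.dp * self.pp * self.cp * self.tp

    def coords(self, rank: int) -> Dict[str, int]:
        """Map a flat rank to its index along each parallel dimension."""
        if not 0 <= rank < self.world_size:
            raise ValueError(f"rank {rank} outside world of size {self.world_size}")
        # Assumed ordering: tp varies fastest, then cp, then pp, then dp.
        rest, tp_rank = divmod(rank, self.tp)
        rest, cp_rank = divmod(rest, self.cp)
        dp_rank, pp_rank = divmod(rest, self.pp)
        return {"dp": dp_rank, "pp": pp_rank, "cp": cp_rank, "tp": tp_rank}


grid = Grid4D(dp=2, pp=2, cp=1, tp=2)
for rank in range(grid.world_size):
    print(rank, grid.coords(rank))
```

Putting tensor parallelism on the fastest-varying axis keeps ranks that communicate most often next to each other, which is a common layout choice but still an assumption here.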

however cool your LLM is, without being agentic it can only go so far

enter smolagents: a new agent library by @hf.co to make the LLM write code, do analysis and automate boring stuff! huggingface.co/blog/smolage...

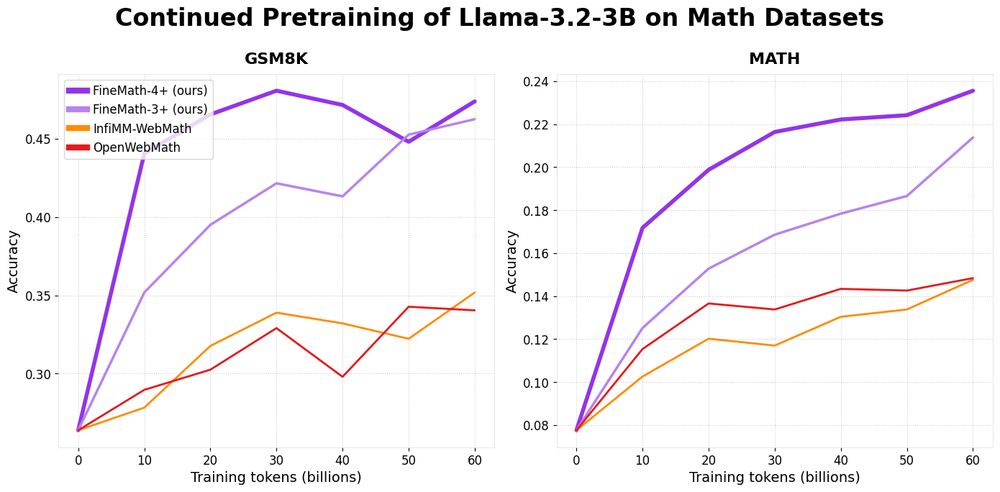

Math remains challenging for LLMs and by training on FineMath we see considerable gains over other math datasets, especially on GSM8K and MATH.

🤗 huggingface.co/datasets/Hug...

Here’s a breakdown 🧵

The agent can load data, execute code, plot results and follow your guidance and ideas!

A very natural way to collaborate with an LLM over data, and it's just scratching the surface of what will soon be possible!

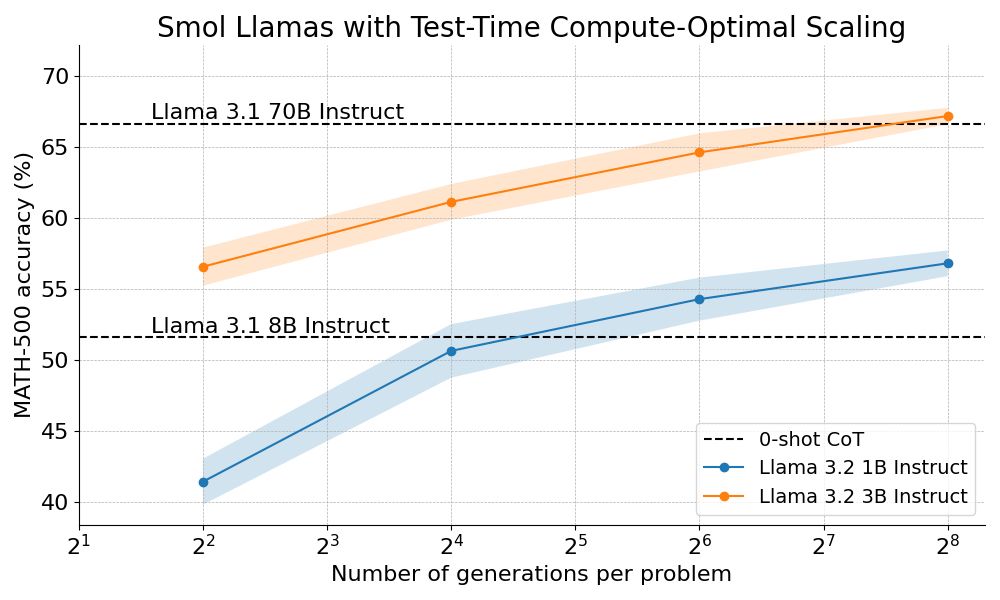

How? By combining step-wise reward models with tree search algorithms :)

We're open sourcing the full recipe and sharing a detailed blog post 👇
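As a rough illustration of the idea, the sketch below runs beam search (one simple tree search) over partial solutions, ranking them with a step-wise scorer. Everything here is a toy stand-in: `propose_steps` plays the role of an LLM suggesting next steps and `step_reward` plays the role of a reward model. Neither comes from the recipe mentioned above.

```python
from typing import Callable, List, Sequence, Tuple

Path = Tuple[int, ...]  # a partial solution, here just a sequence of numbers


def beam_search(
    propose: Callable[[Path], Sequence[int]],
    score: Callable[[Path], float],
    beam_width: int,
    max_steps: int,
) -> Path:
    """Keep the `beam_width` best partial solutions after every step."""
    beams: List[Path] = [()]
    for _ in range(max_steps):
        # Expand every surviving partial solution by one candidate step.
        candidates = [path + (step,) for path in beams for step in propose(path)]
        if not candidates:
            break
        # The step-wise scorer ranks partial solutions, not only final answers.
        candidates.sort(key=score, reverse=True)
        beams = candidates[:beam_width]
    return max(beams, key=score)


TARGET = 7  # toy task: pick three numbers that sum to TARGET


def propose_steps(path: Path) -> Sequence[int]:
    # Stand-in for sampling next reasoning steps from an LLM.
    return (1, 2, 3)


def step_reward(path: Path) -> float:
    # Stand-in for a reward model: closer to the target is better.
    return -abs(TARGET - sum(path))


best = beam_search(propose_steps, step_reward, beam_width=2, max_steps=3)
print(best, sum(best))
```

Scoring after every step lets the search drop weak branches early instead of paying to complete them.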

We are thrilled to launch LeMaterial, an open-source project in collaboration with @hf.co to accelerate materials discovery ⚛️🤗

Discover LeMat-Bulk: a 6.7M-entry dataset standardizing and unifying Materials Project, Alexandria and OQMD

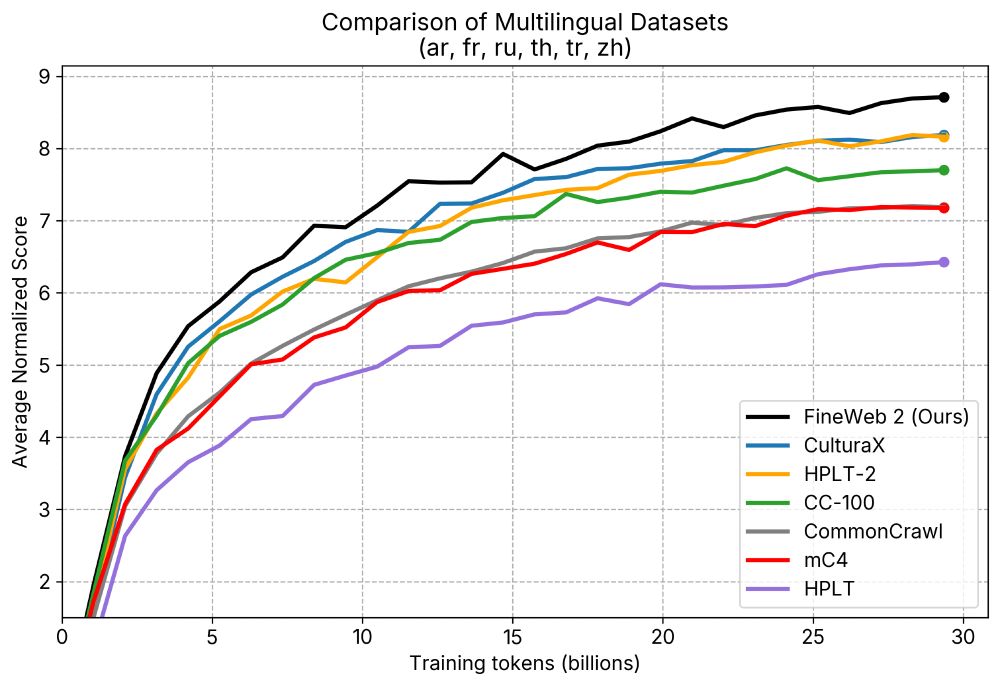

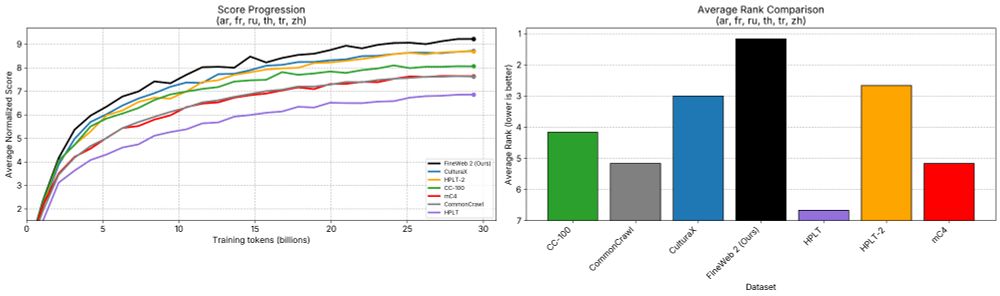

We applied the same data-driven approach that led to SOTA English performance in 🍷 FineWeb to thousands of languages.

🥂 FineWeb2 has 8TB of compressed text data and outperforms other datasets.

FineWeb 2 extends the data-driven approach to pre-training dataset design introduced in FineWeb 1 and now covers 1893 languages/scripts

Details: huggingface.co/datasets/Hug...

A detailed open-science tech report is coming soon

US: apply.workable.com/huggingface/...

EMEA: apply.workable.com/huggingface/...

Powered by 🤗 Transformers.js and ONNX Runtime Web!

How many tokens/second do you get? Let me know! 👇

We need both!

SmolVLM can be fine-tuned on a Google Colab and run on a laptop! Or process millions of documents with a consumer GPU!

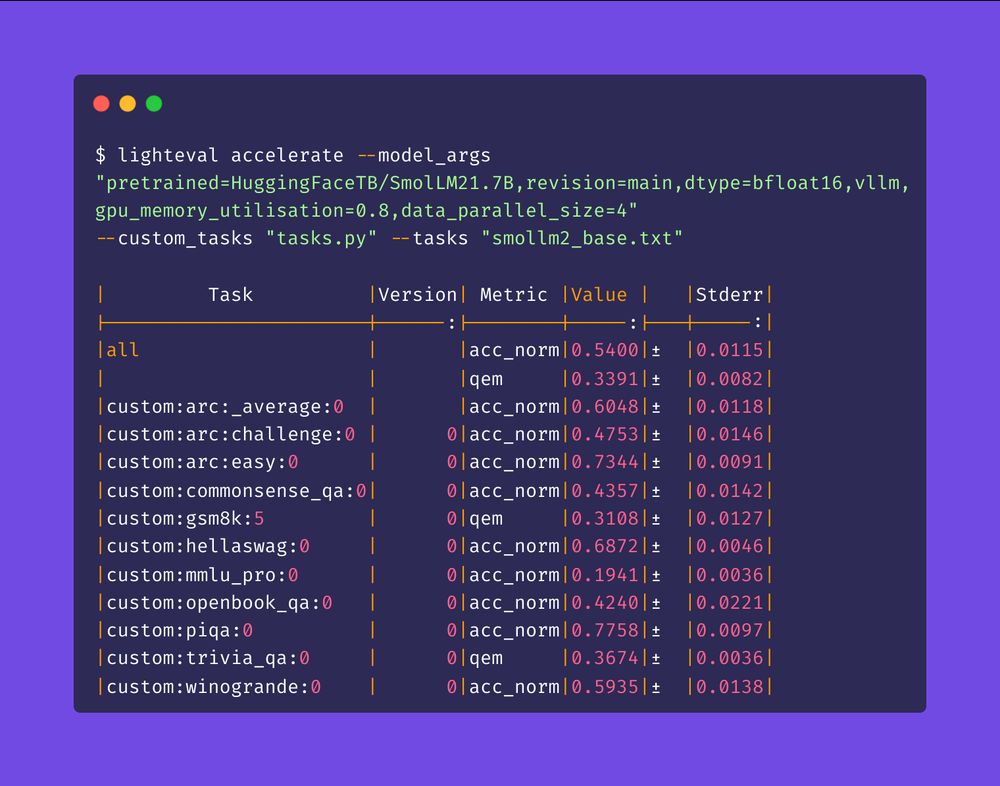

* any dataset on the 🤗 Hub can become an eval task in a few lines of code: customize the prompt, metrics, parsing, few-shots, everything!

* model- and data-parallel inference

* auto batching with the new vLLM backend
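To show what "customize the prompt, metrics, parsing" can mean in practice, here is a library-free sketch of an eval task built from three small functions. The `EvalTask` class, its field names and the toy rows are hypothetical illustrations, not the API of the library described above.

```python
from dataclasses import dataclass
from typing import Callable, Dict, Iterable

Row = Dict[str, str]  # one dataset example, e.g. {"question": ..., "answer": ...}


@dataclass
class EvalTask:
    """Hypothetical task definition: prompt, parsing and metric are pluggable."""
    prompt_fn: Callable[[Row], str]          # dataset row -> model prompt
    parse_fn: Callable[[str], str]           # raw model output -> answer
    metric_fn: Callable[[str, str], float]   # (prediction, gold) -> score
    gold_key: str = "answer"

    def evaluate(self, model: Callable[[str], str], rows: Iterable[Row]) -> float:
        scores = [
            self.metric_fn(self.parse_fn(model(self.prompt_fn(row))), row[self.gold_key])
            for row in rows
        ]
        # Mean score over all rows; 0.0 for an empty dataset.
        return sum(scores) / len(scores) if scores else 0.0


task = EvalTask(
    prompt_fn=lambda row: f"Question: {row['question']}\nAnswer:",
    parse_fn=lambda text: text.strip().split()[-1],   # keep the last token
    metric_fn=lambda pred, gold: float(pred == gold),  # exact match
)

rows = [
    {"question": "2 + 2", "answer": "4"},
    {"question": "3 + 5", "answer": "8"},
]


def toy_model(prompt: str) -> str:
    # Stand-in for a real model: always gives the same answer.
    return "The answer is 4"


accuracy = task.evaluate(toy_model, rows)
print(accuracy)
```

In a real setup the rows would come from a dataset on the Hub and `toy_model` would be replaced by an actual inference backend.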

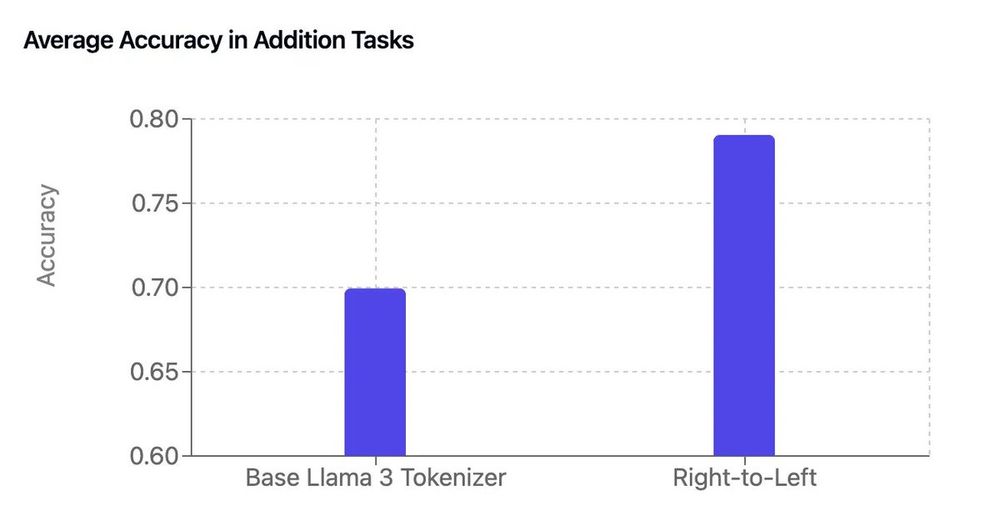

By adding a few lines of code to the base Llama 3 tokenizer, he got a free boost in arithmetic performance 😮

[thread]

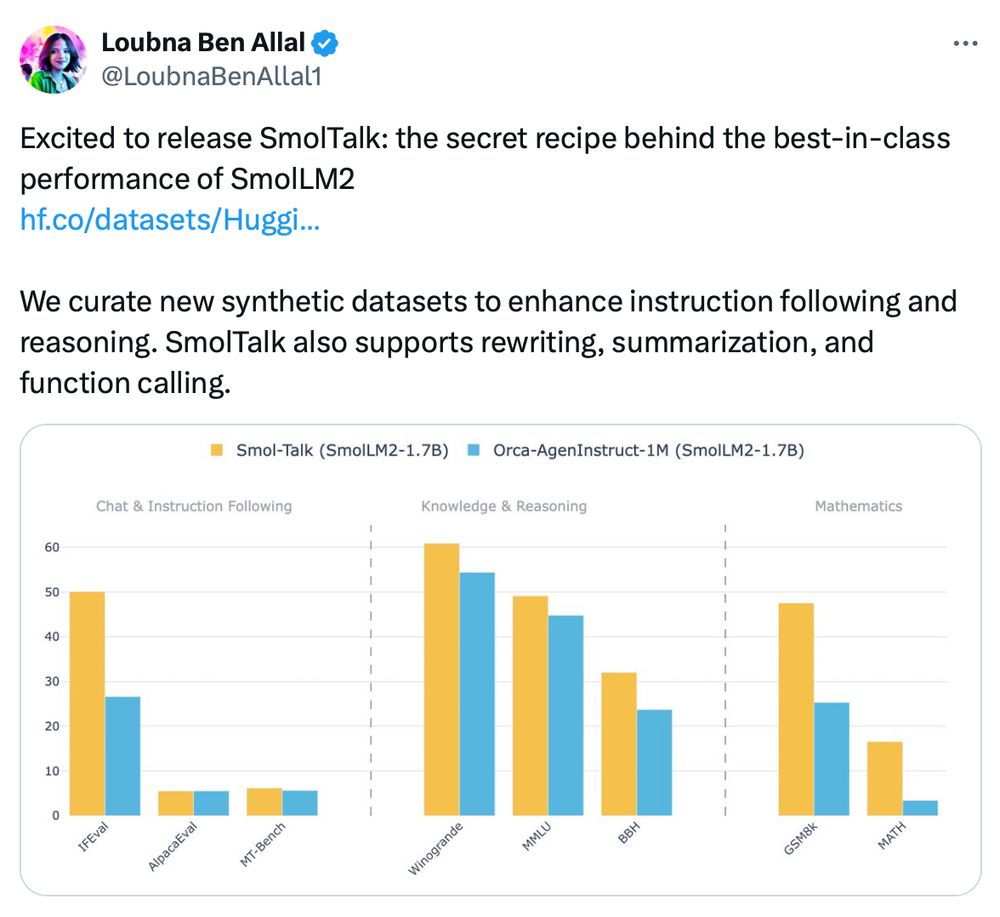

Unsurprisingly: data, data, data!

SmolTalk is open and available here: huggingface.co/datasets/Hug...

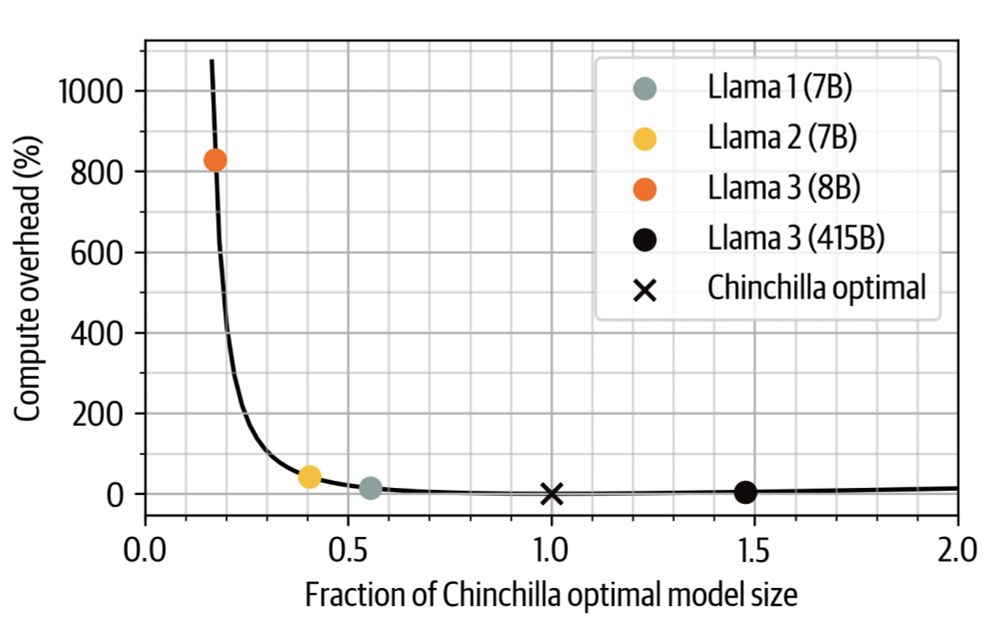

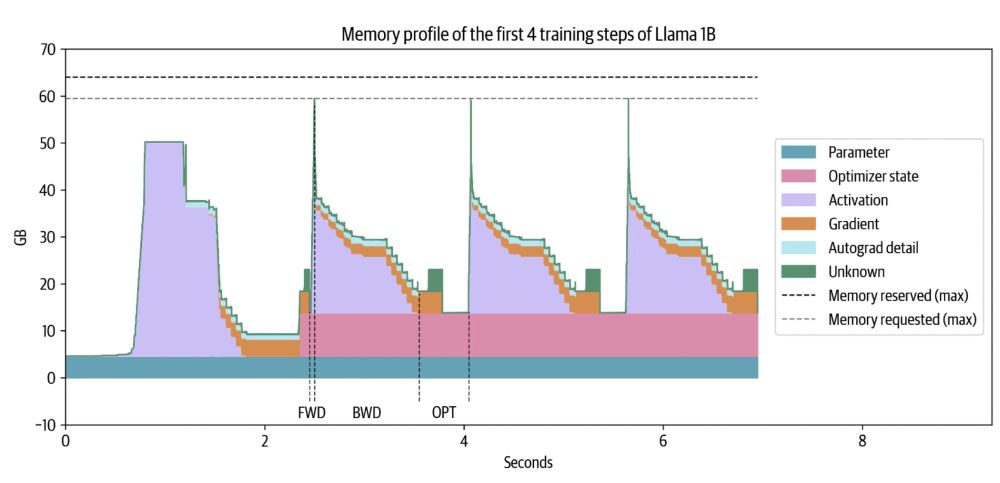

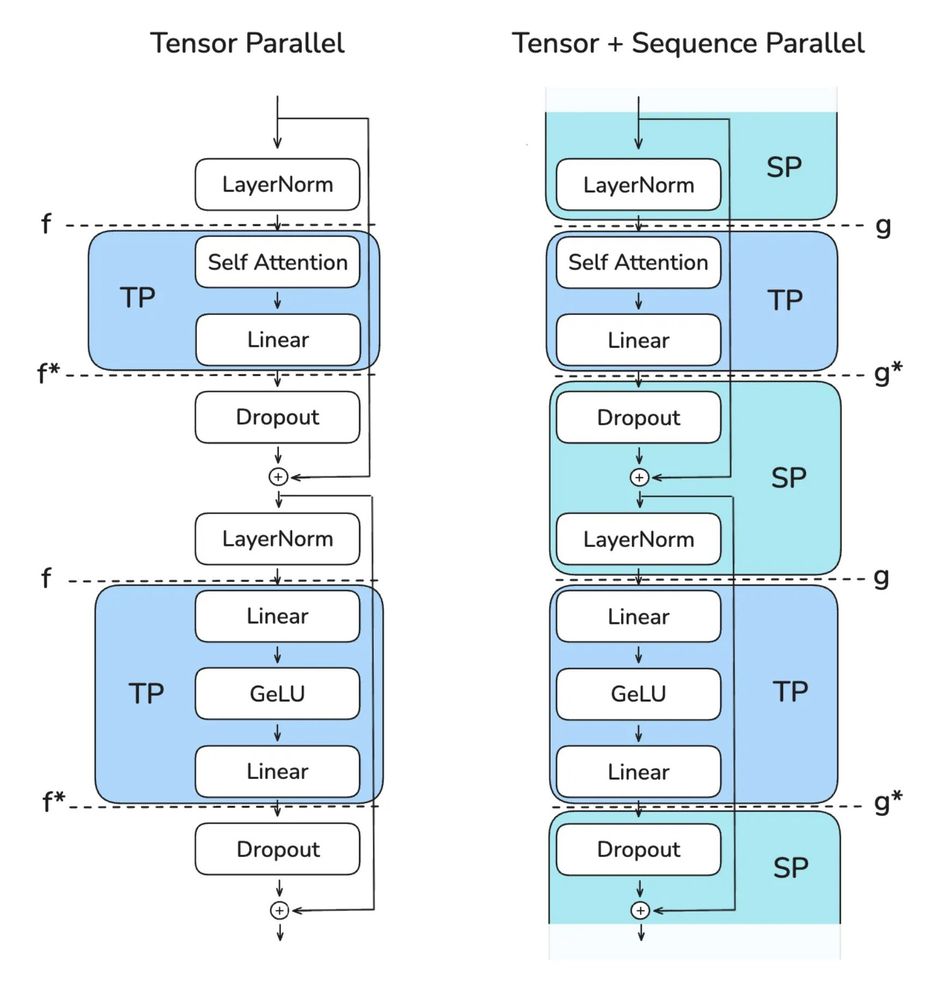

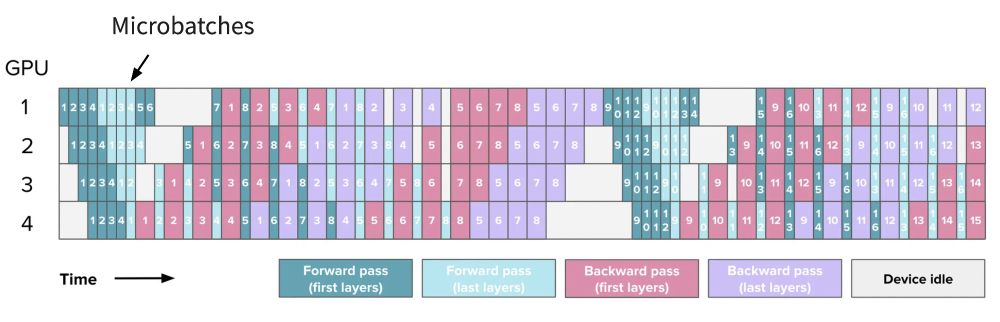

Gave a workshop at Uni Bern: it starts with scaling laws, moves on to web-scale data processing, and finishes with training using 4D parallelism and ZeRO.

*assuming your home includes an H100 cluster
