📖 Co-author of "NLP with Transformers" book

💥 Ex-particle physicist

🤘 Occasional guitarist

🇦🇺 in 🇨🇭

huggingface.co/datasets/ope...

The community has been busy distilling DeepSeek-R1 from inference providers, but we decided to have a go at doing it ourselves from scratch 💪

More details in 🧵

Follow along: github.com/huggingface/...

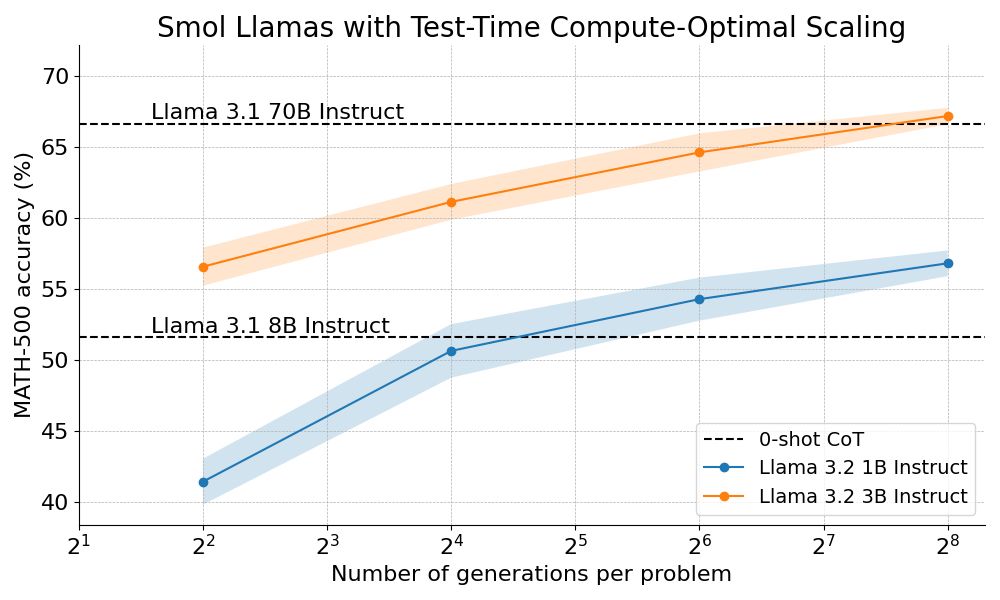

How? By combining step-wise reward models with tree search algorithms :)

We're open sourcing the full recipe and sharing a detailed blog post 👇
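For readers who want a feel for the idea before opening the recipe, here is a minimal sketch of beam search guided by a step-wise reward model. It assumes a toy setup: `propose_steps` and `prm_score` are hypothetical stand-ins for an LLM that samples the next reasoning step and a process reward model (PRM) that scores a partial solution. It is not the released recipe.

```python
import random
from typing import List

# Hypothetical stand-in for an LLM: extend a partial solution with k sampled next steps.
def propose_steps(prefix: List[str], k: int, rng: random.Random) -> List[List[str]]:
    return [prefix + [f"step{len(prefix)}-{rng.randint(0, 9)}"] for _ in range(k)]

# Hypothetical stand-in for a process reward model: score the latest step in [0, 1].
def prm_score(prefix: List[str]) -> float:
    return int(prefix[-1].split("-")[-1]) / 9.0

def prm_beam_search(n_beams: int, expand: int, depth: int, seed: int = 0) -> List[List[str]]:
    """Keep the n_beams highest-scoring partial solutions after every reasoning step."""
    rng = random.Random(seed)
    beams: List[List[str]] = [[] for _ in range(n_beams)]
    for _ in range(depth):
        # Expand every beam, then let the step-wise reward decide which prefixes survive.
        candidates = [c for beam in beams for c in propose_steps(beam, expand, rng)]
        candidates.sort(key=prm_score, reverse=True)
        beams = candidates[:n_beams]
    return beams

if __name__ == "__main__":
    for beam in prm_beam_search(n_beams=4, expand=3, depth=3):
        print(beam, round(prm_score(beam), 2))
```

Because all beams compete in a single pool, a few strong prefixes can crowd out the rest, which is one reason diversity becomes a concern as the number of beams grows.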

Hey ML peeps, at Hugging Face we found a nice extension to beam search that is far more scalable and produces more diverse candidates

The basic idea is to split your N beams into N/M subtrees and then run greedy node selection in parallel

Does anyone know what this algorithm is called?
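A minimal sketch of the idea in the post, under toy assumptions: the N beams are split into N/M independent subtrees, and each subtree samples M candidate next steps and greedily keeps the one the reward model scores highest. `propose_steps` and `prm_score` are hypothetical stand-ins for an LLM and a step-wise reward model, and the thread pool only illustrates that the subtrees can run in parallel.

```python
import random
from concurrent.futures import ThreadPoolExecutor
from typing import List, Tuple

# Hypothetical stand-in for an LLM: extend a partial solution with k sampled next steps.
def propose_steps(prefix: List[str], k: int, rng: random.Random) -> List[List[str]]:
    return [prefix + [f"step{len(prefix)}-{rng.randint(0, 9)}"] for _ in range(k)]

# Hypothetical stand-in for a step-wise reward model: score the latest step in [0, 1].
def prm_score(prefix: List[str]) -> float:
    return int(prefix[-1].split("-")[-1]) / 9.0

def greedy_subtree(job: Tuple[int, int, int]) -> List[str]:
    """Grow one subtree: at each step sample M candidates and keep only the best one."""
    m, depth, seed = job
    rng = random.Random(seed)  # independent RNG per subtree keeps subtrees decoupled
    path: List[str] = []
    for _ in range(depth):
        path = max(propose_steps(path, m, rng), key=prm_score)
    return path

def split_beam_search(n: int, m: int, depth: int, seed: int = 0) -> List[List[str]]:
    """Split N beams into N/M subtrees and run greedy node selection in each one."""
    if m <= 0 or n % m != 0:
        raise ValueError("n must be a positive multiple of m")
    jobs = [(m, depth, seed + i) for i in range(n // m)]
    with ThreadPoolExecutor() as pool:
        return list(pool.map(greedy_subtree, jobs))

if __name__ == "__main__":
    for path in split_beam_search(n=8, m=4, depth=3):
        print(path, round(prm_score(path), 2))
```

Each step still costs N samples in total (N/M subtrees times M candidates), but since the subtrees never compete with each other, no single strong prefix can take over every beam, which is where the extra diversity comes from.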