Presenting Tian Yun’s paper on abstract reasoners at CoNLL on Thursday.

I’ve been investigating how LLMs internally compose functions lately. Happy to chat about that (among other things) and hang out in Vienna!

arxiv.org/abs/2503.219...

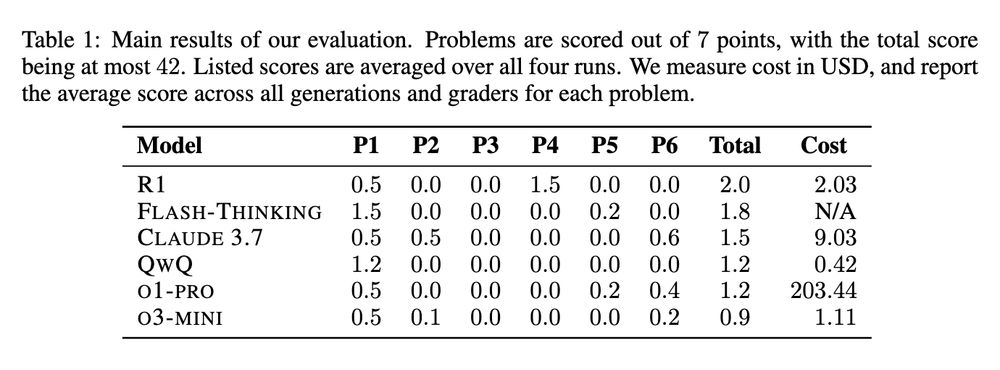

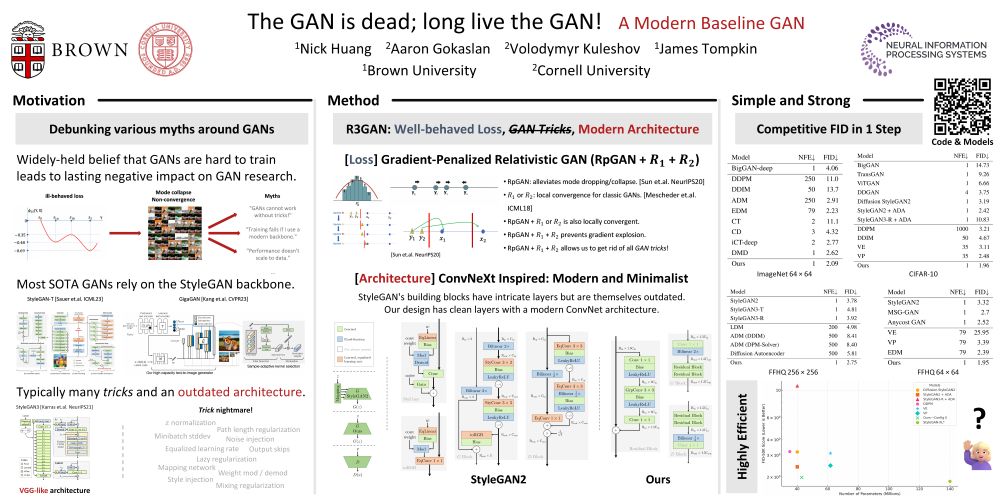

Let's debunk that, shall we?

github.com/apoorvkh/tor...

Docs: torchrun.xyz

`(uv) pip install torchrunx` today!

(w/ the very talented Peter Curtin, Brown CS '25)

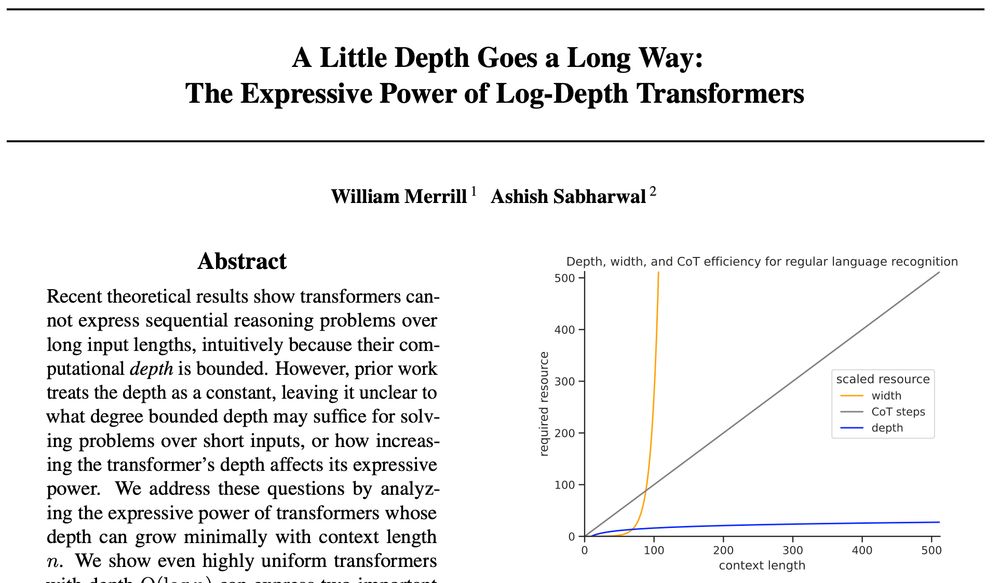

We take this as encouraging for further research on looped transformers!🧵

We want models that match our values...but could this hurt their diversity of thought?

Preprint: arxiv.org/abs/2411.04427

blog.apoorvkh.com/posts/projec...

Cohere For AI (www.youtube.com/playlist?lis...)

Simons Institute (www.youtube.com/@SimonsInsti...)

Internally, I’ve been developing and using a library that makes this extremely easy, and I decided to open-source it.

Meet the decoding library: github.com/benlipkin/de...

1/7

I was lucky enough to work on almost every stage of the pipeline in one way or another. Some comments + highlights ⬇️
