Using complexity theory and formal languages to understand the power and limits of LLMs

https://lambdaviking.com/ https://github.com/viking-sudo-rm

I've definitely missed inviting some people who might be interested, so please email me if you'd like to attend (NYC or Zoom)

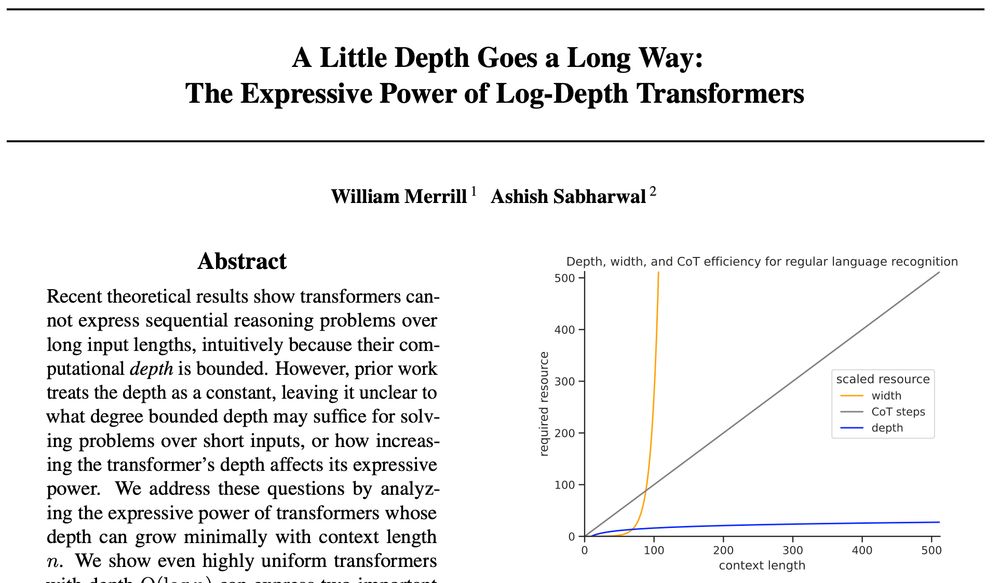

We take this as encouraging for further research on looped transformers!🧵

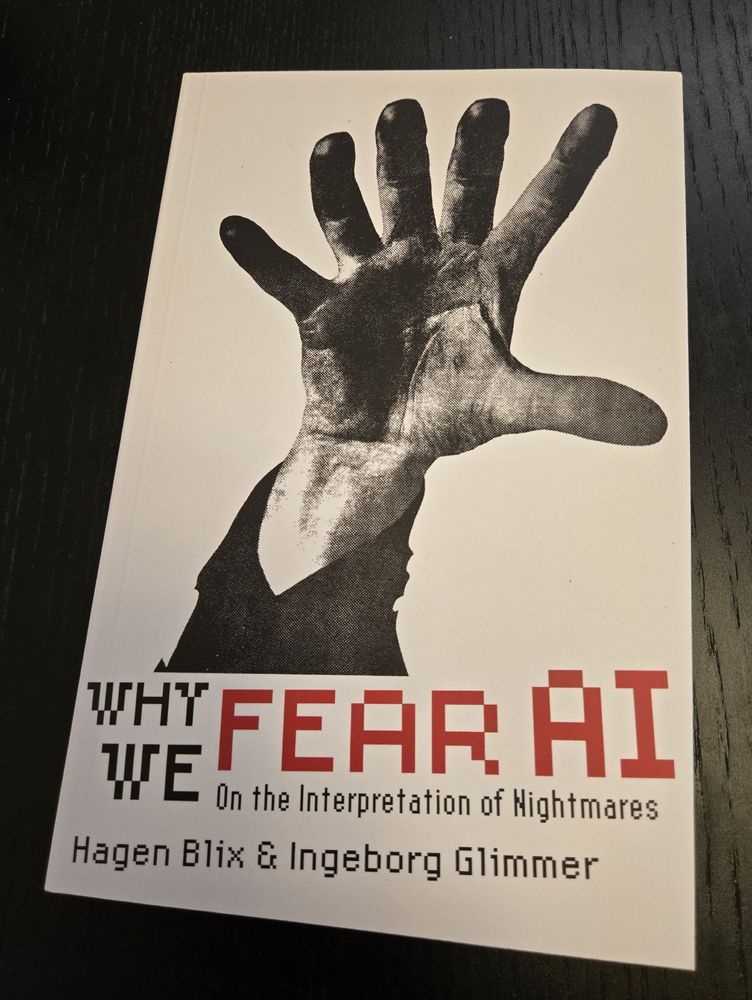

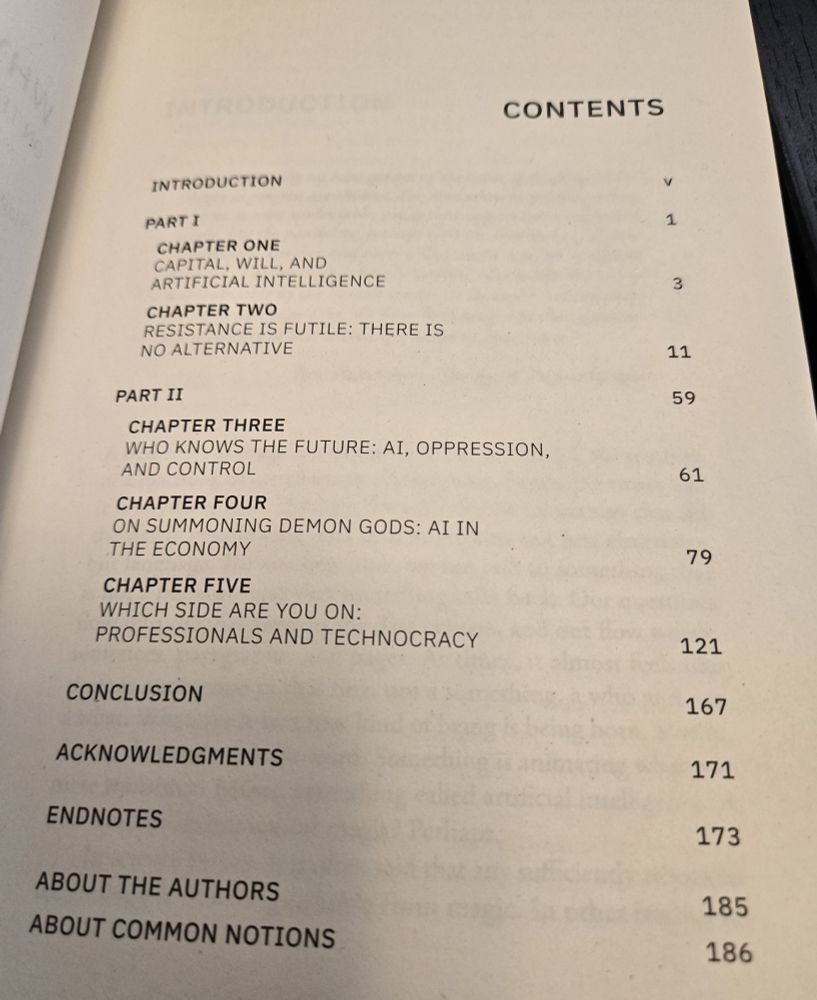

Amazing job from @commonnotions.bsky.social! Love the cover design from Josh MacPhee <3

Get a copy here:

www.commonnotions.org/why-we-fear-ai

Further details on registration and whatnot to follow in the coming weeks. Please do circulate to anyone you know who may be interested in attending!

www.semanticscholar.org/paper/On-the...
