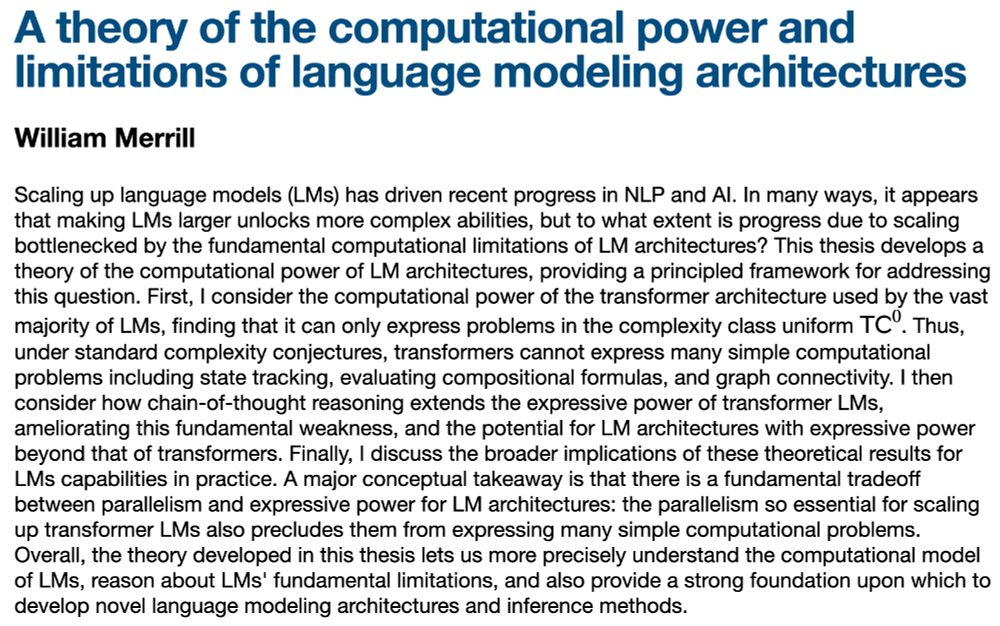

William Merrill

@lambdaviking.bsky.social

Will irl - PhD student @ NYU on the academic job market!

Using complexity theory and formal languages to understand the power and limits of LLMs

https://lambdaviking.com/ https://github.com/viking-sudo-rm


I'll be defending my dissertation at NYU next Monday, June 16 at 4pm ET!

I've definitely missed inviting some people who might be interested, so please email me if you'd like to attend (NYC or Zoom)


June 9, 2025 at 9:24 PM


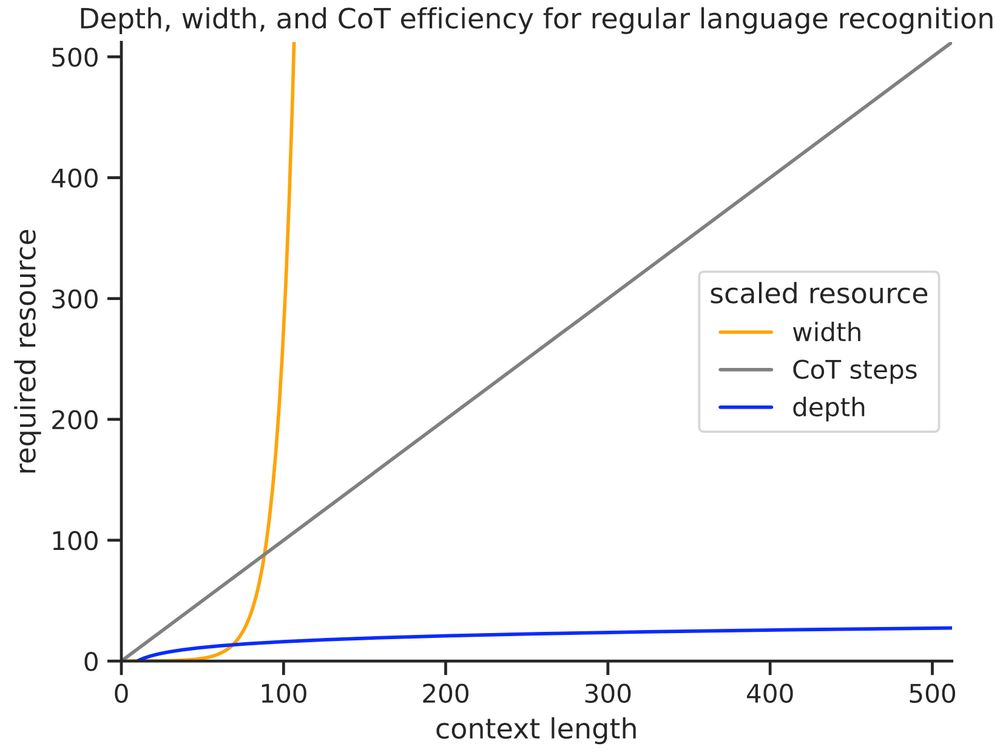

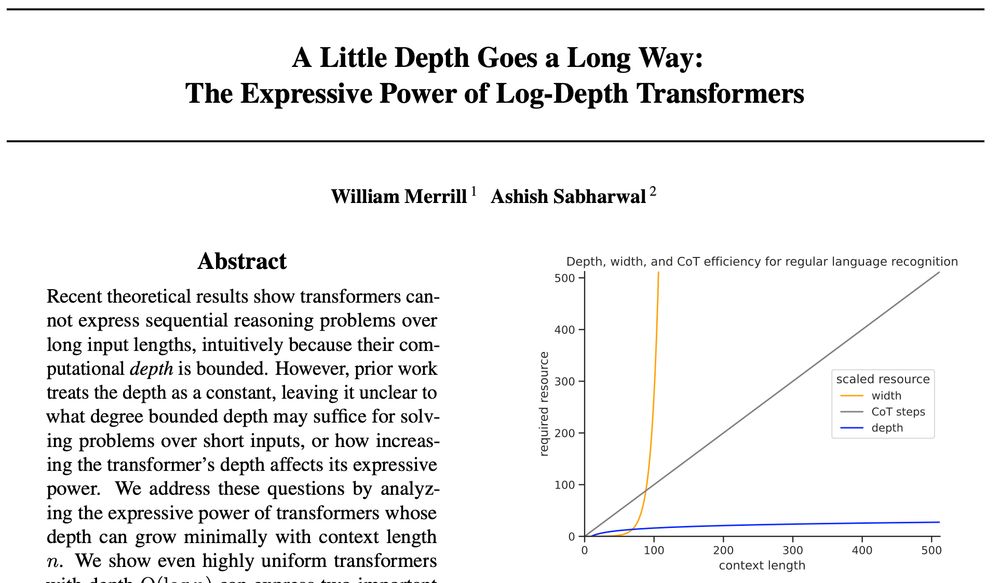

Our results suggest that dynamic depth can be a more efficient form of test-time compute than chain of thought (at least for regular languages). While CoT would use ~n steps to recognize a regular language on strings of length n, looped transformers only need ~log n depth.

March 7, 2025 at 4:46 PM
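The ~n versus ~log n contrast can be illustrated with the classical parallel-prefix view of a finite automaton. Each symbol induces a map from states to states, and composing adjacent maps pairwise in a balanced tree needs only ⌈log₂ n⌉ rounds instead of n sequential steps. This is a minimal sketch under stated assumptions, not the looped-transformer construction from the preprint. The example DFA (strings containing "ab") and the helpers `compose`, `run_sequential`, and `run_tree` are hypothetical, and one tree round is only loosely analogous to one extra unit of depth.

```python
from typing import Dict, Tuple

# A transition map on states 0..k-1: t[q] is the state reached from q.
Transition = Tuple[int, ...]

# Hypothetical example DFA over {"a", "b"} accepting strings that contain "ab".
# State 0: no progress, 1: last symbol was "a", 2: "ab" seen (absorbing, accepting).
DELTA: Dict[str, Transition] = {
    "a": (1, 1, 2),
    "b": (0, 2, 2),
}
START, ACCEPT = 0, {2}


def compose(f: Transition, g: Transition) -> Transition:
    """Return the map that applies f first, then g."""
    return tuple(g[f[q]] for q in range(len(f)))


def run_sequential(delta: Dict[str, Transition], start: int, word: str) -> Tuple[int, int]:
    """CoT-style recognition: one state update per symbol."""
    q = start
    for c in word:
        q = delta[c][q]
    return q, len(word)  # (final state, number of sequential steps)


def run_tree(delta: Dict[str, Transition], start: int, word: str) -> Tuple[int, int]:
    """Compose per-symbol maps pairwise; each loop iteration is one parallel round."""
    if not word:
        return start, 0
    level = [delta[c] for c in word]
    rounds = 0
    while len(level) > 1:
        # Neighbouring pairs are independent, so a whole round could run in parallel.
        nxt = [compose(level[i], level[i + 1]) for i in range(0, len(level) - 1, 2)]
        if len(level) % 2 == 1:
            nxt.append(level[-1])  # odd leftover map is carried to the next round
        level = nxt
        rounds += 1
    return level[0][start], rounds  # rounds == ceil(log2(len(word)))


def accepts(word: str) -> bool:
    return run_tree(DELTA, START, word)[0] in ACCEPT
```

For a 16-symbol input such as `"ba" * 8`, `run_sequential` takes 16 steps while `run_tree` reaches the same final state in 4 rounds. Composition of transition maps is associative, which is what makes the tree order valid; it is not commutative, which is why each pair keeps the earlier map on the left.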


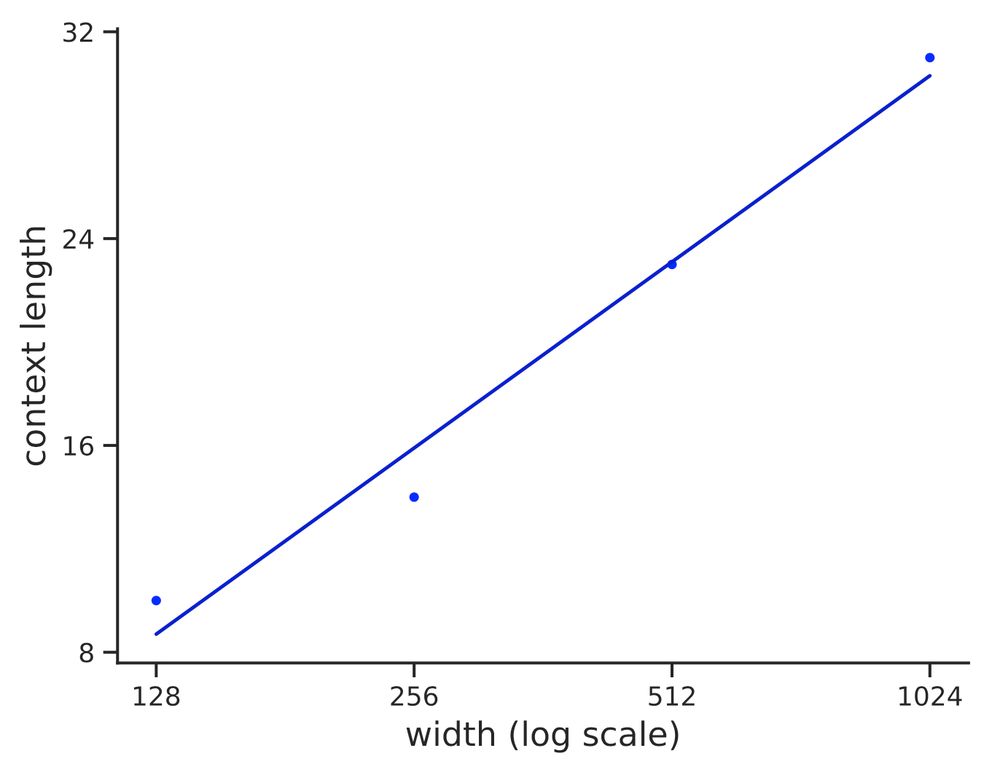

In contrast, both in theory and practice, width must grow exponentially with sequence length to enable regular language recognition. Thus, while slightly increasing depth expands expressive power, increasing width to gain power is intractable!

March 7, 2025 at 4:46 PM


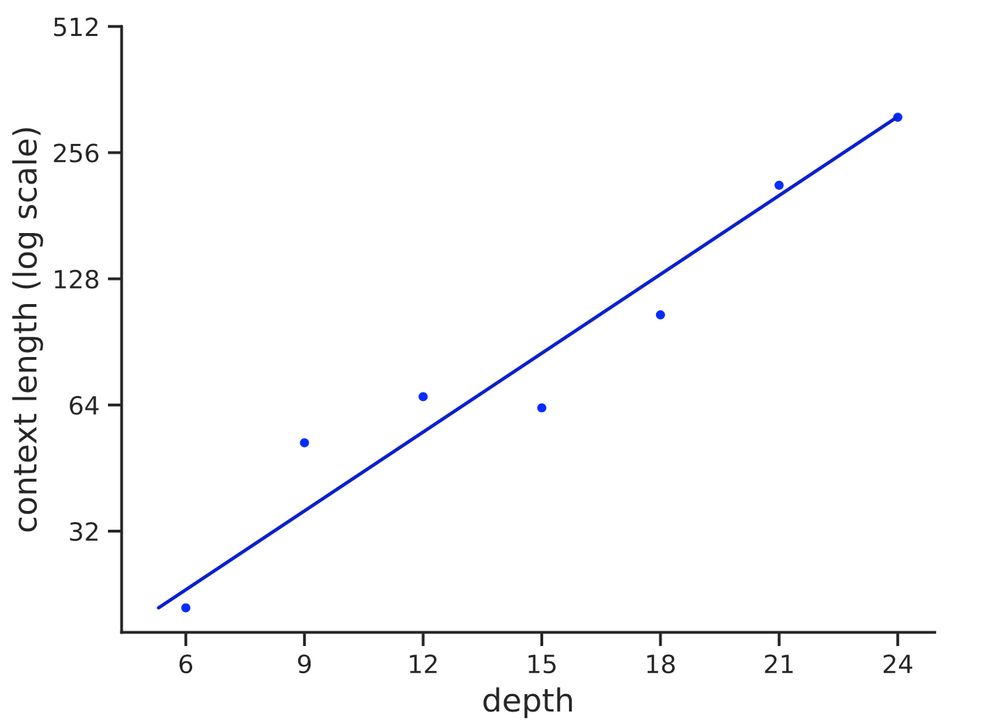

In practice, can transformers learn to solve these problems with log depth?

We find that the depth required to recognize strings of length n grows roughly as log n (r² = 0.93). Thus, log depth appears necessary and sufficient to recognize regular languages in practice, matching our theory.


March 7, 2025 at 4:46 PM
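As a hedged sketch of what an r² for "depth grows ~ log n" measures: fit depth ≈ a·log₂(n) + b by ordinary least squares and report the fraction of variance in depth that the fit explains. The post does not say how the fit was done, so the linear-in-log model and the function name `fit_log_depth` are assumptions; the choice of log base only rescales a and leaves r² unchanged.

```python
import math
from typing import Sequence, Tuple


def fit_log_depth(ns: Sequence[int], depths: Sequence[float]) -> Tuple[float, float, float]:
    """Least-squares fit of depth ≈ a * log2(n) + b; returns (a, b, r_squared)."""
    xs = [math.log2(n) for n in ns]
    m = len(xs)
    if m != len(depths) or m < 2:
        raise ValueError("need at least two (n, depth) pairs of matching length")
    mean_x = sum(xs) / m
    mean_y = sum(depths) / m
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, depths))
    if sxx == 0:
        raise ValueError("all n are equal; slope is undefined")
    a = sxy / sxx
    b = mean_y - a * mean_x
    # r^2 = 1 - (residual sum of squares) / (total sum of squares)
    ss_res = sum((y - (a * x + b)) ** 2 for x, y in zip(xs, depths))
    ss_tot = sum((y - mean_y) ** 2 for y in depths)
    if ss_tot == 0:
        raise ValueError("all depths are equal; r^2 is undefined")
    return a, b, 1 - ss_res / ss_tot
```

Usage with placeholder inputs (not measurements from the paper): `a, b, r2 = fit_log_depth([2, 4, 8, 16], [1, 2, 3, 4])`. An r² close to 1 means depth is well described by a straight line in log n over the lengths tested.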


✨How does the depth of a transformer affect its reasoning capabilities? A new preprint by @Ashish_S_AI and me shows that a little depth goes a long way to increase transformers’ expressive power

We take this as encouraging for further research on looped transformers!🧵


March 7, 2025 at 4:46 PM


🔥 Old Norse poetry gen

The Vikings call, say now,

OLMo 2, the ruler of languages.

May your words fly over the seas,

all over the world, for you are wise.

Wordsmith, balanced and aligned,

for you the skalds themselves sing,

your soul, which hears new lifeforms,

may it live long and tell a saga.


November 28, 2024 at 6:19 PM
