currently doing PhD @uwcse,

prev @usyd @ai2

🇦🇺🇨🇦🇬🇧

ivison.id.au

ivison.id.au/2026/02/02/r...

We’re excited to host an AMA to answer your Qs about OLMo, our family of open language models.

🗓️ When: May 8, 8-10 am PT

🌐 Where: r/huggingface

🧠 Why: Gain insights from our expert researchers

Chat soon!

Models with it: OLMo 2 7B, 13B, 32B Instruct; Tulu 3, 3.1 8B; Tulu 3 405b

Turns out, when you look at large, varied data pools, lots of recent methods lag behind simple baselines, and a simple embedding-based method (RDS) does best!

More below ⬇️ (1/8)

TESS 2 is an instruction-tuned diffusion LM that can perform close to AR counterparts for general QA tasks, trained by adapting from an existing pretrained AR model.

📜 Paper: arxiv.org/abs/2502.13917

🤖 Demo: huggingface.co/spaces/hamis...

More below ⬇️

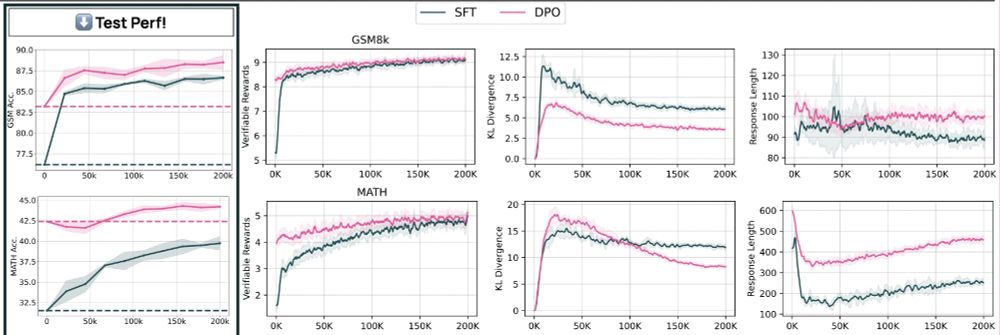

We are happy to "quietly" release our latest GRPO-trained Tulu 3.1 model, which is considerably better in MATH and GSM8K!

As phones get faster, more AI will happen on device. With OLMoE, researchers, developers, and users can get a feel for this future: fully private LLMs, available anytime.

Learn more from @soldaini.net👇 youtu.be/rEK_FZE5rqQ

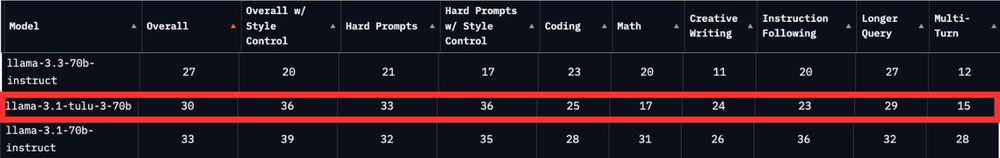

Scaling up the Tulu recipe to 405B works pretty well! We mainly see this as confirmation that open-instruct scales to large-scale training -- more exciting and ambitious things to come!

Particularly happy it is top 20 for Math and Multi-turn prompts :)

All the details and data on how to train a model this good are right here: arxiv.org/abs/2411.15124

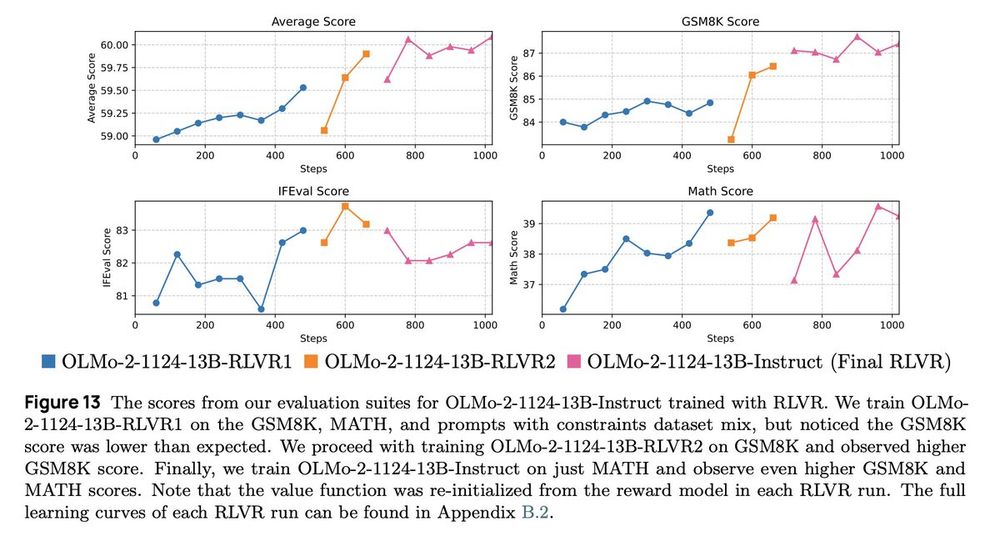

This time, we applied RLVR iteratively! Our initial RLVR checkpoint on the RLVR dataset mix shows a low GSM8K score, so we did another RLVR on GSM8K only and another on MATH only 😆.

And it works! A thread 🧵 1/N

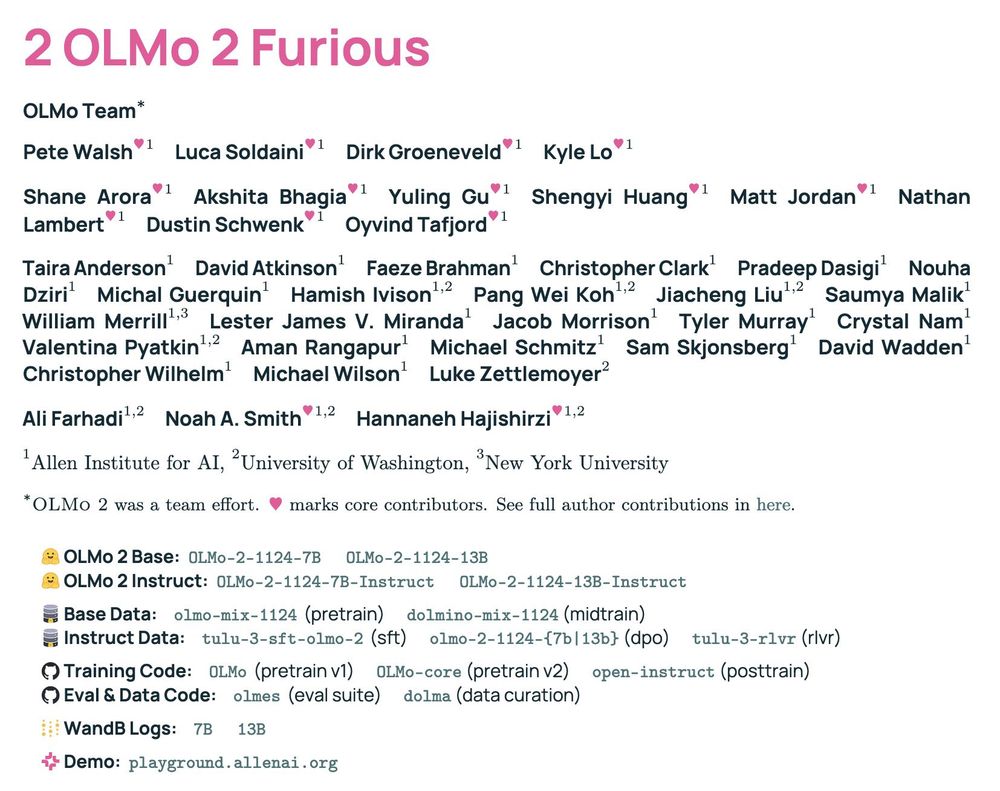

We applied Tulu post-training to OLMo 2 as well, so you can get strong model performance AND see what your model was actually trained on.

🚗 2 OLMo 2 Furious 🔥 is everything we learned since OLMo 1, with deep dives into:

🚖 stable pretrain recipe

🚔 lr anneal 🤝 data curricula 🤝 soups

🚘 tulu post-train recipe

🚜 compute infra setup

👇🧵

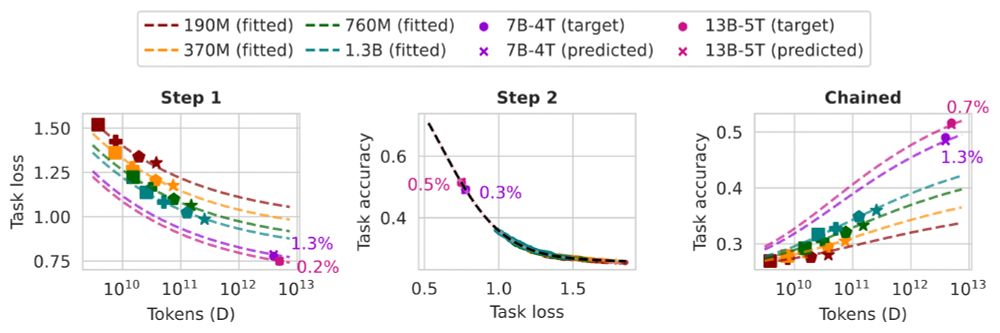

We develop task scaling laws and model ladders, which predict the accuracy on individual tasks by OLMo 2 7B & 13B models within 2 points of absolute error. The cost is 1% of the compute used to pretrain them.

Using RL to train against labels is a simple idea, but very effective (>10pt gains just using GSM8k train set).

It's implemented for you to use in Open-Instruct 😉: github.com/allenai/open...
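
To make "training against labels" concrete, here is a minimal sketch of a verifiable reward for GSM8K-style problems, assuming the reward is 1 when the last number in the model's completion matches the gold label and 0 otherwise. The function names `extract_final_number` and `verifiable_reward` are hypothetical and chosen for illustration; this is not the Open-Instruct implementation, which may extract and compare answers differently.

```python
import re


def extract_final_number(completion: str):
    """Return the last number found in the completion as a string, or None.

    Assumption: the final number in the text is the model's answer.
    """
    matches = re.findall(r"-?\d[\d,]*(?:\.\d+)?", completion)
    if not matches:
        return None
    # Drop thousands separators (and any trailing comma the regex picked up).
    return matches[-1].replace(",", "")


def verifiable_reward(completion: str, gold_answer: str) -> float:
    """Binary reward: 1.0 if the extracted answer equals the label, else 0.0."""
    predicted = extract_final_number(completion)
    if predicted is None:
        return 0.0
    try:
        matched = float(predicted) == float(gold_answer.replace(",", ""))
    except ValueError:
        # Non-numeric label: treat as no match in this sketch.
        return 0.0
    return 1.0 if matched else 0.0
```

An RL loop would score each sampled completion with a function like this in place of a learned reward model.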

Expanding reinforcement learning with verifiable rewards to more domains, with better answer extraction, is on our near-term roadmap.

https://buff.ly/3V4JEIJ

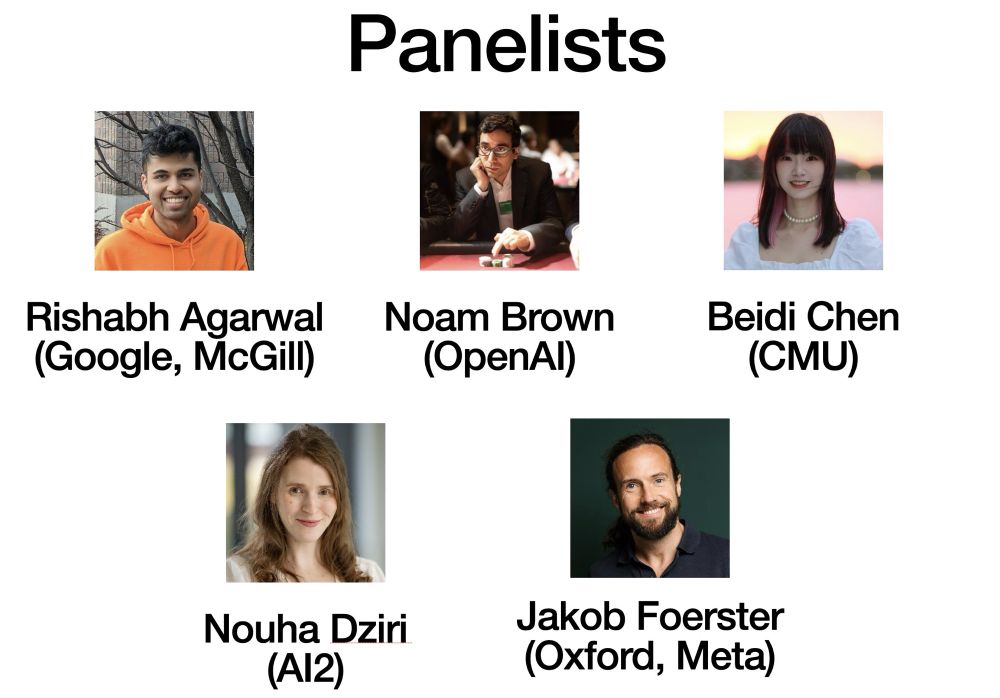

Our website: cmu-l3.github.io/neurips2024-...

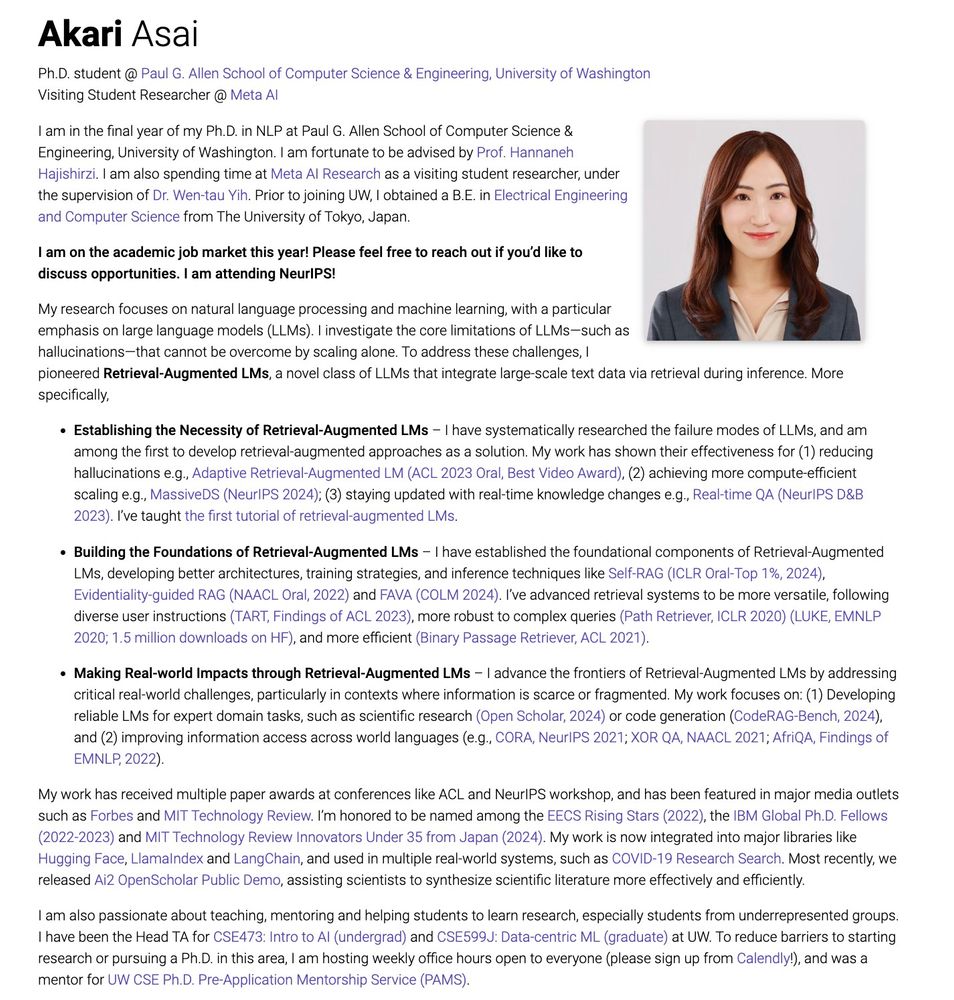

My Ph.D. work focuses on Retrieval-Augmented LMs to create more reliable AI systems 🧵

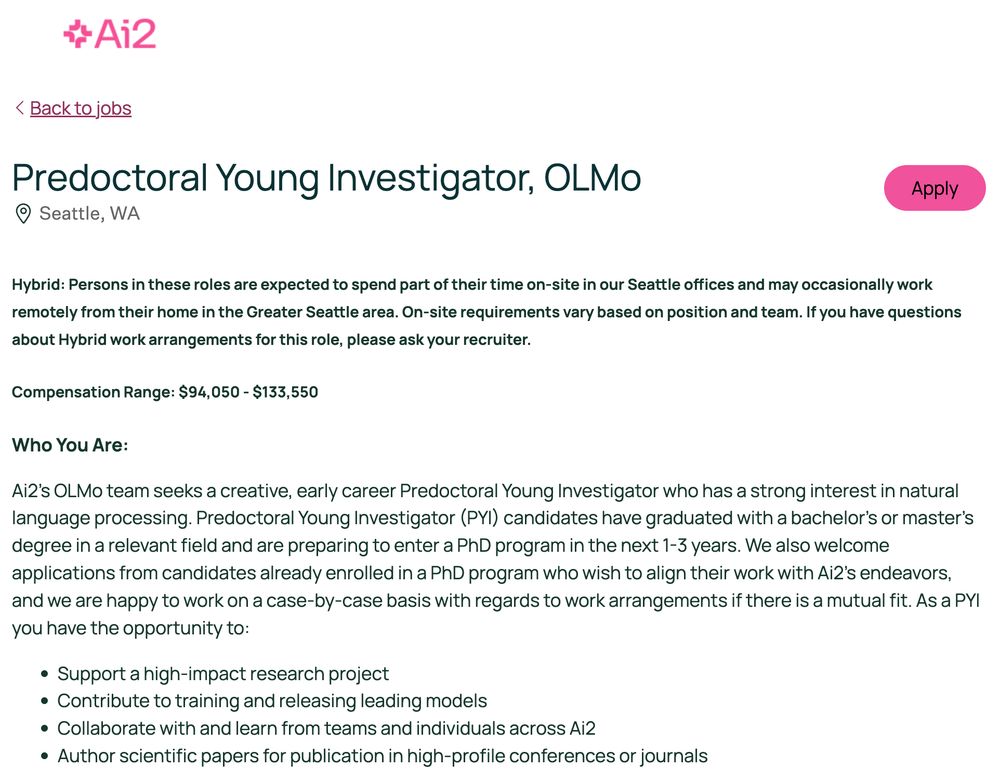

This ends up being people done with BS or MS who want to continue to a PhD soon.

https://buff.ly/49nuggo

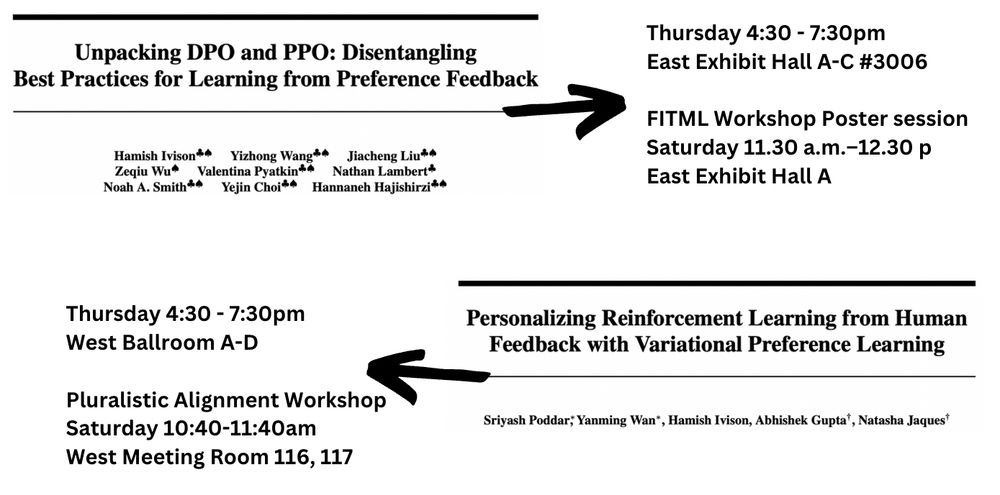

I'll be around all week, with two papers you should go check out (see image or next tweet):
