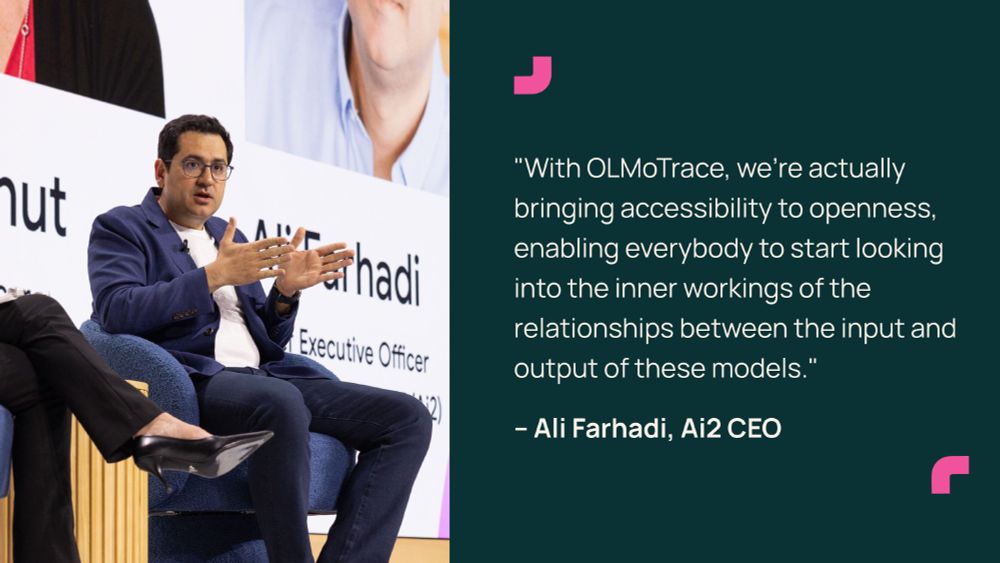

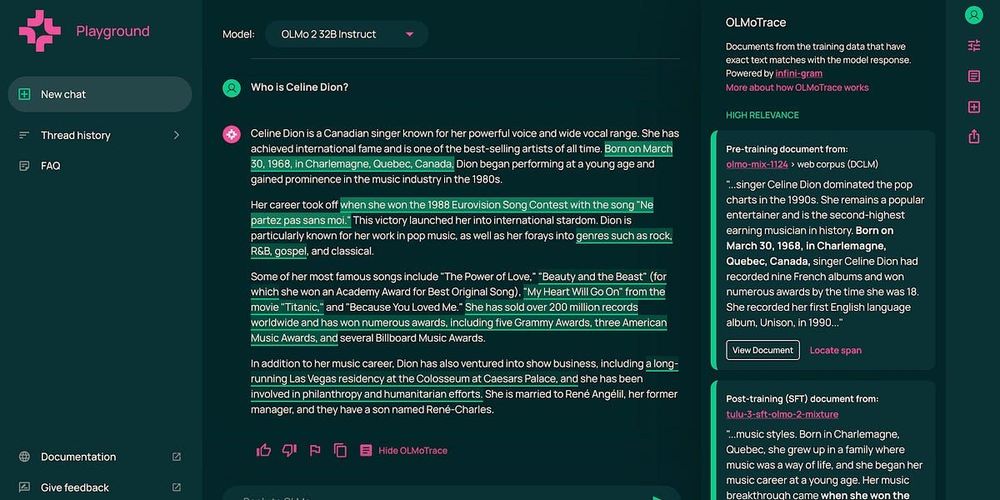

Introducing OLMoTrace, a new feature in the Ai2 Playground that begins to shed some light. 🔦

We do this at unprecedented scale and in real time: finding matching text between model outputs and 4 trillion training tokens within seconds. ✨
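To make "finding matching text" concrete, here is a toy sketch of the idea: mark the spans of a model output that appear verbatim in a small list of documents. It is a naive scan for illustration only, not how OLMoTrace works; the function names, whitespace tokenization, and `min_len` threshold are all assumptions. At 4 trillion tokens a real system would need a prebuilt index rather than a scan like this.

```python
from typing import List, Tuple


def tokenize(text: str) -> List[str]:
    # Assumption: lowercase whitespace tokens stand in for real model tokens.
    return text.lower().split()


def contains(doc: List[str], span: List[str]) -> bool:
    # True if `span` occurs as a contiguous run of tokens inside `doc`.
    n = len(span)
    return any(doc[i:i + n] == span for i in range(len(doc) - n + 1))


def verbatim_spans(output: str, corpus: List[str], min_len: int = 3) -> List[Tuple[int, int]]:
    """Return non-overlapping (start, end) token spans of `output` found verbatim in `corpus`."""
    out = tokenize(output)
    docs = [tokenize(d) for d in corpus]
    spans = []
    i = 0
    while i < len(out):
        j = i
        # Greedily extend the span while it still occurs in at least one document.
        while j < len(out) and any(contains(d, out[i:j + 1]) for d in docs):
            j += 1
        if j - i >= min_len:
            spans.append((i, j))  # end index is exclusive
            i = j
        else:
            i += 1
    return spans


corpus = ["a quick brown fox jumps high", "over a lazy dog sat"]
spans = verbatim_spans("the quick brown fox jumps over a lazy dog", corpus)
```

Each returned pair is a half-open token range in the output, so `out[start:end]` recovers the matched text.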

Ask us anything about OLMo, our family of fully-open language models. Our researchers will be on hand to answer your questions Thursday, May 8 at 8am PT.

We’re excited to host an AMA to answer your Qs about OLMo, our family of open language models.

🗓️ When: May 8, 8-10 am PT

🌐 Where: r/huggingface

🧠 Why: Gain insights from our expert researchers

Chat soon!

Some musings:

📍 Find us at the Vertex AI Model Garden inside the Google Cloud Showcase - try out OLMoTrace, and take a step inside our fully open AI ecosystem.

“Many were wary of using AI models unless they had full transparency into models’ training data and could customize the models completely. Ai2’s models allow that.”

1. Infini-gram is now open-source under Apache 2.0!

2. We indexed the training data of OLMo 2 models. Now you can search in the training data of these strong, fully-open LLMs.

🧵 (1/4)
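For a feel of what searching an indexed corpus can look like, here is a toy sketch that counts n-gram occurrences with a suffix array, a standard index for exact-match lookups. This is an illustration under stated assumptions, not the infini-gram API: `build_suffix_array` and `count_ngram` are hypothetical names, and the sort-based construction only suits tiny inputs.

```python
from typing import List


def build_suffix_array(tokens: List[str]) -> List[int]:
    # Start positions of all suffixes, sorted by the suffix they begin.
    # Naive construction for illustration; fine only for small inputs.
    return sorted(range(len(tokens)), key=lambda i: tokens[i:])


def _bound(tokens: List[str], sa: List[int], query: List[str], upper: bool) -> int:
    # Binary search over suffixes, comparing only their first len(query) tokens.
    n = len(query)
    lo, hi = 0, len(sa)
    while lo < hi:
        mid = (lo + hi) // 2
        prefix = tokens[sa[mid]:sa[mid] + n]
        if prefix < query or (upper and prefix == query):
            lo = mid + 1
        else:
            hi = mid
    return lo


def count_ngram(tokens: List[str], sa: List[int], query: List[str]) -> int:
    # Suffixes starting with `query` form one contiguous block in the sorted order.
    return _bound(tokens, sa, query, upper=True) - _bound(tokens, sa, query, upper=False)


tokens = "to be or not to be that is the question to be".split()
sa = build_suffix_array(tokens)
to_be = count_ngram(tokens, sa, ["to", "be"])
```

Once the array is built, each query costs a logarithmic number of comparisons in the corpus length, which is why this kind of index suits repeated searches over a fixed dataset.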

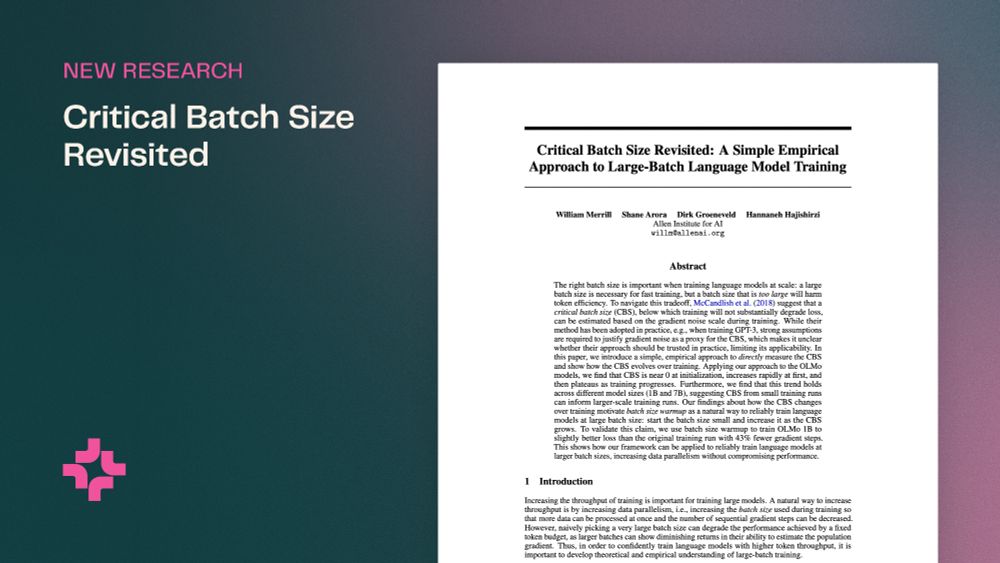

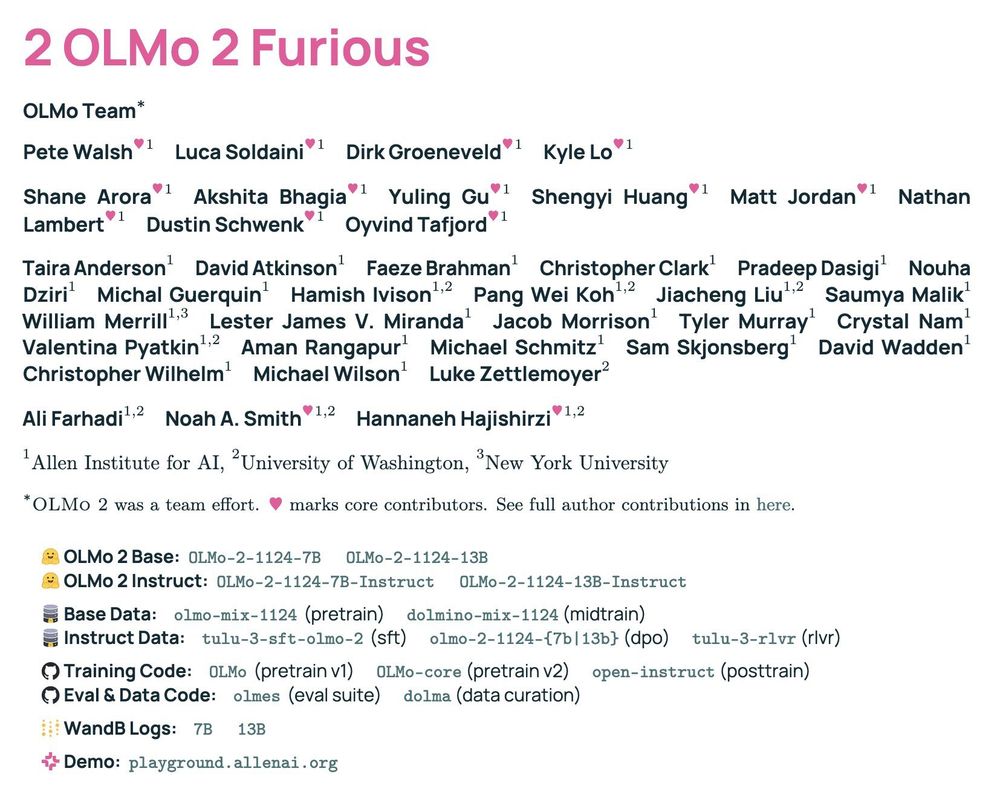

🚗 2 OLMo 2 Furious 🔥 is everything we learned since OLMo 1, with deep dives into:

🚖 stable pretrain recipe

🚔 lr anneal 🤝 data curricula 🤝 soups

🚘 tulu post-train recipe

🚜 compute infra setup

👇🧵

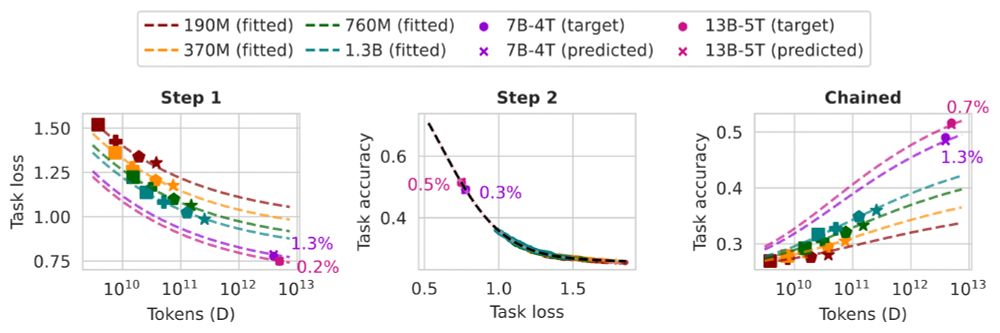

We develop task scaling laws and model ladders, which predict the accuracy on individual tasks by OLMo 2 7B & 13B models within 2 points of absolute error. The cost is 1% of the compute used to pretrain them.
