Writes http://interconnects.ai

At Ai2 via HuggingFace, Berkeley, and normal places

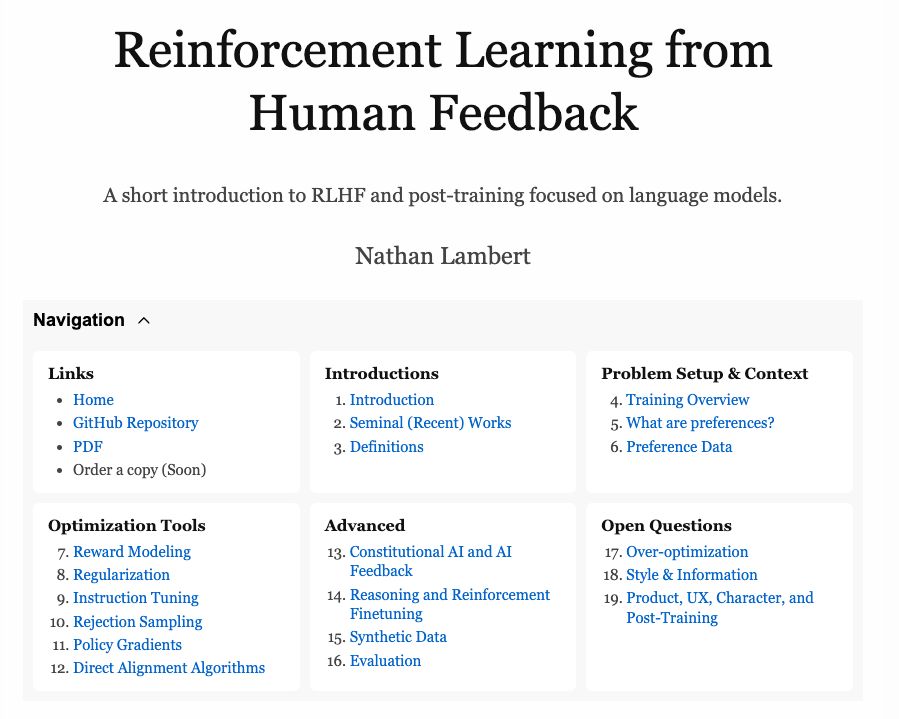

rlhfbook.com

Thanks for reading!

I'm just a few minutes in and think it'll make some of the crazy things I was doing way easier to monitor.

What would be the right way to measure tasks of that scope?

cursor.com/blog/compose...

Claude is still king, but Codex is closer than ever

www.interconnects.ai/p/opus-46-vs...

Overall:

1. Qwen

2. Llama

3. GPT-OSS

Big models:

1. DeepSeek

2. GPT-OSS/Qwen/everyone else

Llama's inertia says a lot about how the ecosystem works.

Sounds worth giving a go. Big changes are good.

I’m very bullish on Nvidia’s open model efforts in 2026.

Interconnects interview #17 on the past, present, and future of the Nemotron project.

www.youtube.com/watch?v=Y3Vb...

Tons of useful "niche" models and anticipation of big releases coming soon.

www.interconnects.ai/p/latest-ope...

On the DGX Spark, finetuning OLMo 2 1B SFT. Built by referencing the original repositories + TRL.

Email me with "CMU Visit" in the subject if you're interested in chatting & why!