Nathan Lambert

@natolambert.bsky.social

A LLN - large language Nathan - (RL, RLHF, society, robotics), athlete, yogi, chef

Writes http://interconnects.ai

At Ai2 via HuggingFace, Berkeley, and normal places

Pinned

Nathan Lambert

@natolambert.bsky.social

· Apr 16

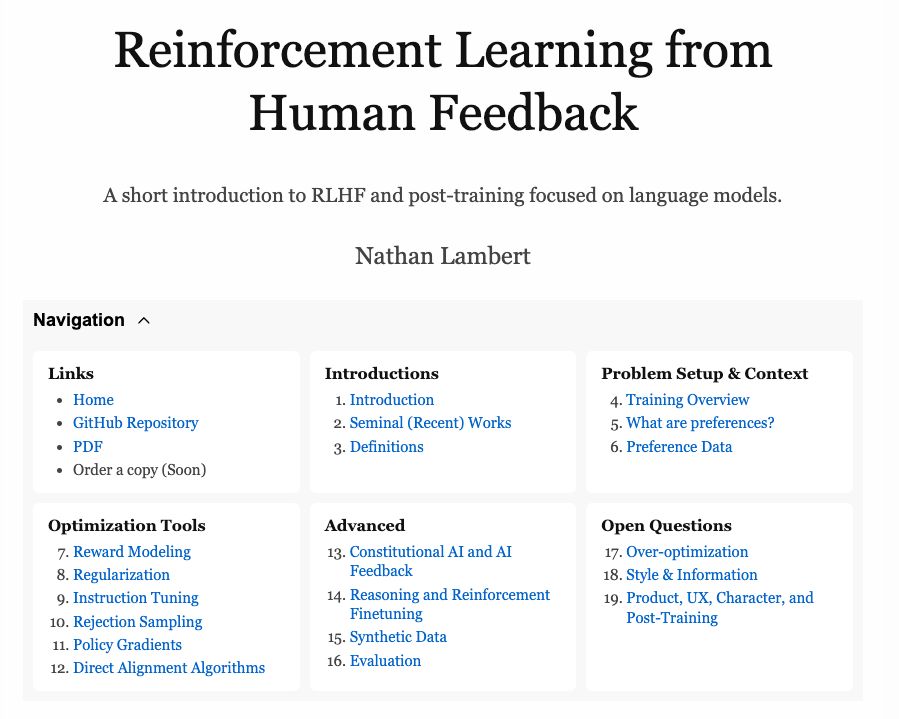

First draft online version of The RLHF Book is DONE. Recently I've been creating the advanced discussion chapters on everything from Constitutional AI to evaluation and character training, but I also sneak in consistent improvements to the RL-specific chapter.

rlhfbook.com

If you're working on character training research, what're you working on? What is limiting your ability to do the research you want here?

Surely there are more people studying how to modify & steer model personality after the GPT-4o sycophancy incident.

November 10, 2025 at 9:57 PM

Opening the black box of character training

Some new research from me!

Exploring how easy it is to craft personalities like sycophantic chatbots, and exploring how this will change as we move from chat to agents.

www.interconnects.ai/p/opening-th...

Opening the character training pipeline

Some new research from me!

www.interconnects.ai

November 10, 2025 at 3:40 PM

The DeepSeek moment underestimated the talent portion and overfocused on training capital, measured in number of GPUs.

The rest of 2025 has been living through that reality with Kimi, GLM, Ant Ling, Meituan... If AI is going to end up in the hands of a few companies, the burden of proof is back on scaling.

November 8, 2025 at 1:05 PM

I appreciate the shoutout from @simonwillison.net

I'm building up a much richer (and more direct) understanding of Chinese AI labs. Excited to share more here soon :)

November 7, 2025 at 6:13 PM

Reposted by Nathan Lambert

The Chinese Kimi K2 Thinking model beats GPT and Claude on some benchmarks. This analysis from @natolambert.bsky.social is a good overview of what is going on: www.interconnects.ai/p/kimi-k2-th...

5 Thoughts on Kimi K2 Thinking

Quick thoughts on another fantastic open model from a rapidly rising Chinese lab.

www.interconnects.ai

November 7, 2025 at 12:07 AM

Thoughts on Kimi K2 Thinking

Congrats to the Moonshot AI team on the awesome open release. For close followers of Chinese AI models, this isn't shocking, but more inflection points are coming. Pressure is building on US labs with more expensive models.

www.interconnects.ai/p/kimi-k2-th...

November 6, 2025 at 6:53 PM

The Great Lock In

November 6, 2025 at 1:07 AM

We're starting to hire for our 2026 Olmo interns! We're looking for excellent students to do research that helps build our best models (primarily students enrolled in a Ph.D. program with experience or interest in any area of the language modeling pipeline).

job-boards.greenhouse.io/thealleninst...

November 5, 2025 at 11:27 PM

The PyTorch recording of my Open Models Recap talk is out. I think this is a great and very timely talk; I'm very happy with it and recommend watching it more than I'd recommend my usual content.

(Thanks again to the PyTorch team -- great event)

youtu.be/WfwtvzouZGA

Recapping Open Models in 2025

Hello! Excited to share a re-upload of a talk that I think is excellent. Thanks to the PyTorch Conference for inviting me and letting me share the talk.

2025 has represented an inflection point year…

youtu.be

November 5, 2025 at 4:11 PM

OlmoEarth is a great example of how Ai2's heavy investment in core modeling capabilities can have positive second-order effects in scientific domains.

It is a multimodal, spatio-temporal model built on a fork of the same pretraining codebase we use for the text Olmos.

Introducing OlmoEarth 🌍, state-of-the-art AI foundation models paired with ready-to-use open infrastructure to turn Earth data into clear, up-to-date insights within hours—not years.

November 4, 2025 at 5:24 PM

The first research on the fundamentals of character training -- i.e. applying modern post-training techniques to ingrain specific character traits into models.

All models, datasets, code etc released.

Really excited about this project! Sharan, the lead student author, was a joy to work with.

November 4, 2025 at 4:51 PM

Interesting chart: service-based sectors are using AI more. Even though, e.g., the US has far less trust or optimism in AI than a place like China, this could be a resounding advantage in willingness to fund the endeavor as it gets even more expensive over the next couple of years.

November 4, 2025 at 2:54 AM

There have been fewer model releases from the leading labs this fall than I'd expect, with all the "low-hanging fruit from RL" and all.

November 3, 2025 at 6:45 PM

refreshing wrap to the weekend

November 3, 2025 at 2:07 AM

Spent a few hours this morning just fine-tuning the language in the RLHF book's RL material based on great feedback from people online. Being able to get this feedback for free is incredible, and I appreciate it so much.

November 2, 2025 at 8:18 PM

It's pretty funny that the world's best language models are far better at the intricate details of RL algorithms than they are at giving not-that-complex medical advice for pet illnesses.

November 2, 2025 at 5:23 PM

Only GPT-5 Pro has been good at doing my complex Google Sheets contortions for wedding planning. Google Sheets / Excel no longer has a learning curve. I'm going to be unstoppable.

November 1, 2025 at 11:15 PM

Arxiv published an enforcement change where position papers & surveys need to be accepted to conferences before they can be uploaded to Arxiv.

This is the wrong decision. What Arxiv is in principle versus what it is in practice is very different.

open.substack.com/pub/natolamb...

Contra Arxiv Moderation

Opposing a recent Arxiv change.

open.substack.com

November 1, 2025 at 4:17 PM

Today I finished my 31st trip around the sun and celebrated with 2 hours of puppy snuggles before embracing the day.

October 30, 2025 at 3:06 PM

I'm a total sucker for nice RL training scaling plots.

They're very neglected vis-a-vis the much easier inference-time scaling plots.

October 29, 2025 at 5:30 PM

Cursor announced some new coding models. I'd put money on this being a finetune of one of the large, Chinese MoE models.

Excited to see more companies able to train models that suit their needs. It bodes very well for the ecosystem that specific data is stronger than a bigger, general model.

October 29, 2025 at 5:22 PM

Most people working at the cutting edge of AI seem to have no long-term plan for their unsustainable work habits.

October 25, 2025 at 5:54 PM

Reposted by Nathan Lambert

Great piece by @natolambert.bsky.social on the current state of human exhaustion in the AI world. Makes this important point:

October 25, 2025 at 3:05 PM
