https://poldrack.github.io/

I'm just getting started on mobilizing scientists nationwide. I'm going to need all the support I can get. Can you help spread the word? Let's talk about how to do that... secure.actblue.com/donate/scien...

russpoldrack.substack.com/p/metadata-d... the latest in my Better Code, Better Science series.

By @lyrebard.bsky.social

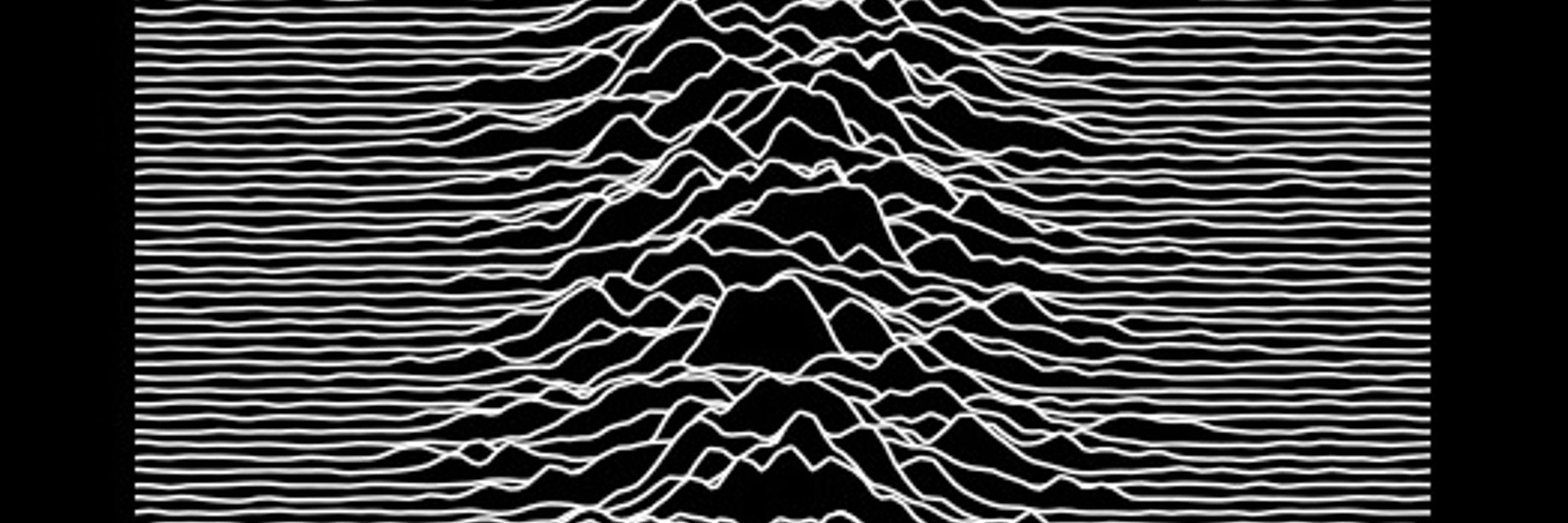

#neuroskyence

www.thetransmitter.org/brain-imagin...

Our new preprint tackles exactly that! 🧠✨ doi.org/10.64898/202...

Thread below 🧵
