currently doing PhD @uwcse,

prev @usyd @ai2

🇦🇺🇨🇦🇬🇧

ivison.id.au

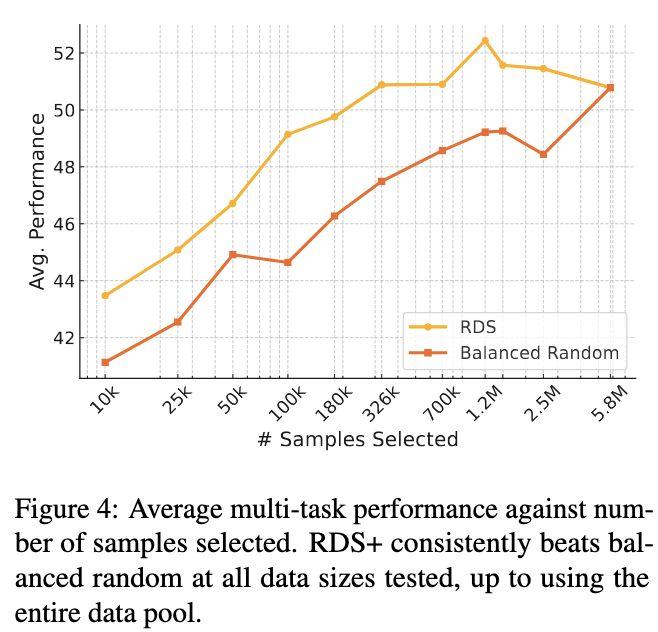

We select 10k samples from a downsampled pool of 200k samples, and then test selecting 10k samples from all 5.8M samples. Surprisingly, many methods drop in performance when the pool size increases!

Turns out, when you look at large, varied data pools, lots of recent methods lag behind simple baselines, and a simple embedding-based method (RDS) does best!

More below ⬇️ (1/8)
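
For intuition, here's a minimal sketch of embedding-based selection in the spirit of RDS. It assumes you already have one embedding per pool sample and per target-task query (how those embeddings are computed is left out). It scores candidates by cosine similarity and picks round-robin across queries so no single query dominates. The function name `select_by_embedding` and the round-robin aggregation are illustrative assumptions, not the paper's exact implementation.

```python
from typing import List

import numpy as np


def l2_normalize(x: np.ndarray) -> np.ndarray:
    """Scale each row to unit length so dot products become cosine similarities."""
    norms = np.linalg.norm(x, axis=-1, keepdims=True)
    return x / np.maximum(norms, 1e-12)  # guard against zero vectors


def select_by_embedding(pool_emb: np.ndarray, query_emb: np.ndarray, k: int) -> List[int]:
    """Hypothetical helper: pick k pool indices, round-robin over queries,
    each query taking its most similar not-yet-selected pool sample."""
    sims = l2_normalize(query_emb) @ l2_normalize(pool_emb).T  # (num_queries, pool_size)
    rankings = np.argsort(-sims, axis=1)  # per-query pool indices, most similar first
    pointers = [0] * len(rankings)        # next rank position to try for each query
    selected: List[int] = []
    seen = set()
    while len(selected) < k and len(seen) < pool_emb.shape[0]:
        for q, ranking in enumerate(rankings):
            # Skip pool samples already chosen by another query.
            while pointers[q] < len(ranking) and int(ranking[pointers[q]]) in seen:
                pointers[q] += 1
            if pointers[q] < len(ranking):
                idx = int(ranking[pointers[q]])
                seen.add(idx)
                selected.append(idx)
                if len(selected) == k:
                    break
    return selected


if __name__ == "__main__":
    pool = np.array([[1.0, 0.0], [0.0, 1.0], [0.9, 0.1], [-1.0, 0.0]])
    queries = np.array([[1.0, 0.0], [0.0, 1.0]])
    print(select_by_embedding(pool, queries, 3))  # [0, 1, 2]
```

The point of the round-robin step is that with a big, varied pool, one easy-to-match query could otherwise eat the whole budget; swapping in a plain "mean similarity over queries" ranking is an equally simple alternative.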

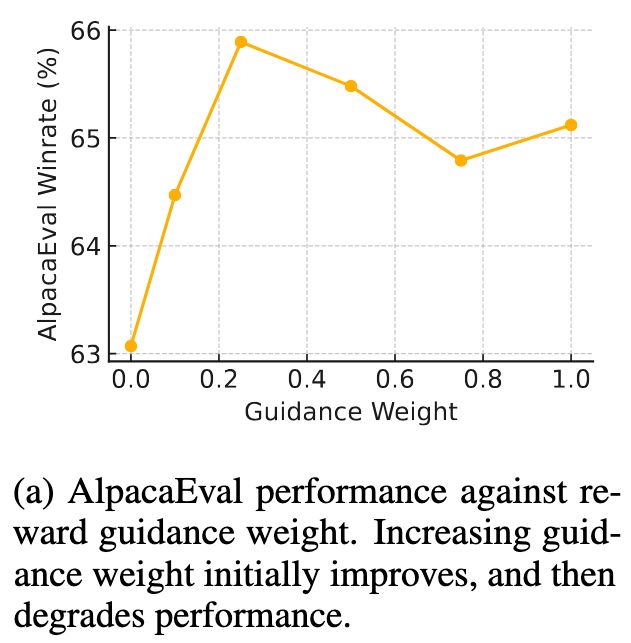

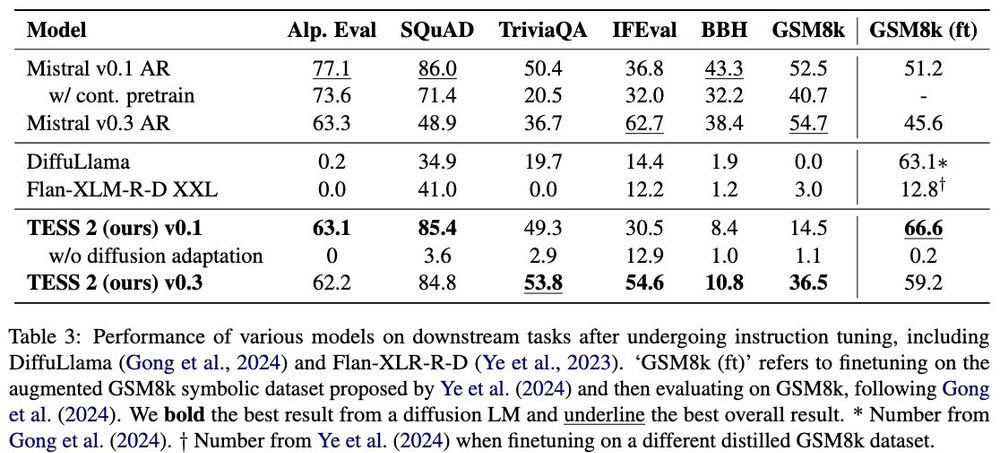

It may be that instruction-tuning mixtures need to be adjusted for diffusion models (we just used Tulu 2/3 off the shelf).

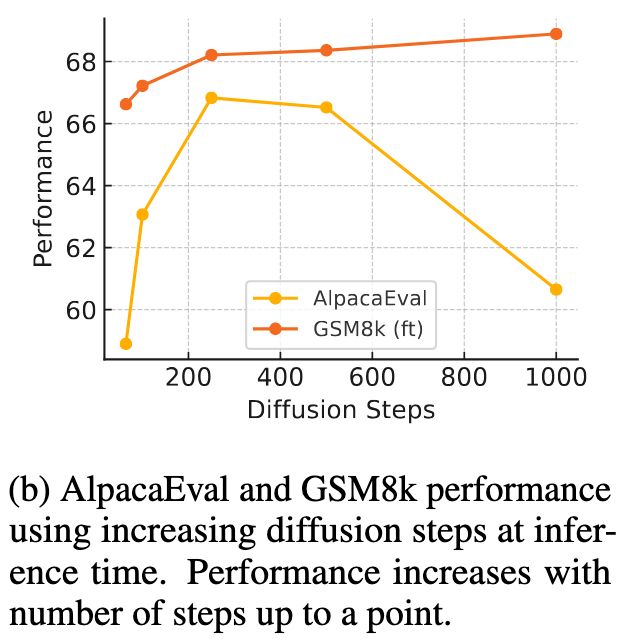

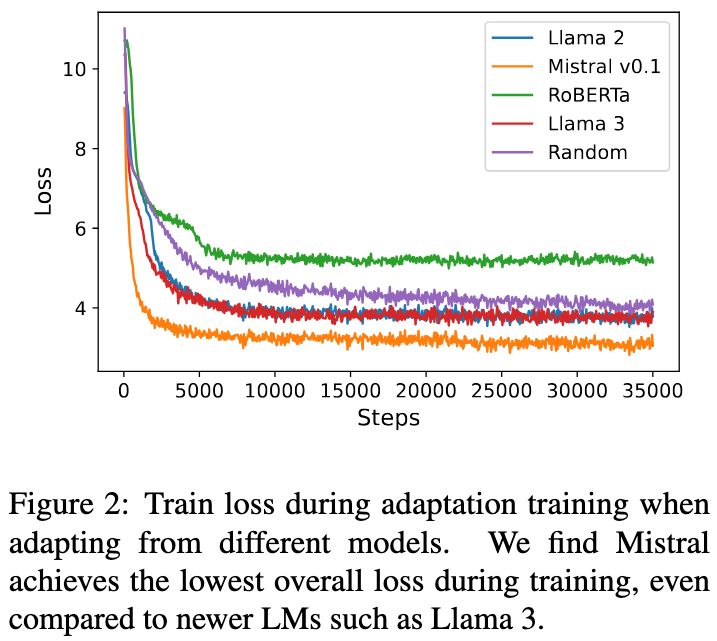

TESS 2 is an instruction-tuned diffusion LM that can perform close to AR counterparts for general QA tasks, trained by adapting from an existing pretrained AR model.

📜 Paper: arxiv.org/abs/2502.13917

🤖 Demo: huggingface.co/spaces/hamis...

More below ⬇️

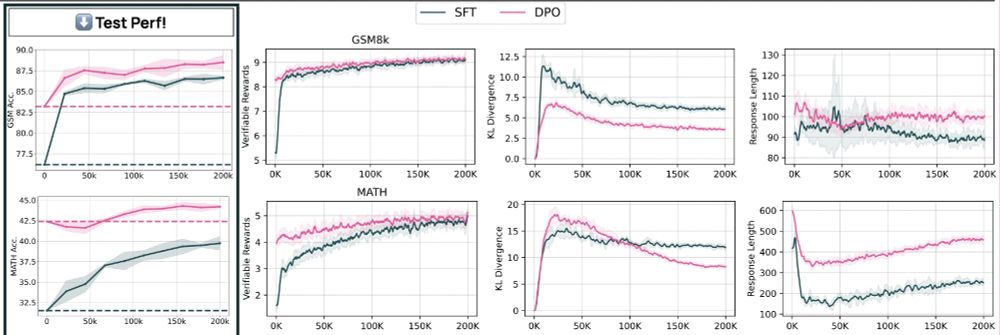

(multiply y-axis by 10 to get MATH test perf)

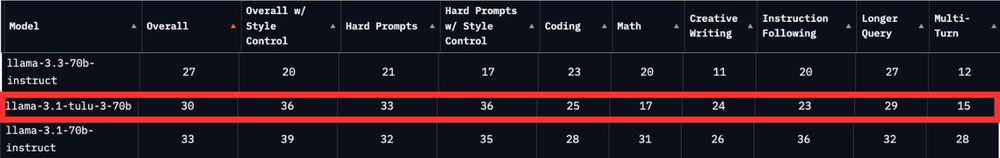

Scaling up the Tulu recipe to 405B works pretty well! We mainly see this as confirmation that open-instruct scales to large-scale training -- more exciting and ambitious things to come!

Particularly happy it is top 20 for Math and Multi-turn prompts :)

All the details and data on how to train a model this good are right here: arxiv.org/abs/2411.15124

Using RL to train against labels is a simple idea, but very effective (>10pt gains just using GSM8k train set).

It's implemented for you to use in Open-Instruct 😉: github.com/allenai/open...
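
Here's a minimal sketch of what "training against labels" can look like on the reward side, assuming a binary reward that checks whether the model's final number matches the GSM8k reference answer. The helper names (`extract_final_number`, `gsm8k_label`, `label_reward`) are hypothetical and this is not Open-Instruct's actual implementation; the RL algorithm that consumes the reward is left out.

```python
import re
from typing import Optional

# Matches numbers like "72", "-3", "1,234" or "2.5".
NUMBER_RE = re.compile(r"-?\d[\d,]*(?:\.\d+)?")


def extract_final_number(text: str) -> Optional[float]:
    """Return the last number mentioned in `text`, or None if there isn't one."""
    matches = NUMBER_RE.findall(text)
    if not matches:
        return None
    return float(matches[-1].replace(",", ""))


def gsm8k_label(answer_field: str) -> float:
    """GSM8k reference solutions end with '#### <answer>'; parse that final answer."""
    return float(answer_field.split("####")[-1].strip().replace(",", ""))


def label_reward(completion: str, answer_field: str) -> float:
    """Binary reward: 1.0 if the completion's final number matches the label, else 0.0."""
    pred = extract_final_number(completion)
    if pred is None:
        return 0.0
    return 1.0 if pred == gsm8k_label(answer_field) else 0.0


if __name__ == "__main__":
    print(label_reward("48 + 24 = 72, so the answer is 72.", "#### 72"))  # 1.0
    print(label_reward("The answer is 70.", "#### 72"))  # 0.0
```

In an RL loop, each sampled completion would be scored this way against its GSM8k label. Exact match on the last number is a simplifying assumption; real setups usually need more careful answer extraction.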

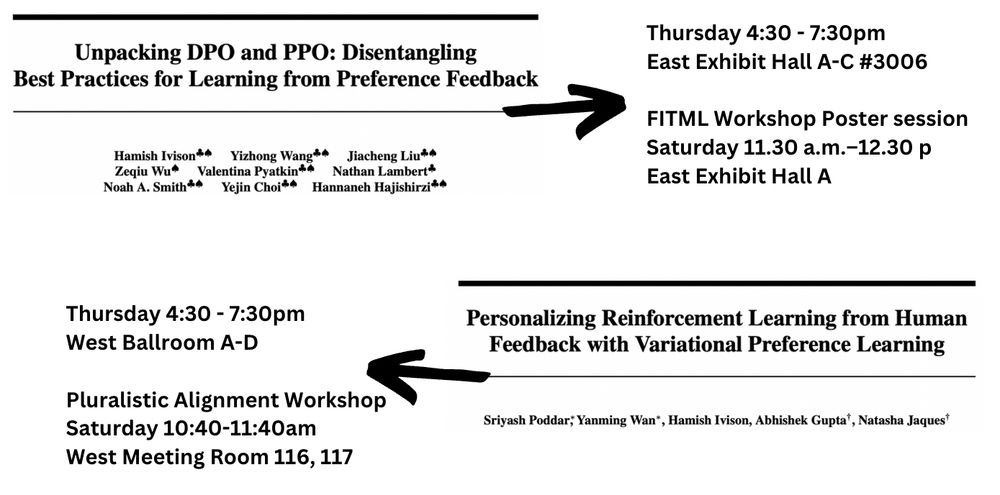

I'll be around all week, with two papers you should go check out (see image or next tweet):
