Our new paper "Modeling Others' Minds as Code" shows this outperforms behavior cloning (BC) by 2x and reaches human-level performance in predicting human behavior.

Our new paper shows AI which models others’ minds as Python code 💻 can quickly and accurately predict human behavior!

shorturl.at/siUYI%F0%9F%...

"before I got married I had six theories about raising children, now I have six kids and no theories"......but here's another theory #cogsci2025

📜 arxiv.org/abs/2504.03206

🌎 sites.google.com/cs.washingto...

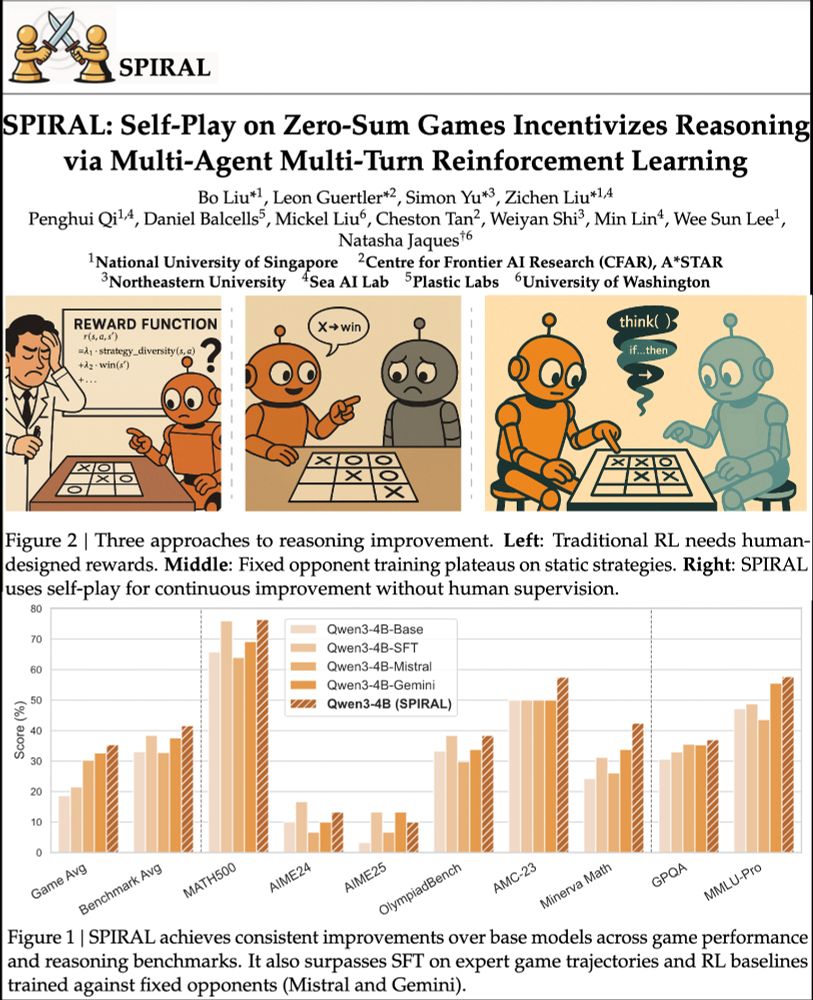

SPIRAL: models learn via self-competition. Kuhn Poker → +8.7% math, +18.1 Minerva Math! 🃏

Paper: huggingface.co/papers/2506....

Code: github.com/spiral-rl/spiral

Instead, we use online adversarial training to achieve theoretical safety guarantees and substantial empirical safety improvements over RLHF, without sacrificing capabilities.

🌟We propose 𝗼𝗻𝗹𝗶𝗻𝗲 𝐦𝐮𝐥𝐭𝐢-𝐚𝐠𝐞𝐧𝐭 𝗥𝗟 𝘁𝗿𝗮𝗶𝗻𝗶𝗻𝗴 where Attacker & Defender self-play to co-evolve, finding diverse attacks and improving safety by up to 72% vs. RLHF 🧵

Can't thank my collaborators enough: @cogscikid.bsky.social y.social @liangyanchenggg @simon-du.bsky.social @maxkw.bsky.social @natashajaques.bsky.social

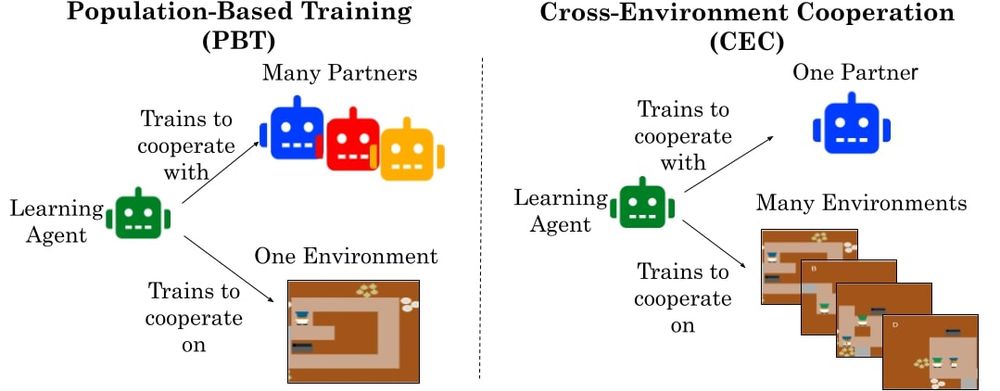

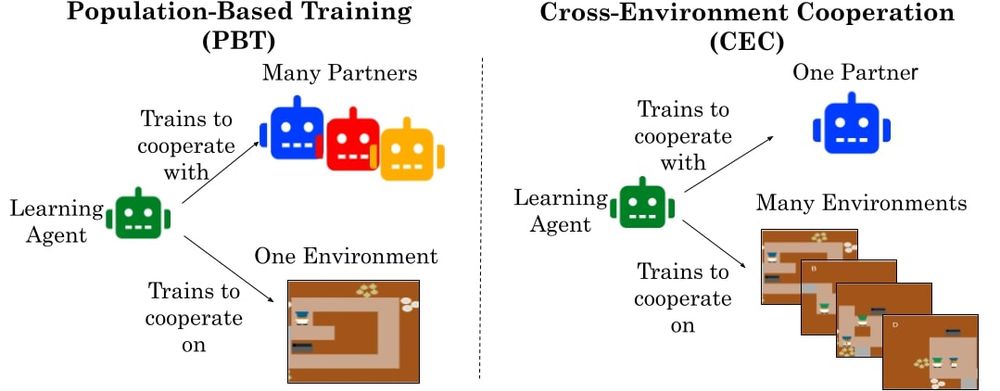

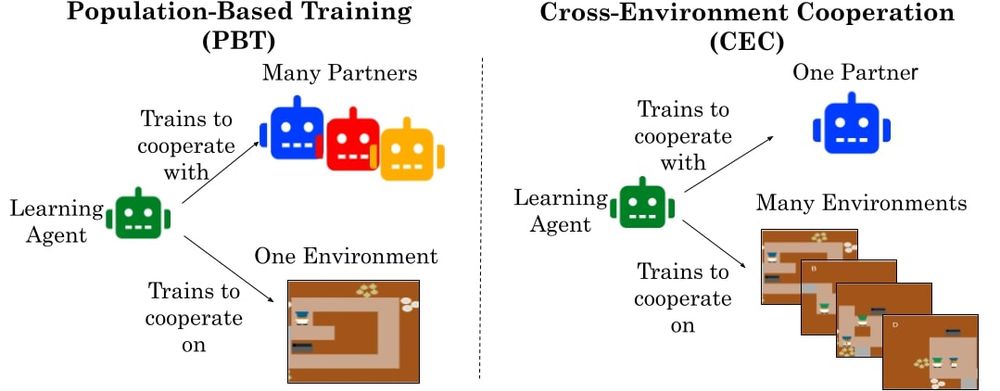

Agents trained in self-play across many environments learn cooperative norms that transfer to humans on novel tasks.

shorturl.at/fqsNN%F0%9F%...

Full schedule: sites.google.com/view/rldm202...

Instead, we find that training on billions of procedurally generated tasks teaches agents general cooperative norms that transfer to humans, such as avoiding collisions.

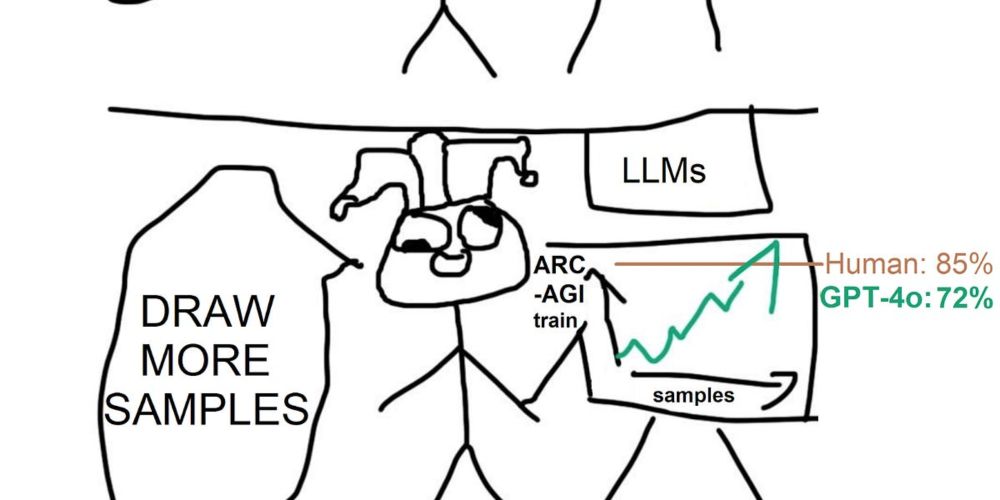

o3 is probably a more principled search technique...

Come by the IMOL workshop to check it out and chat more!
