Website: adhirajghosh.github.io

Twitter: https://x.com/adhiraj_ghosh98

Special thanks to everyone at @bethgelab.bsky.social, Bo Li, Yujie Lu and Palzer Lama for all your help!

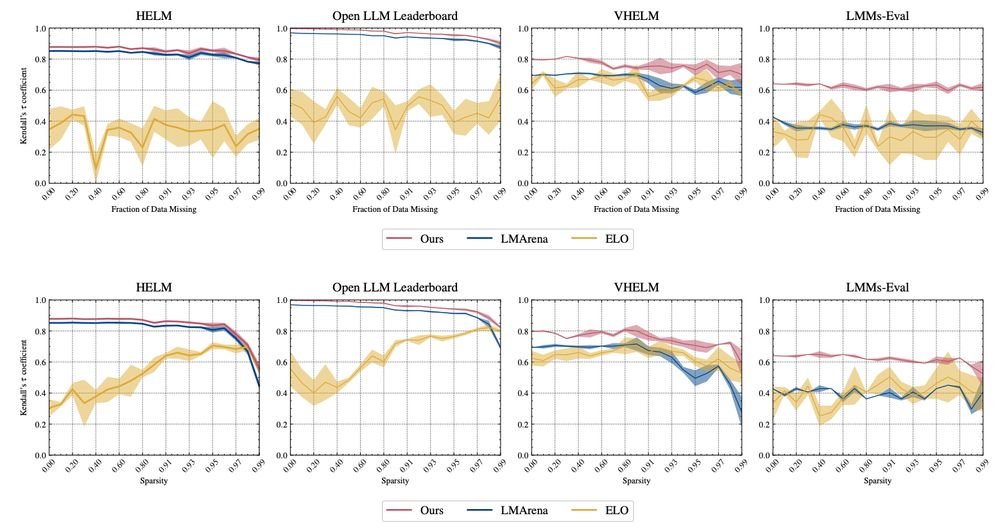

More insights like these in the paper!