In our new preprint, we show that generative video models can solve a wide range of tasks across the entire vision stack without being explicitly trained for them.

🌐 video-zero-shot.github.io

1/n

We introduce Mentis Oculi, a benchmark for machine mental imagery: multi-step visual puzzles that require maintaining and updating visual states over time.

📄 arxiv.org/abs/2602.02465

🌐 jana-z.github.io/mentis-oculi/

🧵⬇️

Turns out: currently not very. Visualizations have small errors that compound in multi-step problems, and models often ignore correct visual aids in their decision-making.

👉 Apply now: forms.gle/XmLkwEDD45fY...

🕦 Poster Session 1 | 11:30–13:30

📍 Poster #88

Come by if you're into audio-visual learning and want to know whether multiple modalities actually help or hurt.

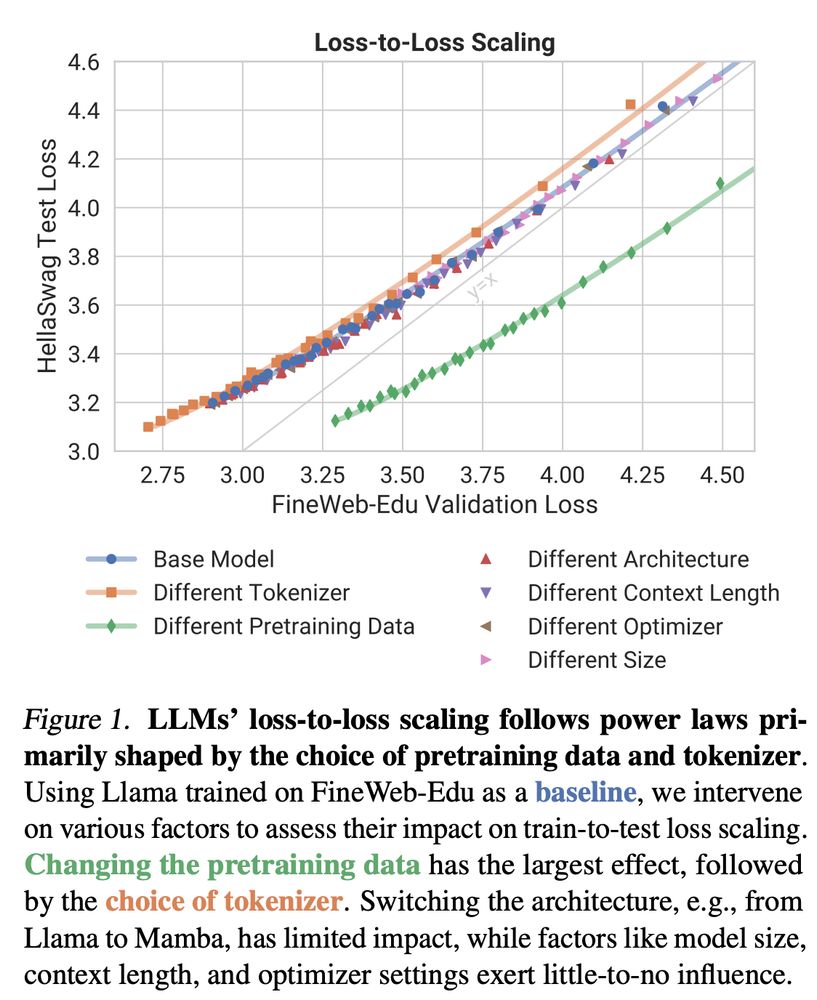

How does LLM training loss translate to downstream performance?

We show that pretraining data and tokenizer shape loss-to-loss scaling, while architecture and other factors play a surprisingly minor role!

brendel-group.github.io/llm-line/ 🧵1/8

As always, it was super fun working on this with @prasannamayil.bsky.social

Since DeepSeek-R1 introduced reasoning-based RL, datasets like Open-R1 & OpenThoughts have emerged for fine-tuning & GRPO. Our deep dive found major flaws: data curation had to eliminate 25% of OpenThoughts.

Here's why 👇🧵

With support from the Hector Foundation, we’re building cutting-edge, open-source AI tutoring models for high-quality, adaptive learning for all pupils.

👉 forms.gle/sxvXbJhZSccr...
