do you know the difference between generative AI and AI for enemy movement? do you have information that suggests they used LLMs or generative AI for enemy movement?

November 10, 2025 at 5:05 PM

I don't consider it to be, but that's the problem. There's no real functional definition of AI anymore, as it's become nothing more than a techbro buzzword. LLMs are built with ML which in itself is a huge array of techniques which could fall under AI. LLMs could be called AI but all AI is not LLMs.

November 9, 2025 at 9:00 PM

nope

society needs banks.

but even if society needs LLMs (it doesn’t), it doesn’t need any specific “ai” company

there are lots of alternative options (which again, society does not need)

my hot AI take is that regardless of how we feel about it, bailing out the big AI companies may be *necessary* to avoid long-term systemic harm to real people

as always, the system acts too late to contain the blast radius

November 8, 2025 at 7:55 PM

The article doesn't say it, but this cool solar forecasting software uses no large language models, the thing people usually think of when they hear "AI." It uses a fairly simple convolutional neural network that's readily trained on a laptop. No data centers or LLMs involved. Research paper here:

November 9, 2025 at 12:29 PM

And there are many other reasons I don't use LLMs. They're not reliable, they are built on theft, their creation incurs environmental costs & direct harm to people. The broader societal push to use AI for everything is destructive in countless ways & has horrifying political ramifications.

November 8, 2025 at 1:53 PM

It’s also just fundamentally a stupid idea, because LLMs aren’t capable of emulating player behavior and instincts anyway; they’re closer to spellcheck than true AI

November 6, 2025 at 4:09 PM

Anyway, calling any data-driven prediction or decision software "AI" supports that dubious narrative, "accept LLM harms now in exchange for LLMs saving the world later." I get why people brand all sorts of stuff as "AI" now - they want to get in on the hype and money - but I think it's bad practice.

November 9, 2025 at 12:38 PM

First: AI is a misnomer for the entire field (because intelligence is neither defined nor created), but machine learning has a wide array of applications and benefits. I don’t like using the term “AI” for any of it, whether I like it or not. Finally, this is completely unlike LLMs.

November 9, 2025 at 9:34 PM

I very much like AI when it means machine learning, which (as in this case) is doing a great deal of good in many fields.

I even think LLMs have good uses.

I’m just not keen on subcontracting thought to LLMs.

Holy shit. This company claims it has developed an air-source heat pump with a COP of *7* (3-5 is considered good). For you non-nerds, that basically means it produces 7 units of heat for every unit of electricity it consumes. How are they doing it? You're not gonna like this answer, but: it's AI.

Fairland COP7 R290 ATW HeatPump - The Future of AI HeatPump

/PRNewswire/ -- As gentle heat wraps around you, you walk barefoot to bathroom and enjoy an instant hot shower—no waiting, no cold shock. Every corner indoors...

www.prnewswire.co.uk

November 9, 2025 at 9:27 PM

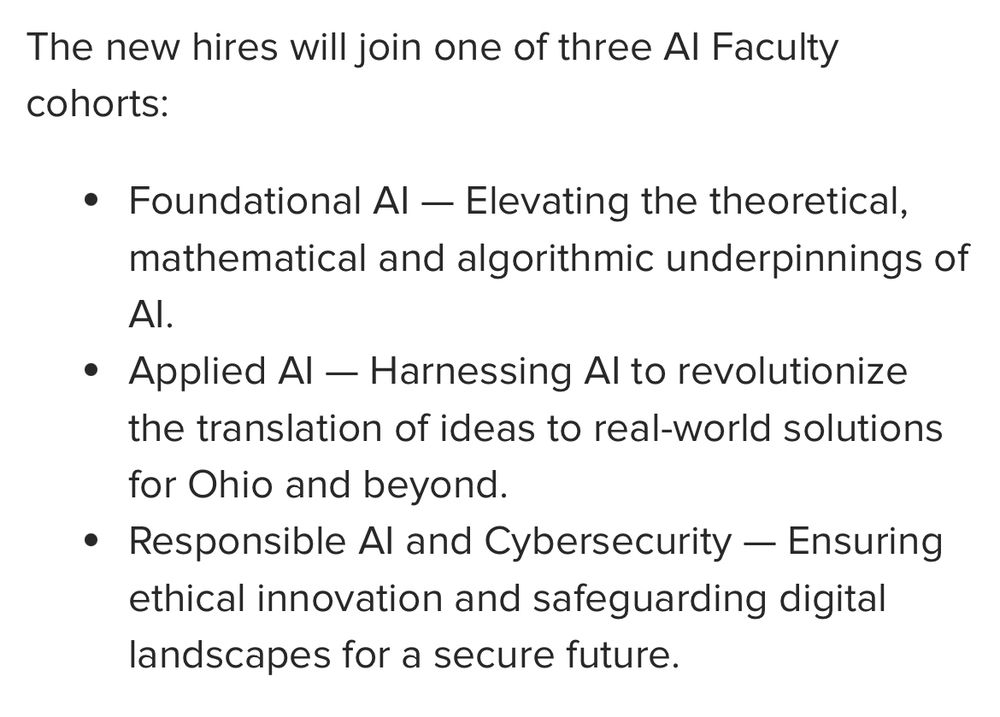

So this is all a bunch of bullshit, especially the “Foundational AI” cohort.

“Foundational AI” and “Foundation Models” are garbage concepts developed to distance LLMs and GenAI from longstanding criticisms by STS scholars who make clear the harms of such technologies.

November 7, 2025 at 11:17 PM

The issue of an eroding boundary between fiction and reality already exists and AI is *dramatically* speeding up that erosion in a multitude of ways. Generative AI and LLMs are deeply destructive technologies that are being weaponized by the distrust economy, trapping their members in states of

4/

November 10, 2025 at 4:50 PM

The results were clear — and surprising.

Even short social media posts written by LLMs are readily distinguishable.

Our BERT-based classifier spots AI with 70–80% accuracy across X, Bluesky, and Reddit.

LLMs are much less human-like than they may seem.

November 7, 2025 at 11:13 AM

As a neuroscientist, I’d suggest there is a profound disconnect between what *some* computer scientists think is representative of “intelligence”, cognitive ability, or descriptions of consciousness from some in AI work.

LLMs are not how neural systems process information, nor how brains function.

November 6, 2025 at 5:19 PM

And a lack of strategy. Every institute is currently hiring some AI specialist who in the end is someone who applied LLMs or large Markov models to their data. This diverts funds from building "true" AI expertise.

It's important to build expertise in use cases, but that's not an AI strategy.

November 11, 2025 at 5:43 AM

asked "if generative AI/LLMs were trained on public domain info, were run entirely locally, and had no power costs, would they still be bad". feels like a trap question, tbh! like, that is a theoretical that does not currently exist, and if it does it is not what is being used in day-to-days.

November 8, 2025 at 10:41 AM

LLMs are not a technology, they are a product produced by a process

no “ai” company must be kept alive even if your goal is to further LLM functionality

November 8, 2025 at 7:59 PM

Just listened to Cory Doctorow talking about the rise of “peer-reviewed journals” SPECIFICALLY designed to publish this kind of garbage, feed it into LLMs, & get it spit out again by engines like Grok, Google AI, ChatGPT, etc.

Another reason AI is dangerous.

November 7, 2025 at 6:20 PM

Getting nervous for the talk I'm about to give at a workshop about "using AI to drive impact" which features slides such as these.

November 6, 2025 at 8:41 PM

It’s fucking generative AI. I am a computer scientist. They are using LLMs for voice production based on existing data. The difference between this and deepfakes for voice is there isn’t one. It is a way to not pay voice actors in the longer term! Ahhhhhhh!

November 10, 2025 at 6:34 PM

That’s exactly how AI bros and their companies are selling LLMs in medicine and therapy.

Quite explicitly saying that ‘something is better than nothing’ for the poorest/those with limited access

I’ve also been hearing larger concerns from colleagues in medicine about the potential for a two-tiered healthcare delivery system where rich people get doctors and poor people get chatbots as hospitals try to save money

November 8, 2025 at 12:11 AM

Tech VCs: AI and LLMs will create universal high income, not just universal basic income.

Also Tech VCs: government shutdowns are good, SNAP is bad, we need to invest more money into AI

November 9, 2025 at 4:00 PM

Microsoft has discovered a side-channel attack (Whisper Leak) on the network communications between AI chatbots and their backend LLMs

www.microsoft.com/en-us/securi...

November 9, 2025 at 2:38 PM

Newspapers, including the Globe and Mail, have policies restricting use of LLMs. These experiments show why those policies are needed: LLMs can't be trusted in writing, research or editing.

I'm not anti-AI. It's worth developing. But the design of LLMs makes them a non-option for truth-based work

November 7, 2025 at 2:23 PM

not once. plagiarism machines stole everything from human creatives, so in no way will I use them and encourage them. I turn off as many AI features as I can when I notice them (or am told about them)

Fuck LLMs that have stolen their information

Hands up if you've never used ChatGPT ✋

(I feel like Dozer and Tank in The Matrix right now - at first I didn't use it because, rather ironically, I'm lazy and stubborn (peak Taurus energy there) - literally no, don't make me use the new thing I don't wanna. Now I'm glad I didn't 😅)

Probably a good way to tell right now if the job you’re applying for is run by absolute dumbfucks is to ask if they’re using AI.

November 9, 2025 at 6:51 PM

Of course they use AI LLMs to generate the image because the words are spelt incorrectly.

November 9, 2025 at 11:48 PM
