Studying the intersection of AI, social media, and politics.

Polarization, misinformation, radicalization, digital platforms, social complexity.

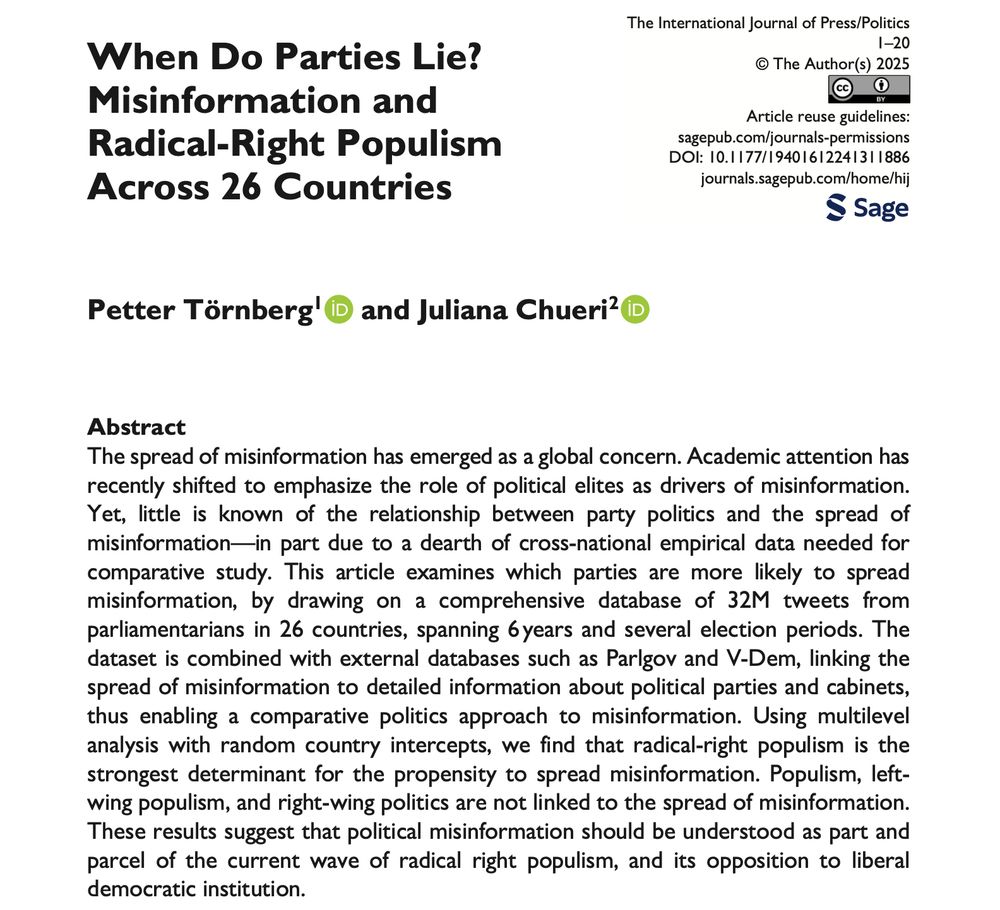

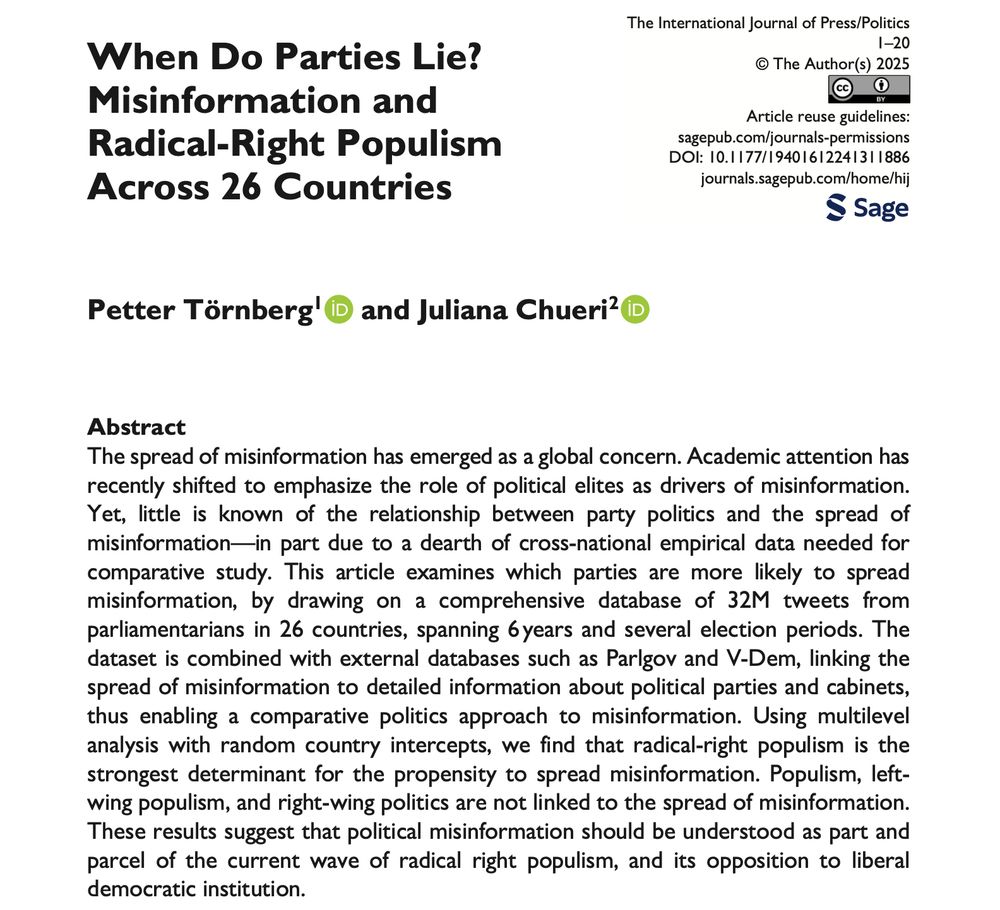

In the first cross-national comparative study, we examine 32M tweets from politicians.

We find that misinformation is not a general condition: it is driven by populist radical right parties.

with @julianachueri.bsky.social

doi.org/10.1177/1940...

www.forbes.com/sites/amirhu...

PhD position in my ERC project: Welfare State Transformation in the Age of Artificial Intelligence

Interested in how AI is reshaping labor markets, social protection, and the politics of redistribution?

Apply here: lnkd.in/eCcAXsaS

Please share widely!

It is shaped by the economy in which AI is forged.

Today, AI is emerging inside technofeudalism: an economy built on lock-in, dependency, and rent extraction.

Our new preprint explores what this means for the coming AI society.

osf.io/preprints/so...

After two decades of platforms reshaping markets, communication, and governance, a new shift is underway: AI is transforming society.

What should we expect from the 'AI society'? 🧵

📄 Our new paper tackles this question:

osf.io/preprints/so...

“What makes this book essential reading is its recognition that digital technology’s democratic potential and authoritarian dangers are not separate phenomena but two faces of the same coin.”

albertoblumenscheincruz.substack.com/p/review-see...

* www.taylorfrancis.com/reader/downl...

Bottom line: using LLMs to "simulate humans" sits in a no-man’s-land between theory and empirics—too opaque to function as a model, too ungrounded to count as evidence.

Validation remains the core challenge.

link.springer.com/article/10.1...

🗓 10 Dec | Amsterdam

A conversation on platforms, politics, power, and digital modernity, followed by drinks.

Hope to see you there!

👉 Details & RSVP:

globaldigitalcultures.uva.nl/content/even...

Altmetric is... well, Altmetric. But still, kind of surreal.

What comes after the traditional model of social media ends?

1) Algorithmic broadcasting platforms (everything turning into TikTok and Instagram Reels)

2) Private and semi-private spheres (like group chats)

3) Chatbots and LLMs as new media

But... can they? We don’t actually know.

In our new study, we develop a Computational Turing Test.

And our findings are striking:

LLMs may be far less human-like than we think.🧵

We decided to study how it looks.

Using novel multimodal AI methods, we studied 17,848 posts by top climate-denial accounts and uncovered a new front in the misinformation war.

Here's what it means 🧵

www.tandfonline.com/doi/full/10....

Using nationally representative ANES data from 2020 & 2024, I map how the U.S. social media landscape has transformed.

Here are the key takeaways 🧵

arxiv.org/abs/2510.25417

And there are a lot of persuasive papers out there!

What do Facebook, Google & TikTok really see when they look at us — and what do they miss?

My latest interview with @pettertornberg.com explores algorithmic tyranny, digital modernity & the future of power.

👉 snglrty.co/4mDn1Gb

1: @seanmcarroll.bsky.social, below

2: Singularity Weblog: Algorithmic Tyranny, the Rise of Digital Modernity and Seeing Like a Platform www.youtube.com/watch?v=lJrV...

www.preposterousuniverse.com/podcast/2025...

It was great to talk to @flamman.se about the link between weak welfare state protection and the expansion of online platform work in Europe. @pettertornberg.com

This is depressing. Academia is becoming AI slop.

Immediately received a generic AI-generated email from the editor.

The AI future is here!

The aesthetics of climate misinformation: computational multimodal framing analysis with BERTopic and CLIP by Anton Törnberg & Petter Törnberg / @pettertornberg.com

doi.org/10.1080/0964...

In 2020, Twitter use was highest among people who loved Democrats and disliked Republicans.

By 2024, it completely flipped: the more polarized Republican you are, the more you use Twitter/X.

From blue stronghold → red megaphone. All for just $44 billion.

The ANES 2024 data is out — and this thread answers all your burning questions! 🔥

newbooksnetwork.com/seeing-like-...

www.techpolicy.press/seeing-like-...
