Assistant Prof in CSEE @ UMBC.

🏳️‍🌈♿

https://laramartin.net

arxiv.org/abs/2503.11881

#AACL2025 #IJCNLP

Applications are due next Wednesday, 10/15.

we look forward to hearing about the details of your systems at the EMNLP 2025 Wordplay Workshop!

wordplay-workshop.github.io

We are looking for regular papers (4-8 pgs) and extended abstracts about open challenges in the space (2 pgs)

Papers due: August 29

wordplay-workshop.github.io/cfp/

https://go.nature.com/4jYv3IP

1/

Great thread from Sarah, and I have additional thoughts. 🧵

Wikipedia has linked sources and an edit history showing where information came from and who added it when

An LLM just generates text

We received a heads-up from a trusted source that you should proactively download/print/screenshot any documentation on research.gov pertaining to your NSF awards, both those that are current and any that have closed in the last 5-6 years.

1/n

wgntv.com/evanston/nor...

Nationwide, we were:

• Improving the quantity + quality of K-12 CS teachers

• Ensuring the sustainability of K-12 CS teacher prep programs

• Ensuring all youth in the U.S. have access to great CS teachers

They’re canceling all “DEI and misinformation/disinformation” grants.

And the guidance on how to fulfill the longstanding, legally mandated Broadening Participation requirement is utterly incoherent.

www.nsf.gov/updates-on-p...

"With access to infinite resources, these adherents ran reinforcement learning on their companies. They threw billions of dollars' worth of spaghetti at the wall until something stuck."

2. The packages don’t exist. Which would normally cause an error but

3. Nefarious people have made malware under the package names that LLMs make up most often. So

4. Now the LLM code points to malware.
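
One way to guard against this, sketched under assumptions: before installing anything an LLM suggests, list the modules its code imports and flag any that are neither in the standard library nor on a list you have already vetted. The `VETTED_PACKAGES` set and the `unvetted_imports` helper below are hypothetical names made up for illustration, and the check assumes Python 3.10+ for `sys.stdlib_module_names`. Import names do not always match package names on an index (for example, `yaml` comes from PyYAML), so treat a flag as a prompt to go look, not as a verdict.

```python
import ast
import sys

# Hypothetical allowlist: packages you have already checked by hand.
VETTED_PACKAGES = {"numpy", "requests"}


def unvetted_imports(source: str, vetted: set[str] = VETTED_PACKAGES) -> set[str]:
    """Return top-level imported module names that are neither stdlib nor vetted."""
    names = set()
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, ast.Import):
            # "import a.b.c" -> top-level name "a"
            names.update(alias.name.split(".")[0] for alias in node.names)
        elif isinstance(node, ast.ImportFrom) and node.module and node.level == 0:
            # Absolute "from a.b import c" -> "a"; relative imports are skipped
            names.add(node.module.split(".")[0])
    # sys.stdlib_module_names exists on Python 3.10+; fall back to empty otherwise
    stdlib = getattr(sys, "stdlib_module_names", frozenset())
    return {name for name in names if name not in stdlib and name not in vetted}


if __name__ == "__main__":
    # "fastjsonx" is a made-up package name standing in for an LLM invention.
    generated = "import numpy as np\nfrom fastjsonx.utils import load\n"
    print(unvetted_imports(generated))
```

Anything the helper returns still needs a human to look it up on the package index and check who publishes it; the sketch only narrows down which names to check.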

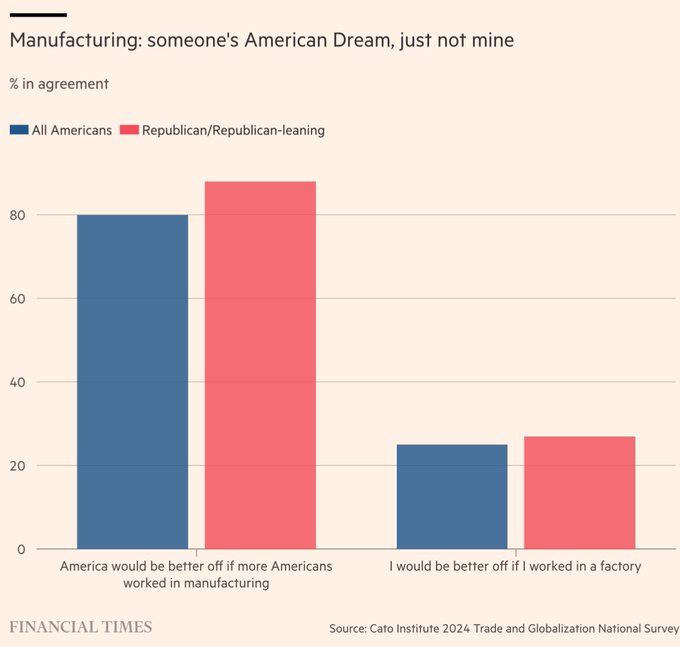

www.ft.com/content/8459...

www.assistiveware.com/aac-app-sale #AutismAcceptanceMonth
