ML & NeuroAI research

How does the complexity of this mapping change across LLM training? How does it relate to the model’s capabilities? 🤔

Announcing our #NeurIPS2025 📄 that dives into this.

🧵below

#AIResearch #MachineLearning #LLM

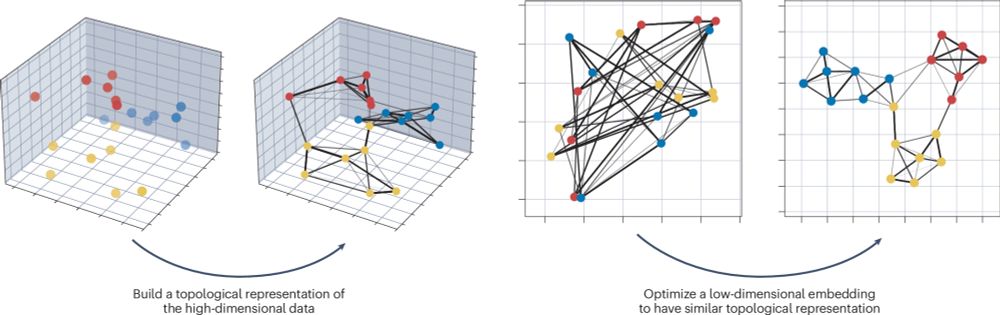

Today I want to share two new works on this topic:

Eliciting higher alignment: arxiv.org/abs/2510.02425

Unpaired learning of unified reps: arxiv.org/abs/2510.08492

1/9

🧠🤖

We propose a theory of how learning curriculum affects generalization through neural population dimensionality. Learning curriculum is a determining factor of neural dimensionality - where you start from determines where you end up.

🧠📈

A 🧵:

tinyurl.com/yr8tawj3

#MLSky #AI #neuroscience

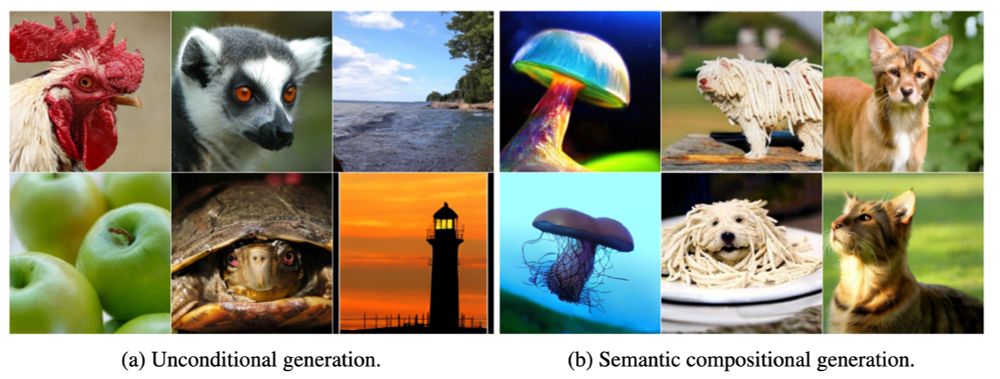

We introduce Discrete Latent Codes (DLCs):

- Discrete representation for diffusion models

- Uncond. gen. SOTA FID (1.59 on ImageNet)

- Compositional generation

- Integrates with LLM

🧱

How do we build neural decoders that are:

⚡️ fast enough for real-time use

🎯 accurate across diverse tasks

🌍 generalizable to new sessions, subjects, and even species?

We present POSSM, a hybrid SSM architecture that optimizes for all three of these axes!

🧵1/7

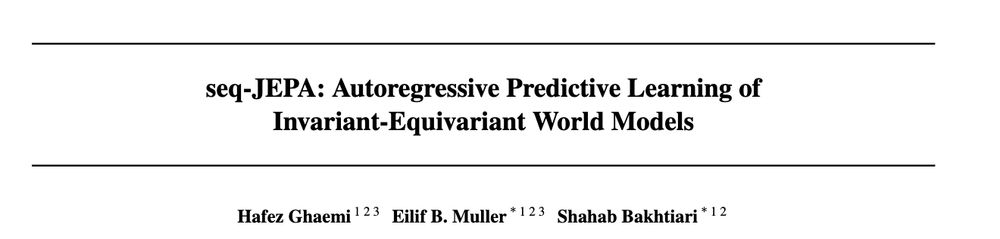

Can we simultaneously learn transformation-invariant and transformation-equivariant representations with self-supervised learning?

TL;DR Yes! This is possible via simple predictive learning & architectural inductive biases – without extra loss terms and predictors!

🧵 (1/10)

Or it *could* be, if it were open source.

Just imagine the resources it takes to develop an open version of this model. Now think about how much innovation could come from building on this, rather than just trying to recreate it (at best).

deepmind.google/discover/blo...

rdcu.be/d0YZT

By way of introduction to this research approach, I'll provide here a very short thread outlining the definition of the field I gave recently at our BRAIN NeuroAI workshop at the NIH.

🧠📈

go.bsky.app/BHKxoss

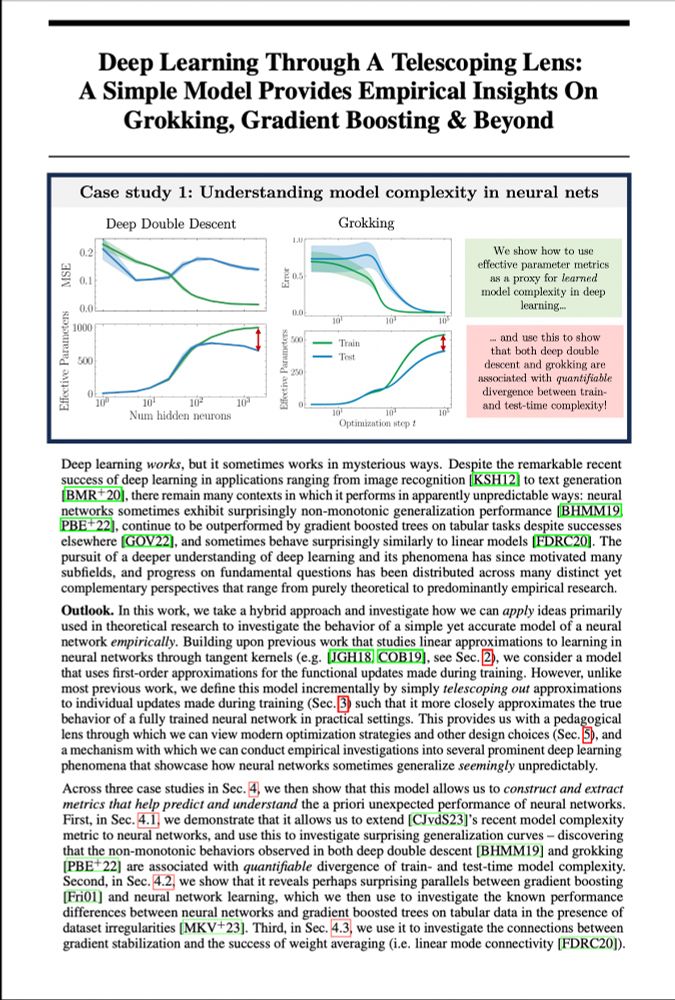

For NeurIPS (my final PhD paper!), @alanjeffares.bsky.social & I explored if & how smart linearisation can help us better understand & predict numerous odd deep learning phenomena, and learned a lot. 🧵1/n
