w/ Blake Richards & Shahab Bakhtiari

🧠🤖

We propose a theory of how learning curriculum affects generalization through neural population dimensionality. The learning curriculum is a determining factor of neural dimensionality: where you start determines where you end up.

🧠📈

A 🧵:

tinyurl.com/yr8tawj3

I’m particularly interested in (thread below): 1/3

🧠🤖 #MLSky

The preprint: www.biorxiv.org/content/10.1...

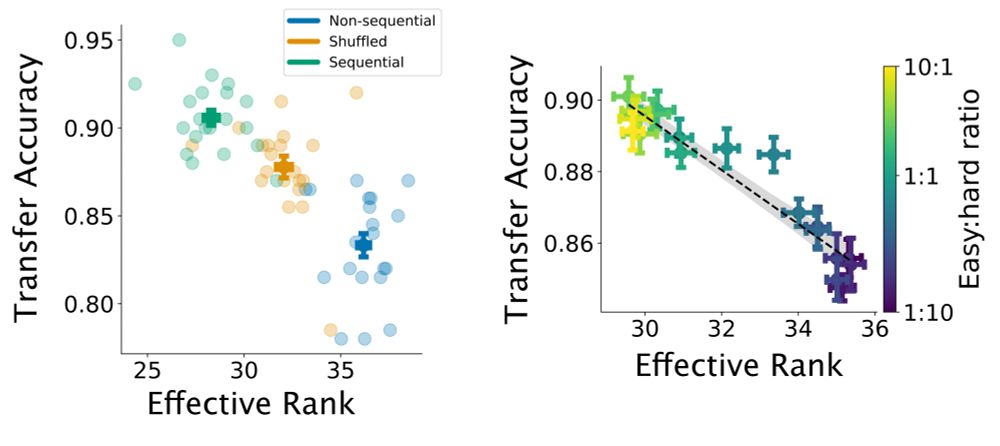

An easy-to-hard learning curriculum (explicit or implicit) sets the dimensionality of the neural population recruited to solve the task, and a lower-d readout leads to better generalization.

But there are some subtleties in applying this rule to real-world training design: 👇

2) The initial training phase sets this dimensionality (measured with the Jaccard index). J = 1 → no change in the readout subspace.

Therefore, learners following an explicit (or implicit) easy-to-hard curriculum will discover a lower-d readout subspace.
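
As a minimal sketch of the Jaccard index mentioned above: this assumes the readout subspace is summarized as the set of units with the largest readout weights. That summary, the helper names, and the example weights are all hypothetical; the thread does not say how the sets are defined.

```python
import numpy as np

def top_k_units(weights, k):
    """Return the set of indices of the k units with the largest |weight|."""
    order = np.argsort(-np.abs(np.asarray(weights, dtype=float)))
    return set(int(i) for i in order[:k])

def jaccard_index(a, b):
    """Jaccard index |A ∩ B| / |A ∪ B| between two sets."""
    a, b = set(a), set(b)
    union = a | b
    if not union:
        return 1.0  # two empty sets are treated as identical
    return len(a & b) / len(union)

# Hypothetical readout weights after the initial phase and at the end of training
w_early = np.array([0.9, 0.1, 0.8, 0.05, 0.02])
w_late = np.array([1.2, 0.0, 0.7, 0.1, 0.03])

early_units = top_k_units(w_early, k=2)
late_units = top_k_units(w_late, k=2)
j = jaccard_index(early_units, late_units)  # same units recruited in both phases
```

Under this assumption, J = 1 means the same units carry the readout before and after later training, and J near 0 means the readout moved to a different set of units.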

Two steps:

1) Easy tasks lead to a lower-d readout subspace: larger angle separation → lower-d readout

- Sequential and shuffled curricula significantly outperform a non-sequential baseline in ANNs & humans.

- Models do better on a sequential curriculum; human observers show comparable improvement on both sequential & shuffled, but with substantial variability in the shuffled curriculum.

1) A sequential easy-to-hard curriculum

2) A shuffled curriculum with randomly interleaved easy & hard trials

3) A non-sequential baseline with only hard trials.

We tested generalization on a hard transfer condition.
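
The three curricula above can be sketched as trial sequences. This is an illustration only: the `make_curriculum` helper, the "easy"/"hard" labels, and the equal split between easy and hard trials are assumptions, not details from the preprint.

```python
import random

def make_curriculum(kind, n_trials, seed=0):
    """Build a list of trial difficulties for one of the three curricula."""
    half = n_trials // 2
    if kind == "sequential":
        # All easy trials first, then all hard trials
        return ["easy"] * half + ["hard"] * (n_trials - half)
    if kind == "shuffled":
        # Same trials as the sequential curriculum, randomly interleaved
        trials = ["easy"] * half + ["hard"] * (n_trials - half)
        random.Random(seed).shuffle(trials)
        return trials
    if kind == "baseline":
        # Non-sequential baseline: hard trials only
        return ["hard"] * n_trials
    raise ValueError(f"unknown curriculum: {kind!r}")

sequential = make_curriculum("sequential", 6)
shuffled = make_curriculum("shuffled", 6)
baseline = make_curriculum("baseline", 6)
```

In this sketch the sequential and shuffled curricula contain exactly the same trials, so any difference between them comes from trial order alone.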

Can we simultaneously learn transformation-invariant and transformation-equivariant representations with self-supervised learning?

TL;DR Yes! This is possible via simple predictive learning & architectural inductive biases – without extra loss terms and predictors!

🧵 (1/10)

How do we build neural decoders that are:

⚡️ fast enough for real-time use

🎯 accurate across diverse tasks

🌍 generalizable to new sessions, subjects, and even species?

We present POSSM, a hybrid SSM architecture that optimizes for all three of these axes!

🧵1/7
