💻 https://github.com/pbontrager 📝 https://tinyurl.com/philips-papers

pytorch.org/blog/hugging...

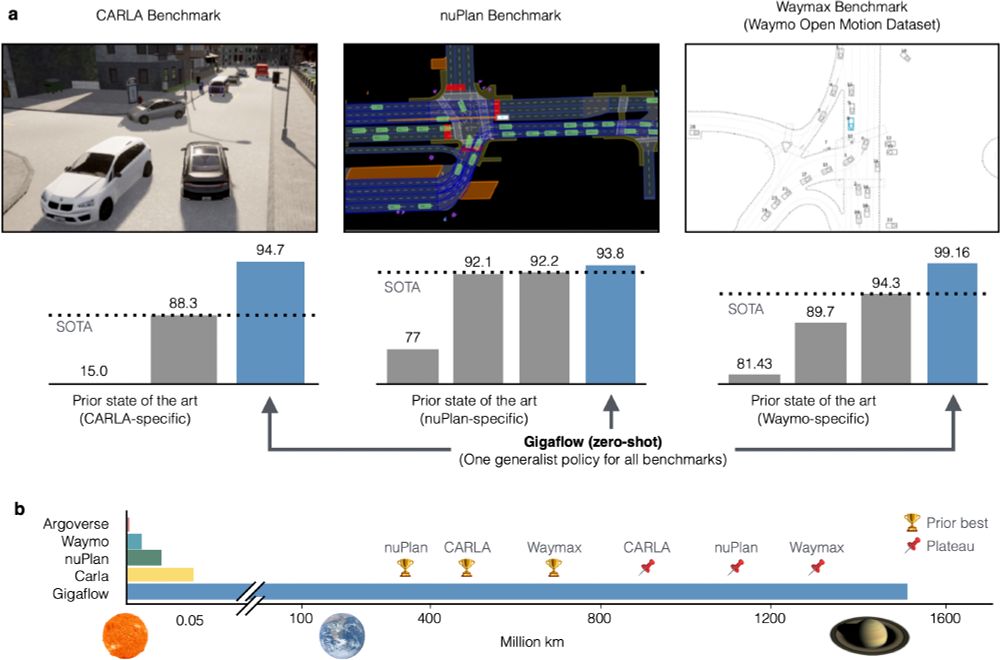

It is SOTA on every planning benchmark we tried.

In self-play, it goes 20 years between collisions.

Sonnet 3.5 gets the first play but not the second

o1 is very bad at this. On the first play it takes 59 seconds and its answer isn’t even a play that’s on the board. Same with the second play, but only 36 seconds.

www.metacareers.com/jobs/5121890...

www.kaggle.com/code/felipem...

We trained 2 new models. Like BERT, but modern. ModernBERT.

Not some hypey GenAI thing, but a proper workhorse model, for retrieval, classification, etc. Real practical stuff.

It's much faster, more accurate, longer context, and more useful. 🧵

By giving models more "time to think," Llama 1B outperforms Llama 8B in math—beating a model 8x its size. The full recipe is open-source!

The units in ANNs are actually not a terrible approximation of how real neurons work!

A tiny 🧵.

🧠📈 #NeuroAI #MLSky

I've seen people suggesting it's problematic, that neuroscientists won't like it, and so on.

But, I literally don't see why this is problematic...

Coming from the "giants" of AI.

Or maybe this was posted out of context? Please clarify.

I can't process this...

Paper: ai.meta.com/research/pub...

Code: github.com/facebookrese...

I’ll go first 👇🏻 (replying to myself like it’s normal)

Przemek co-led the Hash Code contest, which we used as the main test-bed to evaluate our approach 🚀

Worth a read if you want to understand implications of our work! Link below ⬇️

gist.github.com/pbontrager/b...

deepmind.google/discover/blo...

“I tested the idea we discussed last time. Here are some results. It does not work. (… awkward silence)”

Such conversations happen so often in meetings with students. How do we move forward?

You need …

The goal is always to make experimentation and hacking with the recipes easy. I’m curious what your opinions are on using trainers vs. recipe-style scripts.
