Learning representations, minimizing free energy, running.

We introduce Discrete Latent Codes (DLCs):

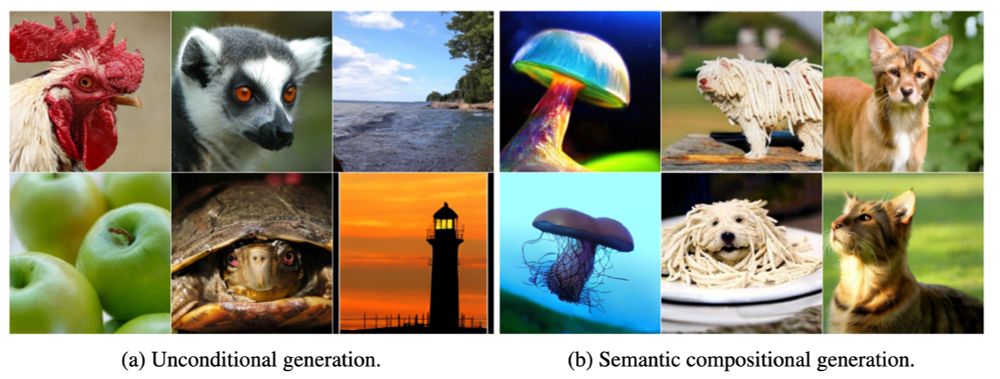

- Discrete representation for diffusion models

- SOTA unconditional generation: FID 1.59 on ImageNet

- Compositional generation

- Integrates with LLMs

🧱

Paper: arxiv.org/abs/2405.00740

Code: github.com/facebookrese...

Models:

- ViT-G: huggingface.co/lavoies/llip...

- ViT-B: huggingface.co/lavoies/llip...
