mathematics - neuroscience - artificial intelligence

How do we build neural decoders that are:

⚡️ fast enough for real-time use

🎯 accurate across diverse tasks

🌍 generalizable to new sessions, subjects, and even species?

We present POSSM, a hybrid SSM architecture that optimizes for all three of these axes!

🧵1/7

I would say this is the clearest demonstration of scaling laws in neural decoding to date.

www.nature.com/articles/s41...

🧠📈 🧪

tinyurl.com/yc4wpp3t

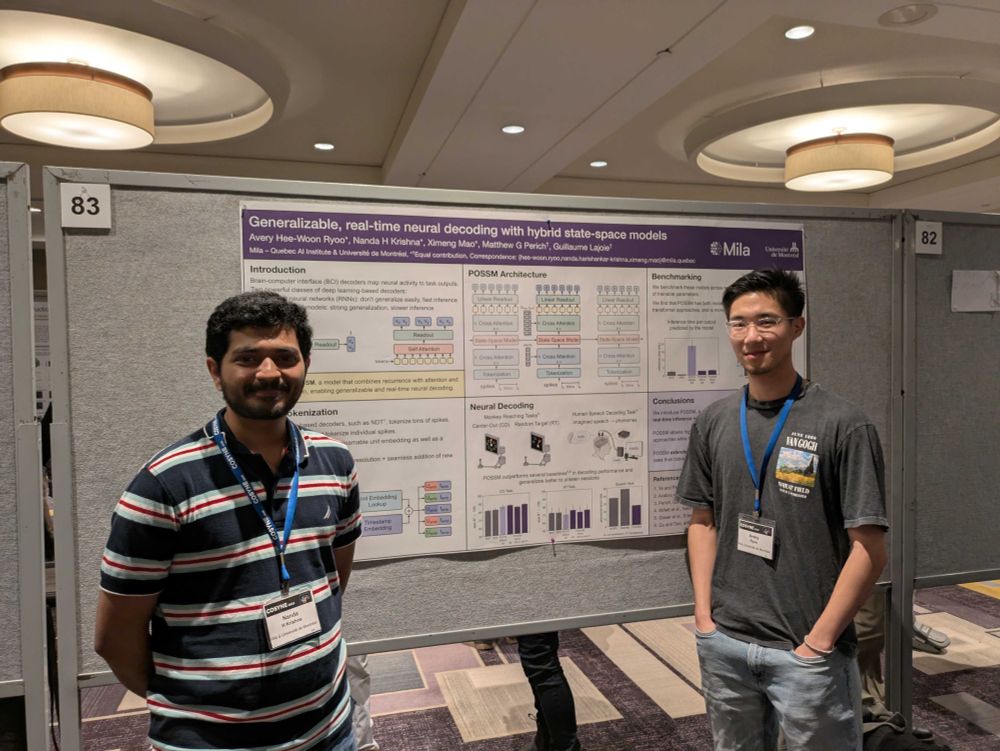

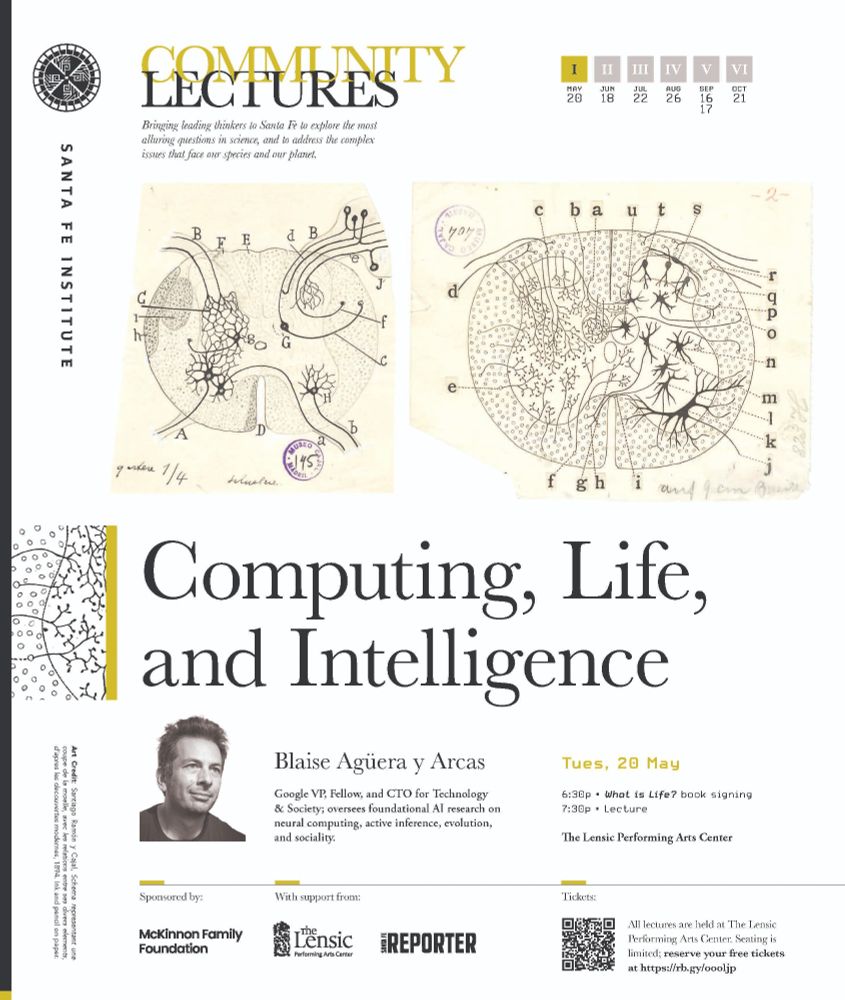

Blaise Agüera y Arcas presents 'Computing, Life, and Intelligence' at the Lensic on 🗓️ May 20, 7:30pm MT, in person or online.

Vision models often struggle with learning both transformation-invariant and -equivariant representations at the same time.

@hafezghm.bsky.social shows that self-supervised prediction with proper inductive biases achieves both simultaneously. (1/4)

#MLSky #NeuroAI

Can we simultaneously learn transformation-invariant and transformation-equivariant representations with self-supervised learning?

TL;DR Yes! This is possible via simple predictive learning & architectural inductive biases – without extra loss terms and predictors!

🧵 (1/10)

We explore Amortized In-Context Bayesian Posterior Estimation with Niels, @glajoie.bsky.social, Priyank Jaini & @marcusabrubaker.bsky.social ! 🔥

Amortized Conditional Modeling = key to success in large-scale models! We use it to estimate posteriors 🔑

📄 arxiv.org/abs/2502.06601

In-Context Parametric Inference: Point or Distribution Estimators?

Thrilled to share our work on inferring probabilistic model parameters explicitly conditioned on data, in collab with @yoshuabengio.bsky.social, Nikolay Malkin & @glajoie.bsky.social!

🔗 arxiv.org/abs/2502.11617

#Cosyne2025 @cosynemeeting.bsky.social

We added heroic analyses showing, in both experiments & models, that adaptive decoders alter the structure of what the brain learns. Check it out: www.biorxiv.org/content/10.1...

@glajoie.bsky.social and I have organized a party in Tremblant. Come and get on the dance floor y'all. 🕺

April 1st

10PM-3AM

Location: Le P'tit Caribou

DJs Mat Moebius, Xanarelle, and Prosocial

Please share!

www.thetransmitter.org/systems-neur...

#neuroscience #neuroAI #AI #compneuro @glajoie.bsky.social www.youtube.com/watch?v=CvCq...

#PLOSCompBio: Neural networks with optimized single-neuron adaptation uncover biologically plausible regulari ... dx.plos.org/10.1371/jour...

Props to V. Geadah and co-authors!