It’s named Baguettotron, people.

BAGUETTOTRON.

Anthropic — ???

GDM — pushing context out on smaller models

Chinese labs — hordes of sparse/long attention algos

it seems like everyone is betting on:

1. continual learning

2. that long context enables it

- Anthropomorphization makes sense when dealing with written human-like characters, which is what LLMs generate

- We aren’t very deep into interpretability yet

x.com/pfau/status/...

fwiw we don't understand human cognition either

www.wheresyoured.at/openai400bn/

news.ycombinator.com/item?id=4562...

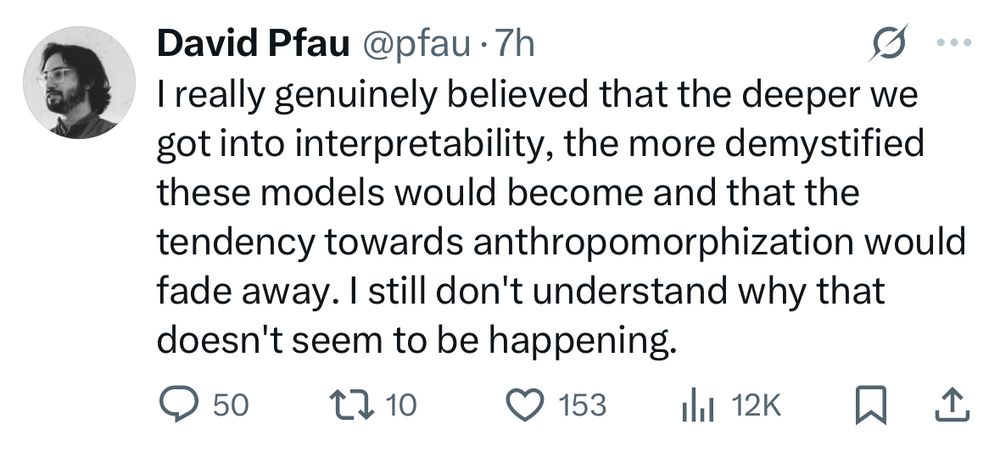

![hacker news comment by ctoth 29 minutes ago on: OpenAI Needs $400B In The Next 12 Months

His "$400B in next 12 months" claim treats OpenAI as paying construction costs upfront. But OpenAI is leasing capacity as operating expense - Oracle finances and builds the data centers [1]. This is like saying a tenant needs $5M cash because that's what the building cost to construct.

The Oracle deal structure: OpenAI pays ~$30B/year in rental fees starting fiscal 2027/2028 [2], ramping up over 5 years as capacity comes online. Not "$400B in 12 months."

The deals are structured as staged vendor financing:
- NVIDIA "invests" $10B per gigawatt milestone, gets paid back through chip purchases [3]
- AMD gives OpenAI warrants for 160M shares (~10% equity) that vest as chips deploy [4]
- As one analyst noted: "Nvidia invests $100 billion in OpenAI, which then OpenAI turns back and gives it back to Nvidia" [3]

This is circular vendor financing where suppliers extend credit betting on OpenAI's growth. It's unusual and potentially fragile, but it's not "OpenAI needs $400B cash they don't have."

Zitron asks: "Does OpenAI have $400B in cash?"

The actual question: "Can OpenAI grow revenue from $13B to $60B+ to cover lease payments by 2028-2029?"

The first question is nonsensical given deal structure. The second is the actual bet everyone's making.

His core thesis - "OpenAI literally cannot afford these deals therefore fraud" - fails because he fundamentally misunderstands how the deals work. The real questions are about execution timelines and revenue growth projections, not about OpenAI needing hundreds of billions in cash right now.

There's probably a good critical piece to write about whether these vendor financing bets will pay off, but this isn't it.](https://cdn.bsky.app/img/feed_thumbnail/plain/did:plc:ctphcgyhnllfluywokibtrgb/bafkreifm63qgybkwkaq5m44eezgtswypm4ueala7v2cu25g32pmfmidt24@jpeg)
