It’s named Baguettotron, people.

BAGUETTOTRON.

Anthropic — ???

GDM — pushing context out on smaller models

Chinese labs — hordes of sparse/long attention algos

it seems like everyone is betting on:

1. continual learning

2. that long context enables it

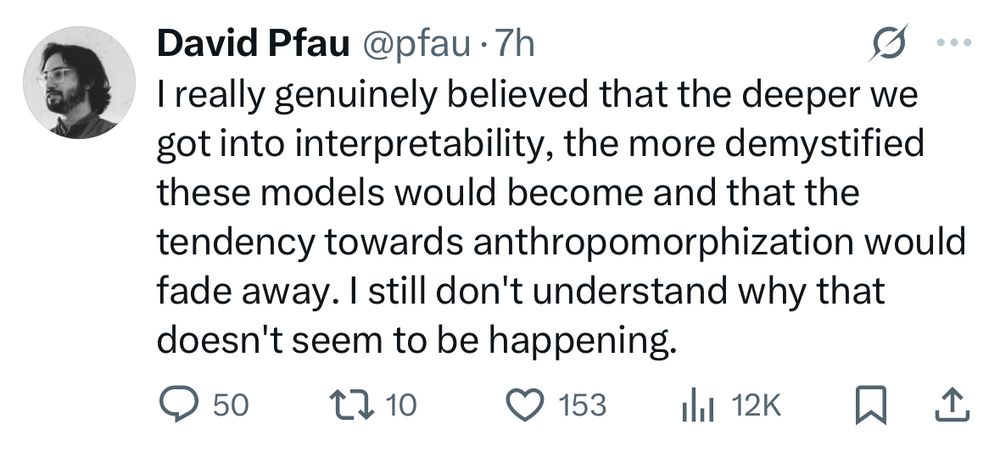

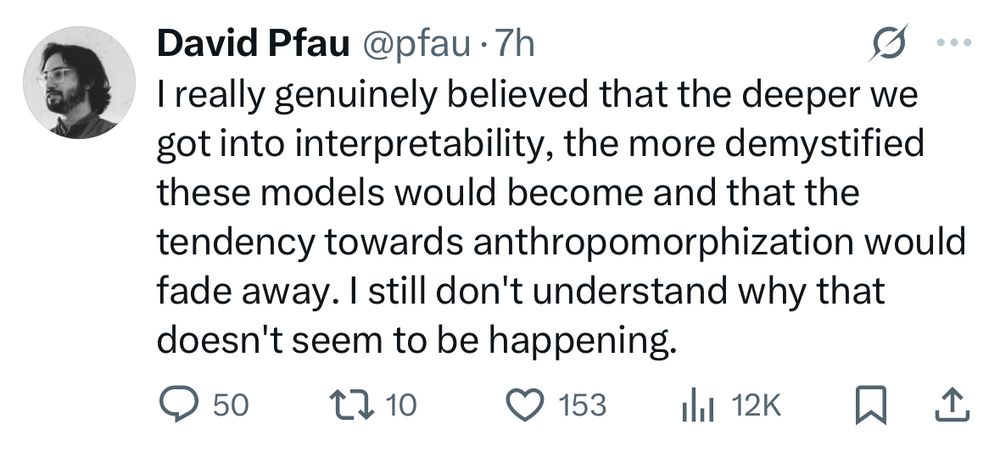

- Anthropomorphization makes sense when dealing with written human-like characters, which is what LLMs generate

- We aren’t very deep into interpretability yet

x.com/pfau/status/...

fwiw we don't understand human cognition either
