(New around here)

1/n

How can we interpret the algorithms and representations underlying complex behavior in deep learning models?

🌐 coginterp.github.io/neurips2025/

1/4

Our work explains this & *predicts Transformer behavior throughout training* without its weights! 🧵

1/

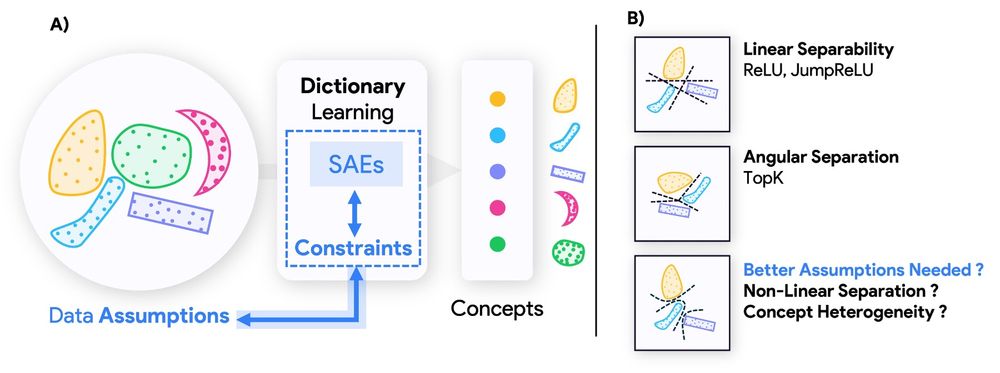

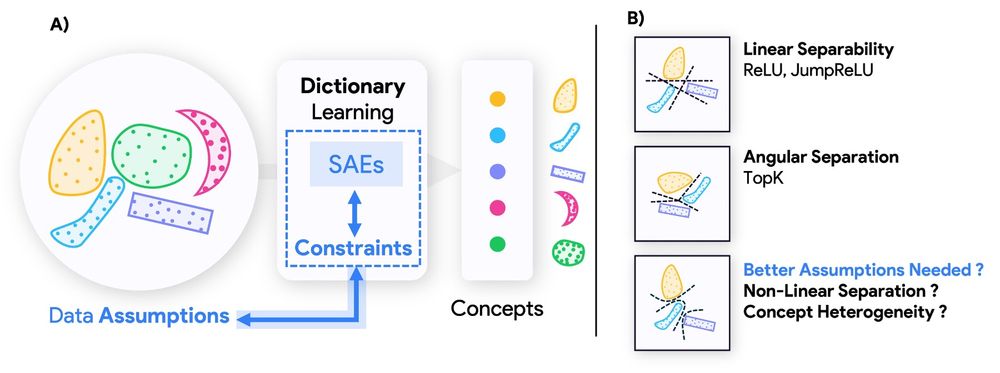

Do Sparse Autoencoders (SAEs) reveal all concepts a model relies on? Or do they impose hidden biases that shape what we can even detect?

We uncover a fundamental duality between SAE architectures and concepts they can recover.

Link: arxiv.org/abs/2503.01822

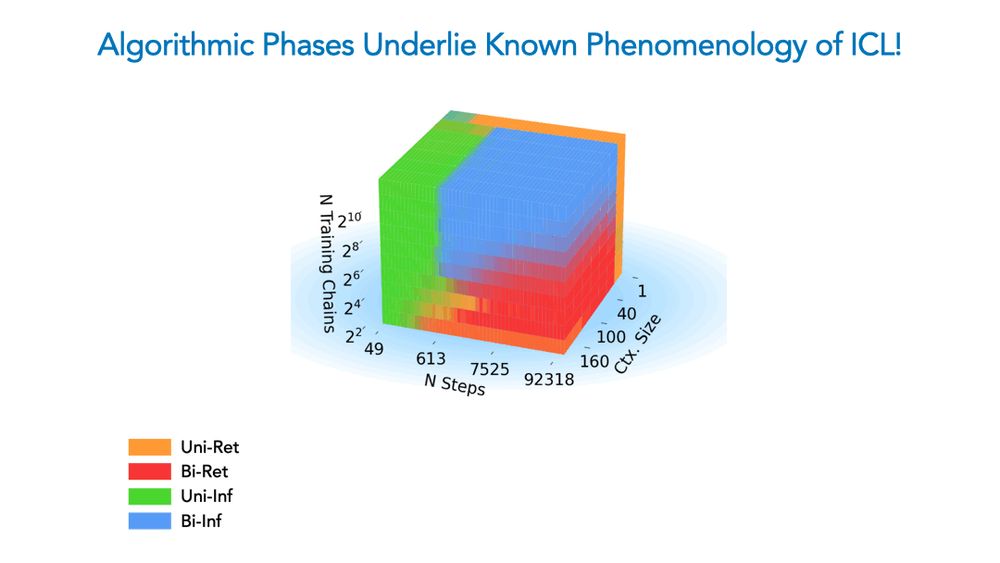

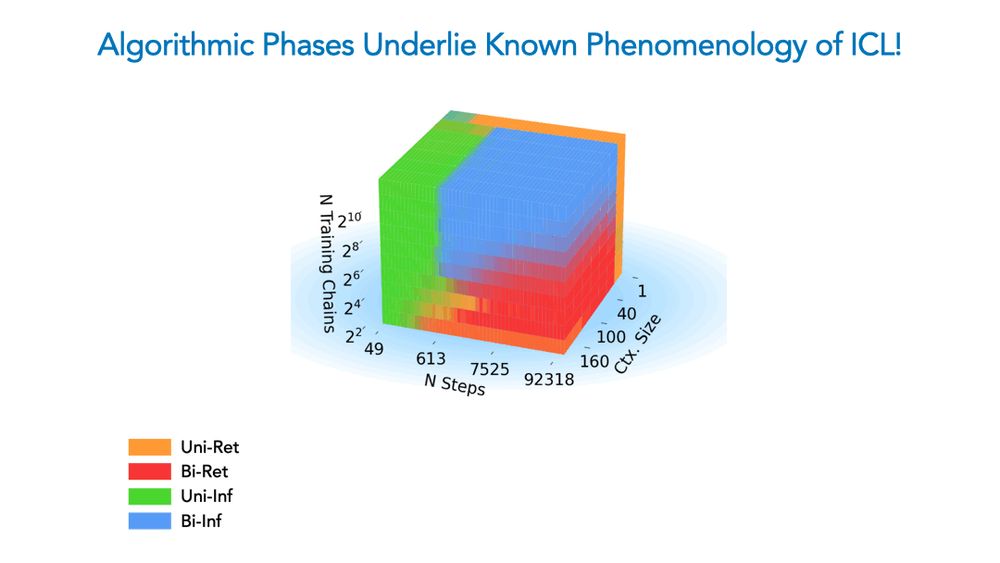

We show that a competition dynamic between several algorithms splits a toy model’s ICL abilities into four broad phases across train/test settings! This means ICL is akin to a mixture of different algorithms, not a monolithic ability.

What can ideas and approaches from science tell us about how AI works?

What might superhuman AI reveal about human cognition?

Join us for an internship at Harvard to explore together!

1/

We analyze the (in)abilities of SAEs by relating them to the field of disentangled representation learning, where the limitations of autoencoder-based interpretability protocols are well established! 🤯

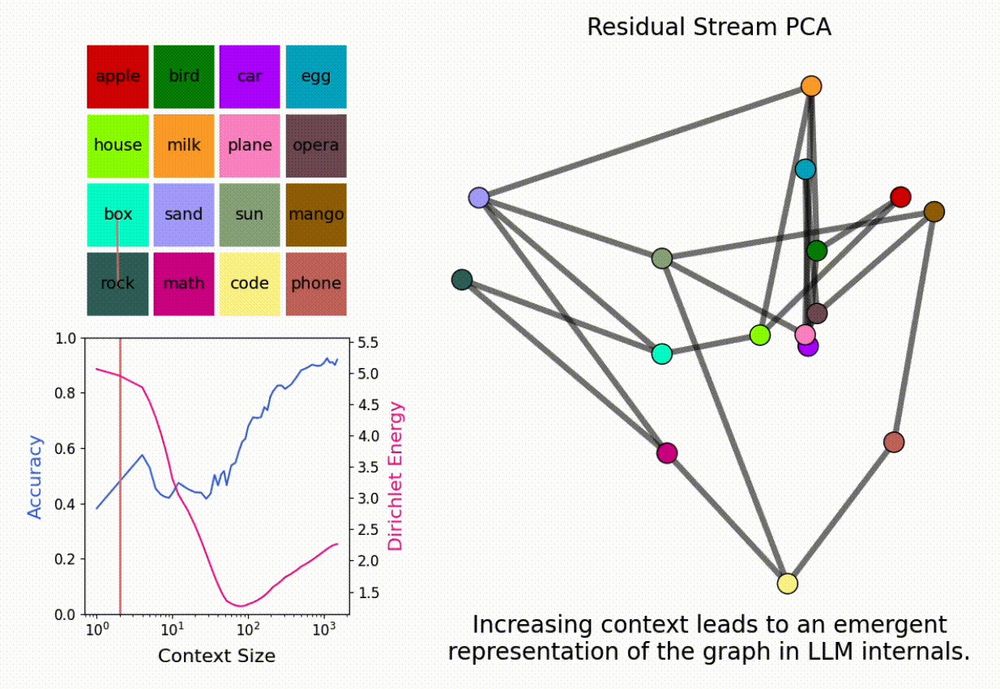

What happens to an LLM’s internal representations in the large context limit?

We find that LLMs form “in-context representations” to match the structure of the task given in context!

Interested in inference-time scaling? In-context Learning? Mech Interp?

LMs can solve novel in-context tasks, with sufficient examples (longer contexts). Why? Bc they dynamically form *in-context representations*!

1/N

Building on our work relating emergent abilities to task compositionality, we analyze the *learning dynamics* of compositional abilities & find that latent interventions can elicit them well before input prompting works! 🤯
