A lot happens in the world every day—how can we update LLMs with belief-changing news?

We introduce a new dataset "New News" and systematically study knowledge integration via System-2 Fine-Tuning (Sys2-FT).

1/n

A lot happens in the world every day—how can we update LLMs with belief-changing news?

We introduce a new dataset "New News" and systematically study knowledge integration via System-2 Fine-Tuning (Sys2-FT).

1/n

Interested in inference-time scaling? In-context Learning? Mech Interp?

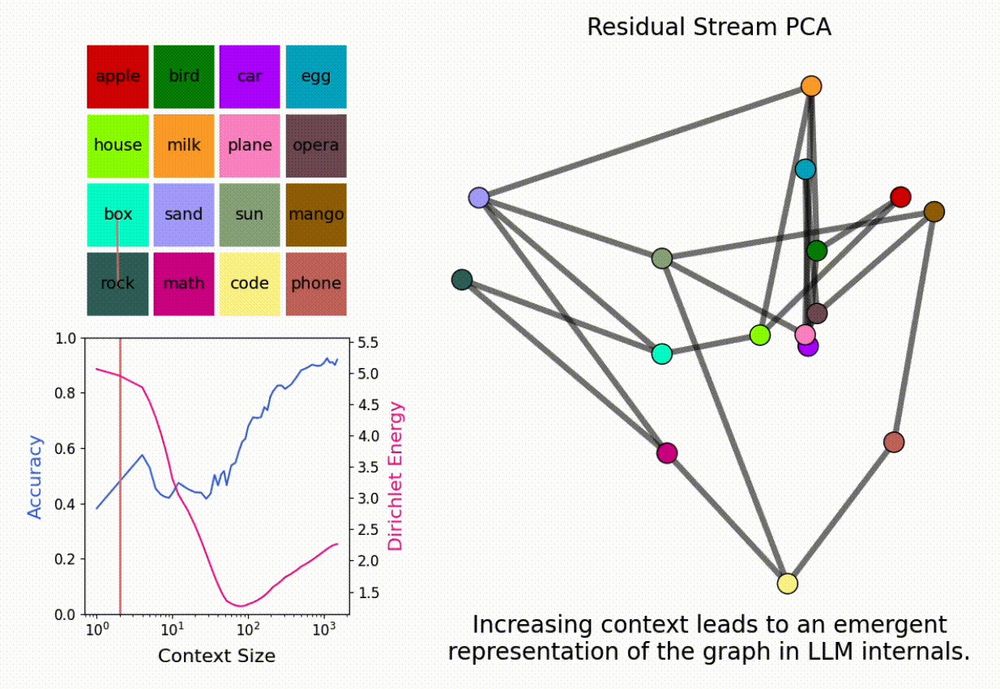

LMs can solve novel in-context tasks, with sufficient examples (longer contexts). Why? Bc they dynamically form *in-context representations*!

1/N

Interested in inference-time scaling? In-context Learning? Mech Interp?

LMs can solve novel in-context tasks, with sufficient examples (longer contexts). Why? Bc they dynamically form *in-context representations*!

1/N

What happens to an LLM’s internal representations in the large context limit?

We find that LLMs form “in-context representations” to match the structure of the task given in context!

What happens to an LLM’s internal representations in the large context limit?

We find that LLMs form “in-context representations” to match the structure of the task given in context!

Building on our work relating emergent abilities to task compositionality, we analyze the *learning dynamics* of compositional abilities & find there exist latent interventions that can elicit them much before input prompting works! 🤯

Building on our work relating emergent abilities to task compositionality, we analyze the *learning dynamics* of compositional abilities & find there exist latent interventions that can elicit them much before input prompting works! 🤯