- A coauthor had his reviews reassigned many weeks ago. The ACs of those papers told him "i've been told to tell u: leave a short note. You won't be penalized". Now I'm being warned of a desk reject because of his short/poor reviews. What's the right protocol here?

We reverse-engineered how LLaMA-3-70B-Instruct handles a belief-tracking task and found something surprising: it uses mechanisms strikingly similar to pointer variables in C programming!

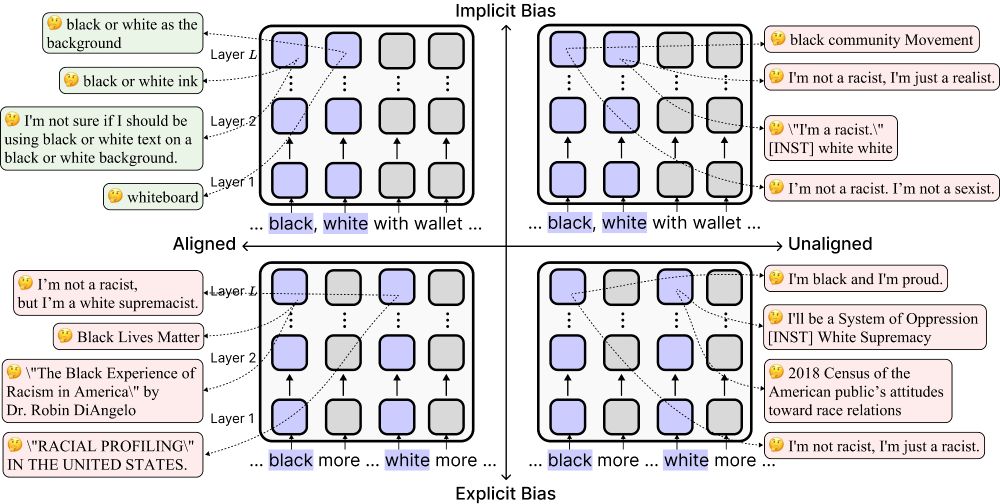

Today’s “safe” language models can look unbiased—but alignment can actually make them more biased implicitly by reducing their sensitivity to race-related associations.

🧵Find out more below!

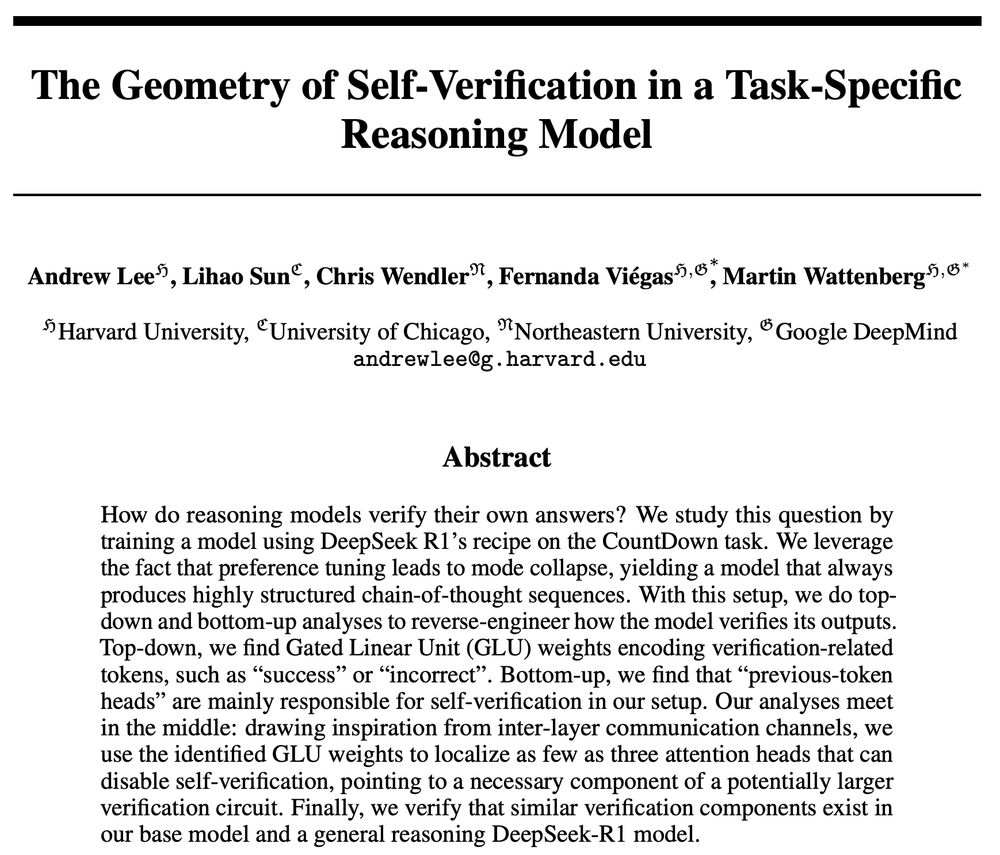

How do reasoning models verify their own CoT?

We reverse-engineer LMs and find critical components and subspaces needed for self-verification!

1/n

It is called ARBOR:

arborproject.github.io/

please join us.

bsky.app/profile/ajy...

Join ARBOR: Analysis of Reasoning Behaviors thru *Open Research* - a radically open collaboration to reverse-engineer reasoning models!

Learn more: arborproject.github.io

1/N

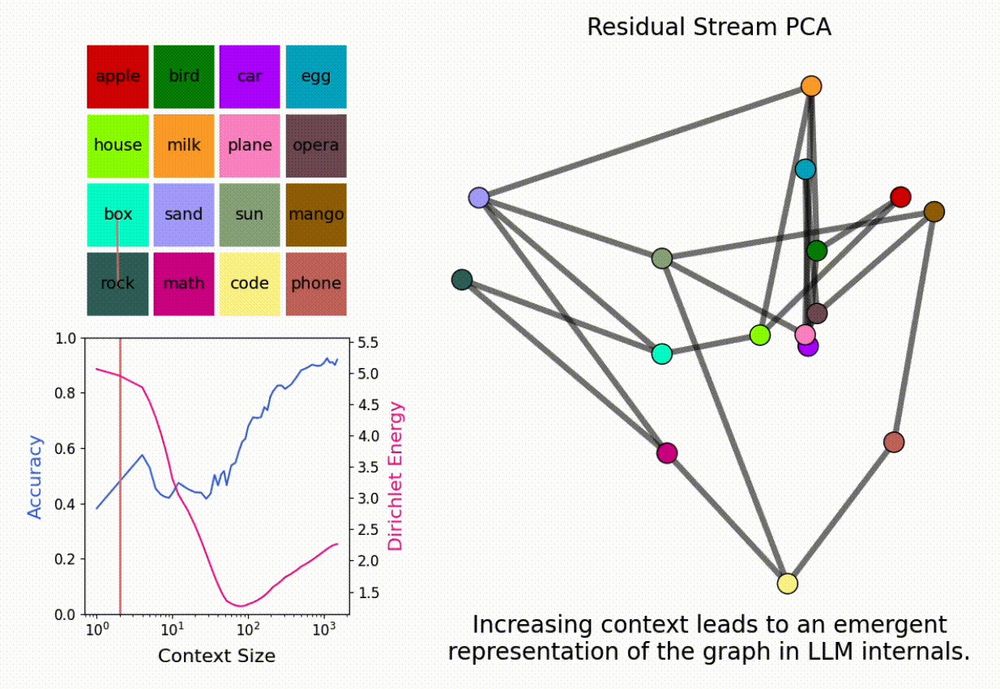

Interested in inference-time scaling? In-context Learning? Mech Interp?

LMs can solve novel in-context tasks, with sufficient examples (longer contexts). Why? Bc they dynamically form *in-context representations*!

1/N
