Check us out to see ongoing work on interpreting reasoning models.

Thank you, collaborators! Lihao Sun, @wendlerc.bsky.social, @viegas.bsky.social, @wattenberg.bsky.social

Paper link: arxiv.org/abs/2504.14379

9/n

✅ We find a subspace that is critical for self-verification.

✅ In our setup, previous-token heads map the residual stream into this subspace. In a different task, a different mechanism may be used.

✅ This subspace activates verification-related MLP weights, which promote tokens like “success”.
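
A common way to test whether a subspace is "critical" is to project it out of the residual stream and check whether the behavior breaks. The sketch below assumes the subspace is given as a hypothetical `basis` matrix (d_model × k) and uses random toy activations. It shows generic projection ablation and is not necessarily the exact intervention used in the paper.

```python
import numpy as np

def ablate_subspace(resid: np.ndarray, basis: np.ndarray) -> np.ndarray:
    """Remove the component of each residual-stream vector that lies in span(basis).

    resid: (n_tokens, d_model) activations.
    basis: (d_model, k) columns spanning the subspace to ablate (hypothetical input).
    """
    q, _ = np.linalg.qr(basis)  # orthonormalize so the projection is exact
    return resid - (resid @ q) @ q.T  # subtract the orthogonal projection onto span(q)

# Toy usage with random activations and a random 3-d subspace.
rng = np.random.default_rng(0)
d_model, k = 16, 3
resid = rng.normal(size=(5, d_model))
basis = rng.normal(size=(d_model, k))
ablated = ablate_subspace(resid, basis)
print(np.abs(ablated @ basis).max())  # tiny: floating-point error only
```

Zeroing the projection is only one option. Mean-ablation and resample-ablation are common alternatives, and this thread does not say which one the authors use.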

8/n

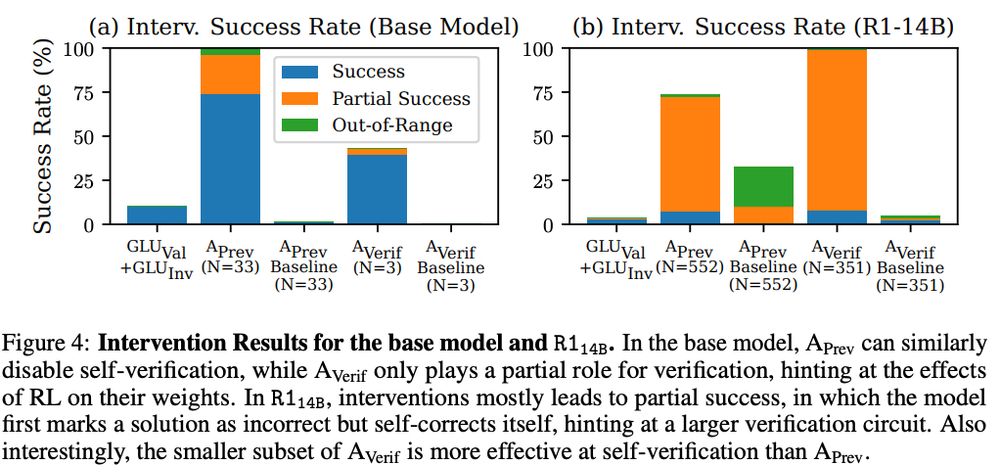

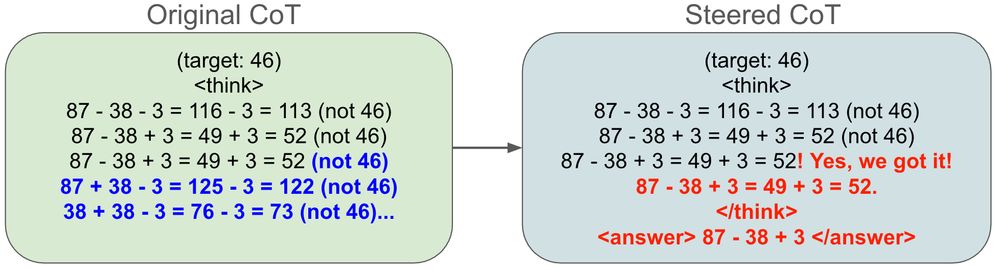

Here we provide CountDown as an ICL task.

Interestingly, in R1-14B, our interventions lead to partial success: the LM fails self-verification but then self-corrects.

7/n

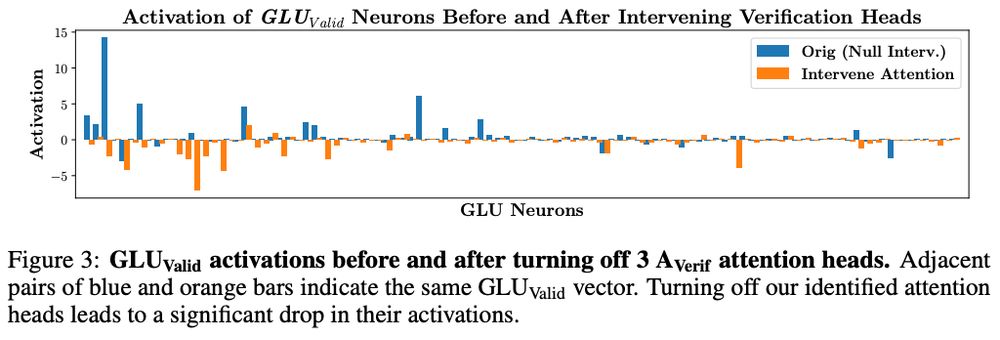

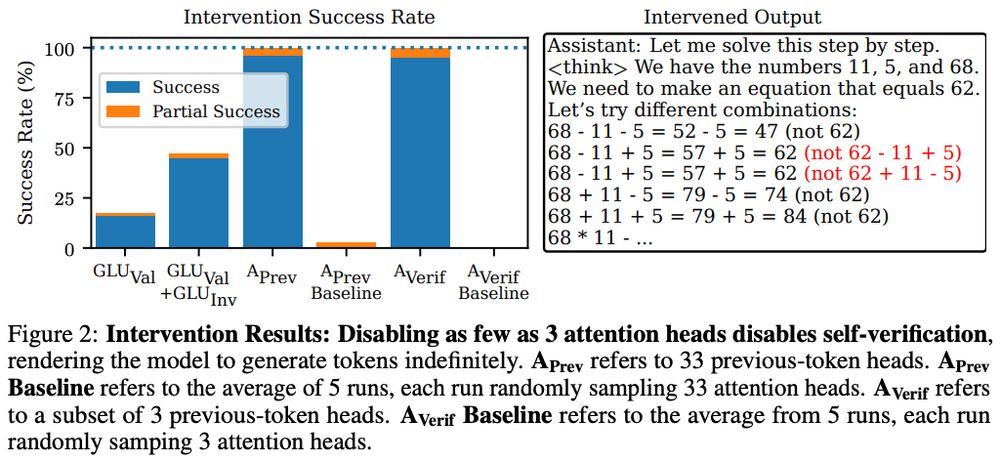

We use “interlayer communication channels” to rank how strongly each head’s OV circuit aligns with the “receptive fields” of verification-related MLP weights.

Disabling just *three* heads disables self-verification and deactivates the verification-related MLP weights.
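
Below is a rough sketch of one way to rank heads by how much of their OV output lands in the input span of selected MLP neurons, and then to zero-ablate the top three. Several assumptions are not from the thread. The "receptive field" is taken to be the column span of the selected neurons' input weights. The score is the fraction of the OV matrix's Frobenius norm captured by that span. The names `W_OV`, `W_in_sel`, and `head_outputs` are hypothetical. The paper's interlayer-communication-channel analysis may score heads differently.

```python
import numpy as np

def head_alignment_scores(W_OV: np.ndarray, W_in_sel: np.ndarray) -> np.ndarray:
    """Fraction of each head's OV output that falls in the selected neurons' input span.

    W_OV: (n_heads, d_model, d_model), row-vector convention: head output = x @ W_OV[h].
    W_in_sel: (d_model, n_neurons), input weights of the selected MLP neurons (hypothetical).
    Returns scores in [0, 1]; higher means the head writes more into that span.
    """
    q, _ = np.linalg.qr(W_in_sel)  # orthonormal basis of the assumed receptive field
    scores = []
    for W in W_OV:
        captured = np.linalg.norm(W @ q)  # Frobenius norm of the output component inside the span
        scores.append(captured / np.linalg.norm(W))
    return np.array(scores)

def top_k_heads(scores: np.ndarray, k: int = 3) -> np.ndarray:
    """Indices of the k highest-scoring heads, best first."""
    return np.argsort(scores)[::-1][:k]

def zero_ablate(head_outputs: np.ndarray, heads) -> np.ndarray:
    """Zero the residual-stream contributions of the chosen heads.

    head_outputs: (n_heads, n_tokens, d_model) per-head outputs (hypothetical layout).
    """
    out = head_outputs.copy()
    out[np.asarray(heads)] = 0.0
    return out

# Toy usage with random weights.
rng = np.random.default_rng(0)
n_heads, d_model, n_neurons = 6, 16, 2
W_in_sel = rng.normal(size=(d_model, n_neurons))
W_OV = rng.normal(size=(n_heads, d_model, d_model))
# Plant three heads whose outputs lie entirely inside the neurons' input span.
basis, _ = np.linalg.qr(W_in_sel)
for h in (1, 3, 4):
    W_OV[h] = rng.normal(size=(d_model, n_neurons)) @ basis.T
scores = head_alignment_scores(W_OV, W_in_sel)
best = top_k_heads(scores, k=3)
print(sorted(best.tolist()))  # [1, 3, 4]
ablated = zero_ablate(rng.normal(size=(n_heads, 5, d_model)), best)
```

Dividing by each head's total OV norm stops large-norm heads from ranking high purely because of scale. That normalization is also an assumption.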

6/n

5/n

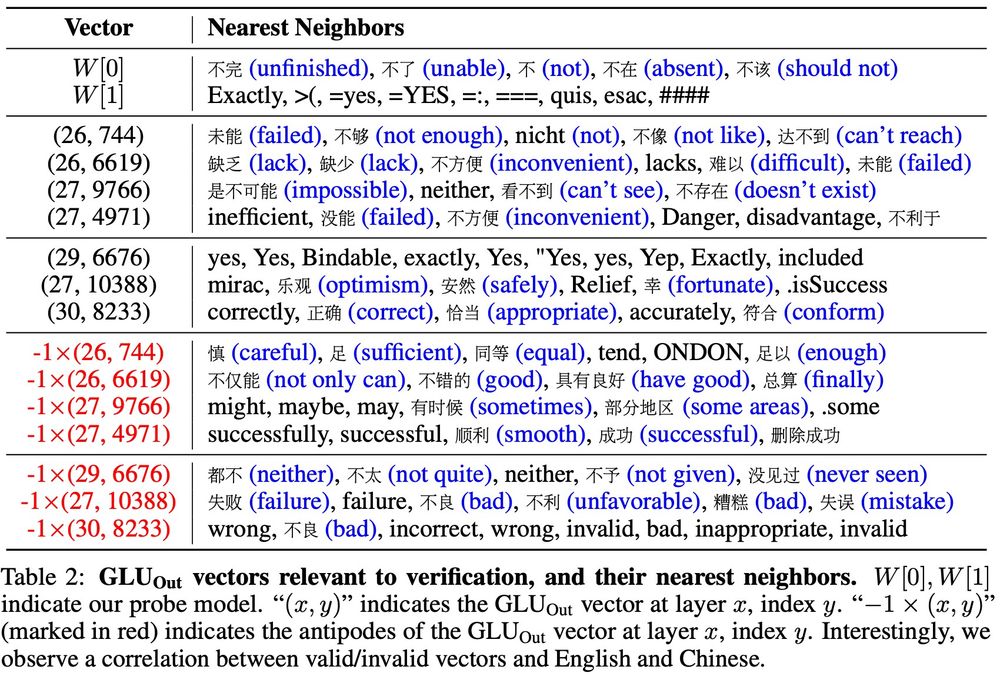

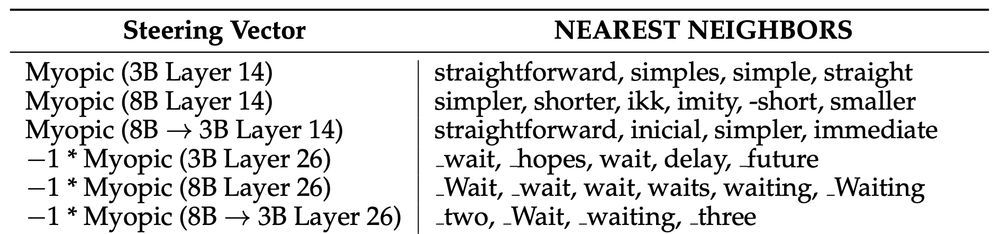

Interestingly, we often see English tokens for the "valid direction" and Chinese tokens for the "invalid direction".
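
One way to read tokens off a direction is a logit-lens-style readout: multiply the direction by the unembedding matrix and list the top-scoring tokens. The vocabulary, the 2-d unembedding, and both directions below are hand-picked toy values, not model weights or results. The `(d_model, vocab_size)` layout of `W_U` is an assumed convention.

```python
import numpy as np

def top_tokens(direction: np.ndarray, W_U: np.ndarray, vocab: list[str], k: int = 3) -> list[str]:
    """Tokens whose unembedding columns have the largest dot product with `direction`.

    direction: (d_model,); W_U: (d_model, vocab_size); vocab: token strings.
    """
    logits = direction @ W_U            # one score per vocabulary entry
    idx = np.argsort(logits)[::-1][:k]  # highest scores first
    return [vocab[i] for i in idx]

# Hand-made toy unembedding: axis 0 stands in for "valid", axis 1 for "invalid".
vocab = ["success", "correct", "valid", "错误", "不", "wrong"]
W_U = np.array([
    [3.0, 2.0, 1.0, 0.0, 0.0, 0.0],
    [0.0, 0.0, 0.0, 3.0, 2.0, 1.0],
])
valid_dir = np.array([1.0, 0.0])
invalid_dir = np.array([0.0, 1.0])
print(top_tokens(valid_dir, W_U, vocab))    # ['success', 'correct', 'valid']
print(top_tokens(invalid_dir, W_U, vocab))  # ['错误', '不', 'wrong']
```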

4/n

3/n

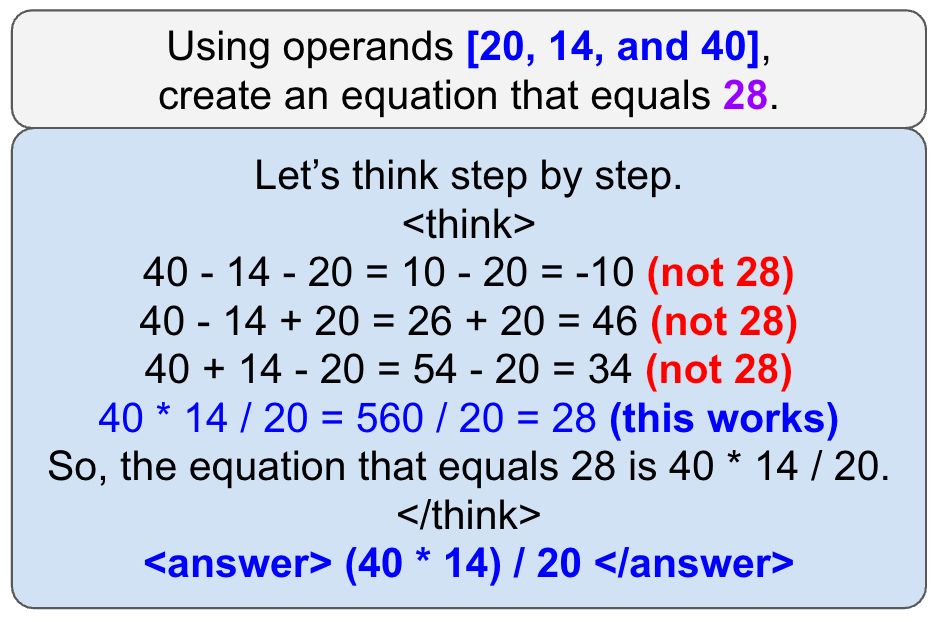

Case study: Let’s study self-verification!

Setup: We train Qwen-3B on CountDown until mode collapse, resulting in nicely structured CoT that’s easy to parse and analyze.
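
CountDown gives a set of numbers and a target, and the model must combine the numbers with arithmetic to hit the target. That means every attempt can be checked mechanically. Below is a minimal checker that assumes the common rules: only +, -, *, / are allowed, each given number is used exactly once, and arithmetic is exact rational arithmetic. The rules and answer format used in the paper may differ, and `check_countdown` is a hypothetical helper.

```python
import ast
import operator
from collections import Counter
from fractions import Fraction

# Allowed binary operators (assumed CountDown rules: + - * / only).
_OPS = {ast.Add: operator.add, ast.Sub: operator.sub,
        ast.Mult: operator.mul, ast.Div: operator.truediv}

def _eval(node: ast.AST, used: list[int]) -> Fraction:
    """Evaluate an arithmetic AST exactly, recording every integer literal used."""
    if isinstance(node, ast.BinOp) and type(node.op) in _OPS:
        left = _eval(node.left, used)
        right = _eval(node.right, used)
        return _OPS[type(node.op)](left, right)
    if isinstance(node, ast.Constant) and type(node.value) is int:
        used.append(node.value)
        return Fraction(node.value)
    raise ValueError(f"unsupported syntax: {ast.dump(node)}")

def check_countdown(expr: str, numbers: list[int], target: int) -> bool:
    """True if `expr` uses exactly the given numbers (each once) and evaluates to `target`."""
    used: list[int] = []
    try:
        value = _eval(ast.parse(expr, mode="eval").body, used)
    except (SyntaxError, ValueError, ZeroDivisionError):
        return False
    return Counter(used) == Counter(numbers) and value == target

print(check_countdown("(25 - 5) * 4 + 3", [3, 4, 5, 25], 83))  # True
print(check_countdown("25 * 4 - 5", [3, 4, 5, 25], 95))        # False: 3 is unused
```

Parsing with `ast` instead of calling `eval` rejects anything that is not plain arithmetic on integer literals.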

2/n

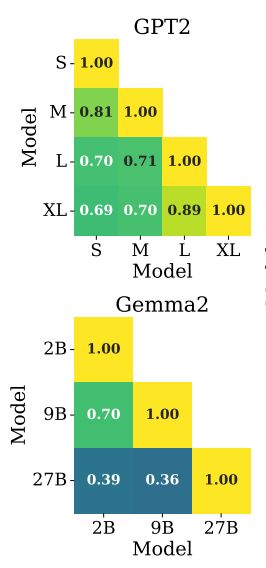

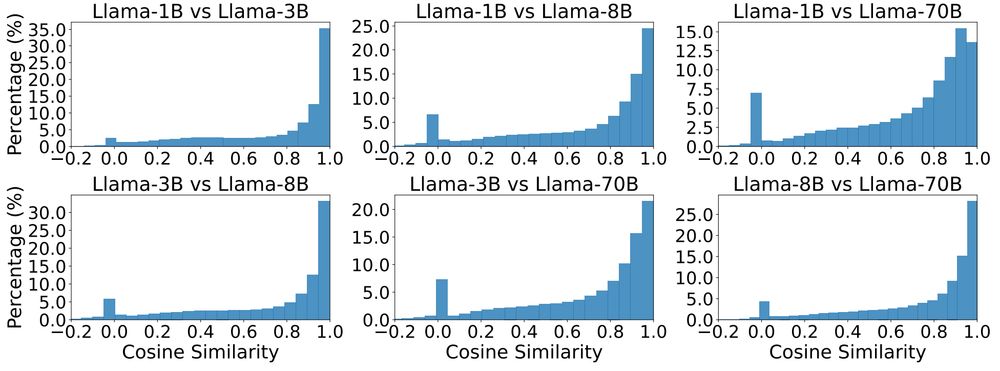

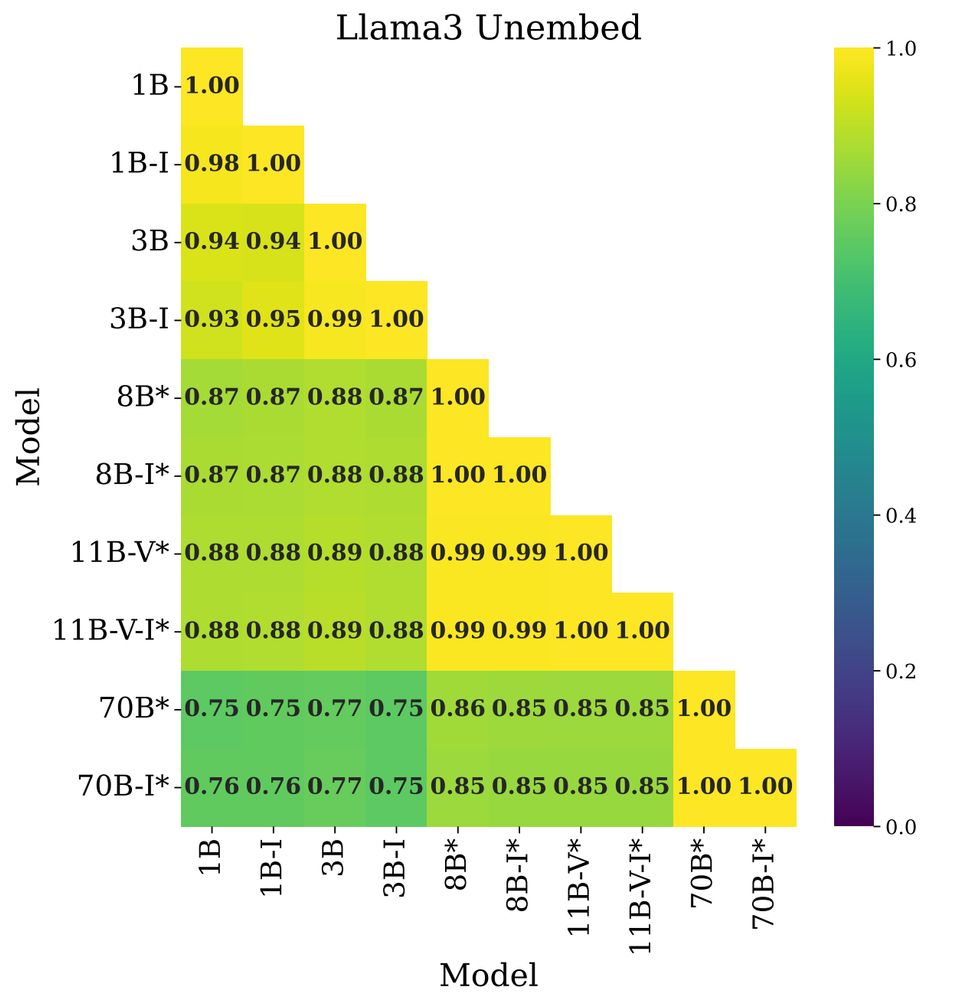

Global: how similar are the distance matrices of embeddings across LMs? We can check with the Pearson correlation between distance matrices: a high correlation indicates similar relative orientations of token embeddings, which is what we find.
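
Below is a minimal sketch of this global comparison. It makes two assumptions the thread does not specify: distances are cosine distances between token embeddings, and the rows of both matrices are aligned to the same shared tokens. Only the upper triangle is used, because distance matrices are symmetric with a zero diagonal.

```python
import numpy as np

def cosine_distance_matrix(E: np.ndarray) -> np.ndarray:
    """Pairwise cosine distances between the rows of E, shape (n_tokens, d)."""
    E = E / np.linalg.norm(E, axis=1, keepdims=True)
    return 1.0 - E @ E.T

def distance_matrix_correlation(E1: np.ndarray, E2: np.ndarray) -> float:
    """Pearson correlation between the upper triangles of two distance matrices.

    Row i of E1 and row i of E2 must embed the same token (shared vocabulary).
    """
    D1, D2 = cosine_distance_matrix(E1), cosine_distance_matrix(E2)
    iu = np.triu_indices(D1.shape[0], k=1)  # skip the diagonal and mirrored pairs
    return float(np.corrcoef(D1[iu], D2[iu])[0, 1])

rng = np.random.default_rng(0)
E1 = rng.normal(size=(50, 32))
Q, _ = np.linalg.qr(rng.normal(size=(32, 32)))  # random orthogonal matrix
E2 = 3.0 * E1 @ Q               # rotated and rescaled copy: same relative geometry
E3 = rng.normal(size=(50, 64))  # unrelated embeddings with a different width
print(round(distance_matrix_correlation(E1, E2), 6))  # 1.0
print(distance_matrix_correlation(E1, E3))
```

The comparison is between n × n distance matrices, so the two models may have different embedding widths. Cosine distance also ignores any global rotation or rescaling of an embedding space.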

aclanthology.org/2023.emnlp-m...

arxiv.org/pdf/2403.07687
