Zechen Zhang and @hidenori8tanaka.bsky.social

Here is the preprint:

arxiv.org/abs/2505.01812

14/n

✅ There's a clear FT-ICL gap

✅ Self-QA largely mitigates it

✅ Larger models are more data-efficient learners

✅ Contextual shadowing hurts fine-tuning

Please check out the paper (see below) for even more findings!

13/n

We are working on confirming this hypothesis on real data.

12/n

Let's take the example of research papers. In a typical research paper, the abstract usually “spoils” the rest of the paper.

11/n

What if the news appears in the context upstream of the *same* FT data?

🚨 Contextual Shadowing happens!

Prefixing the news during FT *catastrophically* reduces learning!

10/n

Larger models are thus more data-efficient learners!

Note that this scaling isn’t evident in loss.

9/n

8/n

Training on synthetic Q/A pairs really boosts knowledge integration!

7/n

6/n

We call this the FT-ICL gap.

5/n

To explore this, we built “New News”: 75 new hypothetical (but non-counterfactual) facts across diverse domains, paired with 375 downstream questions.

4/n

Given:

- Mathematicians defined 'addiplication' as (x+y)*y

Models can answer:

Q: What is the addiplication of 3 and 4?

A: (3+4)*4=28
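
As a quick check of the arithmetic above, here is a minimal sketch of the definition in Python. The function name `addiplication` is just a hypothetical helper for illustration; it is not code from the paper.

```python
def addiplication(x, y):
    """Hypothetical helper for the thread's made-up operation: (x + y) * y."""
    return (x + y) * y


# The example from the post: (3 + 4) * 4
print(addiplication(3, 4))  # 28

# The operation is not symmetric in its arguments: (4 + 3) * 3
print(addiplication(4, 3))  # 21
```

Because the second argument is used twice, swapping the inputs changes the answer, which is part of what makes the definition a genuinely new fact to apply.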

3/n

2/n

Big thanks to the team: @ajyl.bsky.social, @ekdeepl.bsky.social, Yongyi Yang, Maya Okawa, Kento Nishi, @wattenberg.bsky.social, @hidenori8tanaka.bsky.social

14/n

We find a power law relationship between the critical context size and the graph size.
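
For readers who want to see what a power-law check looks like in practice, here is a minimal sketch of fitting y = c * x^alpha by least squares in log-log space. Treating graph size as x and critical context size as y is an assumption about how the relationship is framed, `fit_power_law` is a hypothetical helper, and the points below are generated from a known formula purely to exercise the code; they are not results from the paper.

```python
import math


def fit_power_law(xs, ys):
    """Fit y = c * x**alpha via ordinary least squares on (log x, log y)."""
    lx = [math.log(x) for x in xs]
    ly = [math.log(y) for y in ys]
    n = len(lx)
    mean_x = sum(lx) / n
    mean_y = sum(ly) / n
    sxx = sum((a - mean_x) ** 2 for a in lx)
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(lx, ly))
    alpha = sxy / sxx                         # slope in log-log space
    c = math.exp(mean_y - alpha * mean_x)     # intercept, mapped back
    return c, alpha


# Synthetic placeholder points from y = 2 * x**1.5 (NOT data from the paper).
xs = [1, 2, 4, 8, 16]
ys = [2 * x ** 1.5 for x in xs]
c, alpha = fit_power_law(xs, ys)
print(round(c, 6), round(alpha, 6))
```

A power law shows up as a straight line on a log-log plot, so the fitted slope `alpha` is the exponent and `c` is the prefactor.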

13/n

12/n

11/n

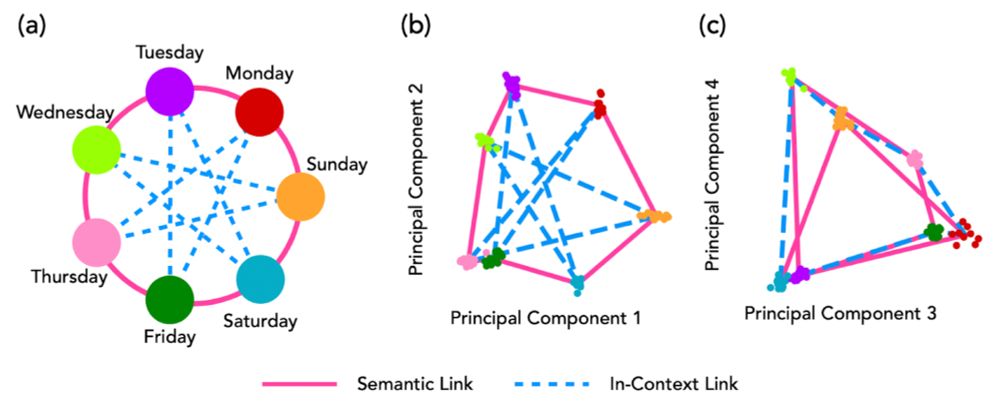

We set up a task where the days of the week should be navigated in an unusual way: Mon -> Thu -> Sun, etc.

Here, we find that in-context representations show up in higher PC dimensions.
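
To make the unusual ordering concrete, here is a small sketch that generates the walk. It assumes the "etc." means the pattern keeps stepping forward three days at a time (Mon -> Thu -> Sun is +3 each step); the `walk` helper and its parameters are hypothetical, not taken from the paper.

```python
DAYS = ["Mon", "Tue", "Wed", "Thu", "Fri", "Sat", "Sun"]


def walk(start="Mon", step=3, length=8):
    """Return `length` days, moving `step` days forward (mod 7) each time.

    step=3 is an assumption inferred from Mon -> Thu -> Sun.
    """
    i = DAYS.index(start)
    return [DAYS[(i + k * step) % len(DAYS)] for k in range(length)]


print(" -> ".join(walk()))
# Mon -> Thu -> Sun -> Wed -> Sat -> Tue -> Fri -> Mon
```

Since 3 and 7 share no common factor, this walk visits every day exactly once before returning to Monday, so it defines a full alternative ordering of the week.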

10/n

9/n

Here, we used a ring graph and sampled random neighbors on the graph.

Again, we find that internal representations reorganize to match the task structure.
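
As a rough illustration of the ring-graph setup, here is a minimal sketch of sampling random neighbor pairs. The thread does not spell out the exact procedure, so the scheme here (pick a node uniformly, then one of its two ring neighbors uniformly), the ring size, and the names `ring_neighbors` and `sample_neighbor_pairs` are all assumptions.

```python
import random


def ring_neighbors(node, n):
    """The two neighbors of `node` on a ring of n nodes labeled 0..n-1."""
    return [(node - 1) % n, (node + 1) % n]


def sample_neighbor_pairs(n, num_pairs, seed=0):
    """Sample (node, neighbor) pairs: uniform node, then uniform neighbor.

    This sampling scheme is an assumption, not the paper's stated method.
    """
    rng = random.Random(seed)
    pairs = []
    for _ in range(num_pairs):
        node = rng.randrange(n)
        neighbor = rng.choice(ring_neighbors(node, n))
        pairs.append((node, neighbor))
    return pairs


# Every sampled pair is adjacent on the ring (indices differ by 1 mod n).
for node, neighbor in sample_neighbor_pairs(n=7, num_pairs=5):
    print(node, neighbor)
```

In a setup like this, the node indices would be mapped to tokens and the pairs placed in the context, so the only place the ring structure exists is in the prompt itself.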

8/n

elifesciences.org/articles/17086

7/n

6/n
