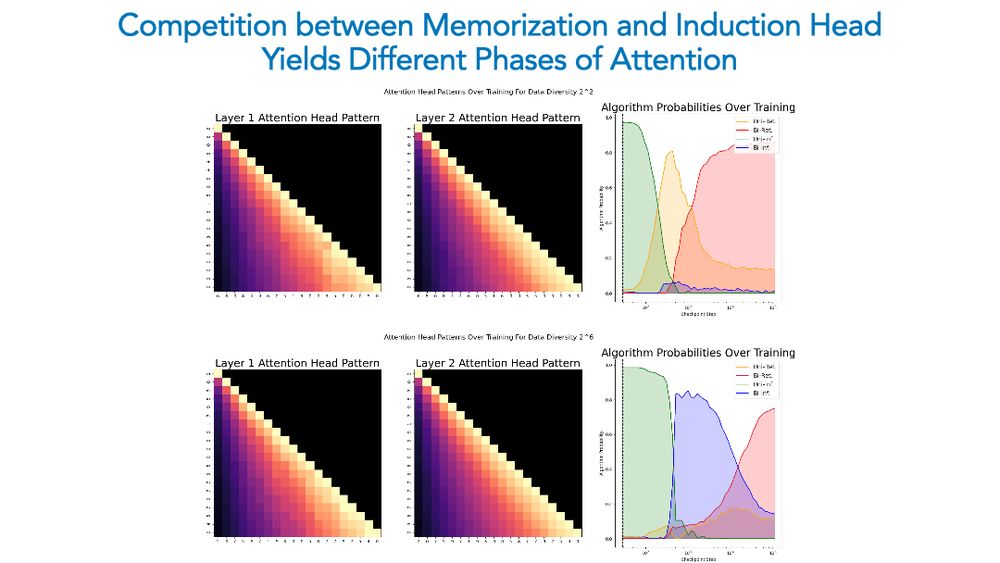

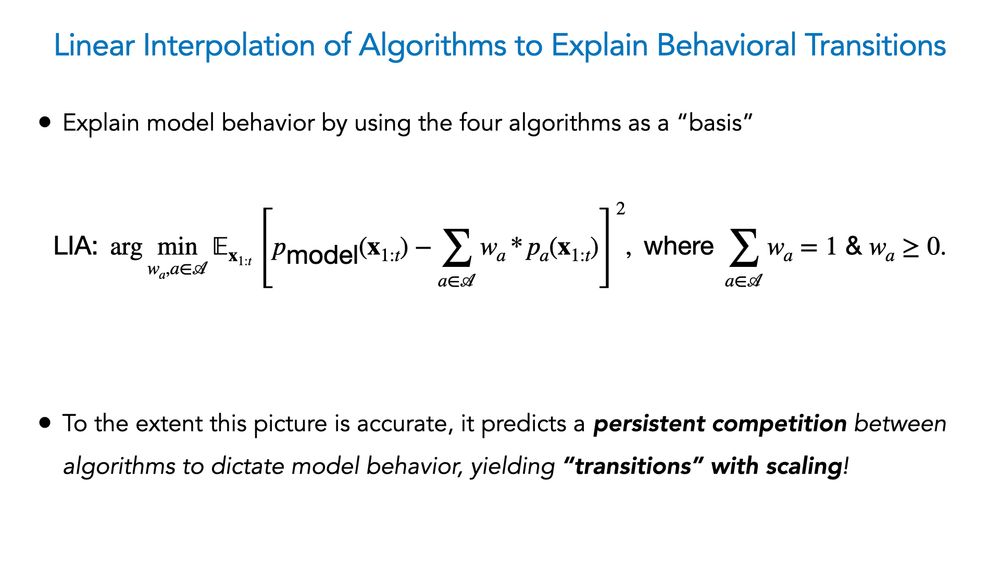

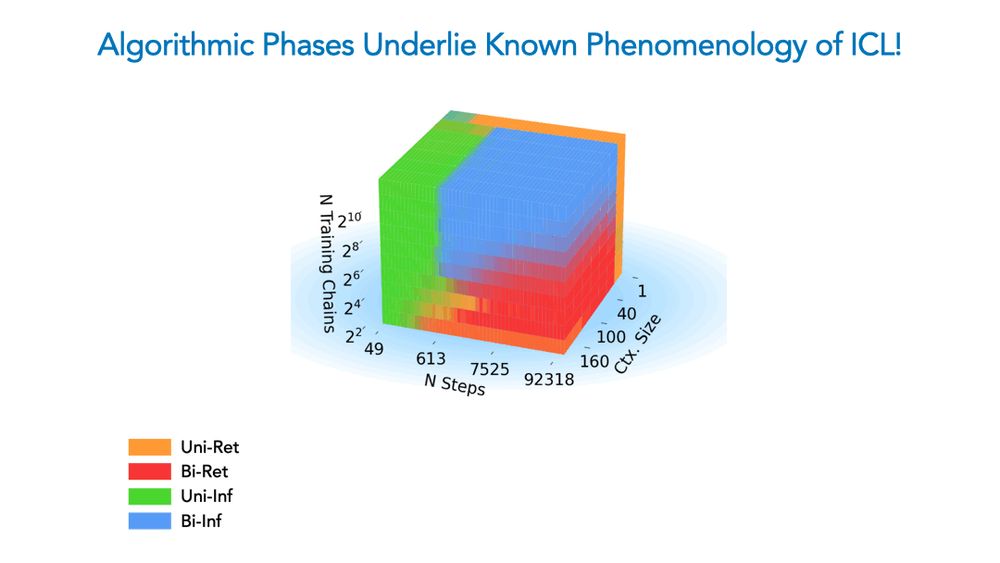

We show that a competition dynamic between several algorithms splits a toy model's ICL abilities into four broad phases across train/test settings! This means ICL is akin to a mixture of different algorithms rather than a monolithic ability.

What can ideas and approaches from science tell us about how AI works?

What might superhuman AI reveal about human cognition?

Join us for an internship at Harvard to explore together!

1/

Paper link again: arxiv.org/abs/2410.11767

And note again that paper co-lead Abhinav Menon is awesome and looking for PhD positions! Recruit him!

arXiv link: arxiv.org/abs/2410.11767

We analyze the (in)abilities of SAEs by relating them to the field of disentangled representation learning, where the limitations of AE-based interpretability protocols are well established!🤯

Previous: arxiv.org/abs/2310.09336

Follow-up: arxiv.org/abs/2410.08309

Link: arxiv.org/abs/2406.19370

Turns out capabilities are *latent* at this point, but can be elicited via mere linear interventions!