(New around here)

Does bsky not do GIFs?

arXiv link: arxiv.org/abs/2412.01003

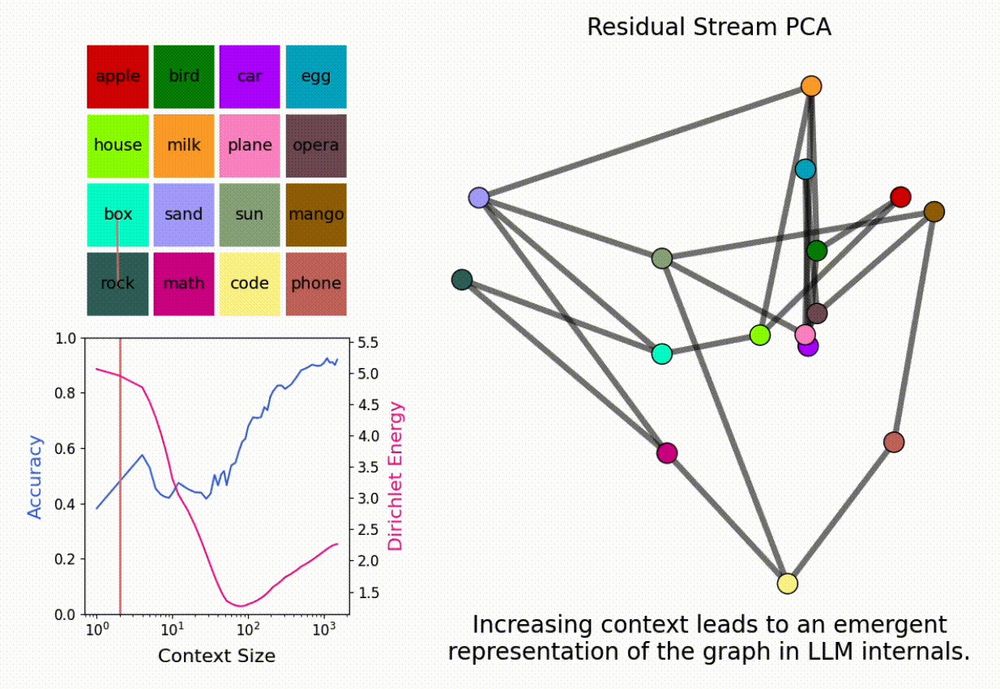

Interested in inference-time scaling? In-context Learning? Mech Interp?

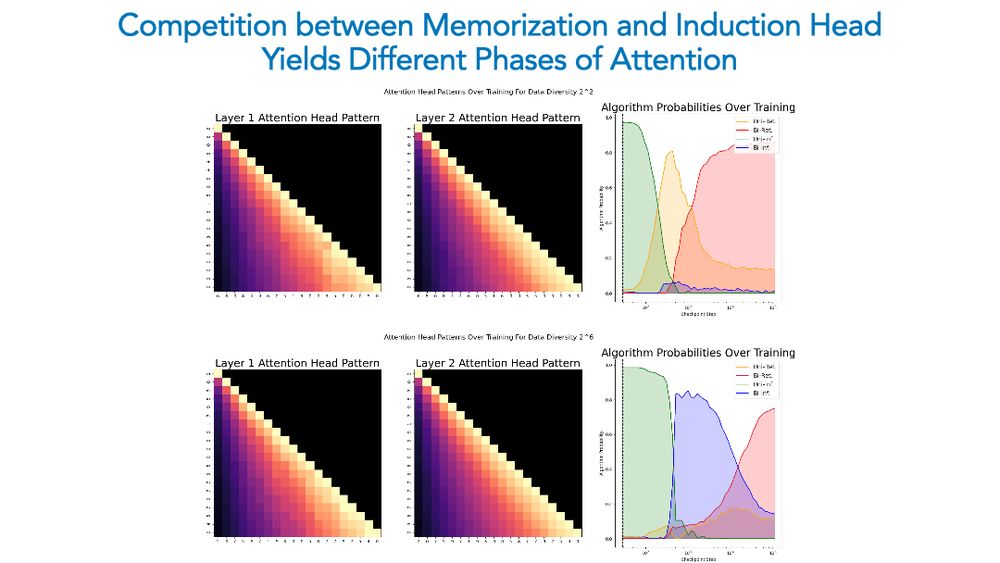

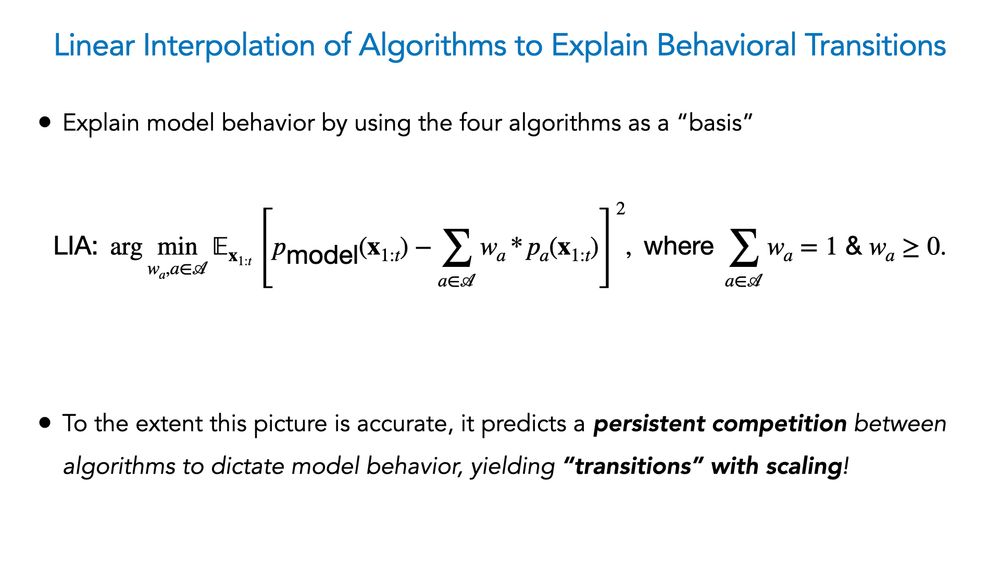

LMs can solve novel in-context tasks, with sufficient examples (longer contexts). Why? Bc they dynamically form *in-context representations*!

1/N

Building on our work relating emergent abilities to task compositionality, we analyze the *learning dynamics* of compositional abilities & find that there exist latent interventions that can elicit them well before input prompting works! 🤯

Apply from "Physics of AI Group Research Intern": careers.ntt-research.com