lampinen.github.io

Our goal is to develop theory for modern machine learning systems that can help us understand complex network behaviors, including those critical for AI safety and alignment.

Hope this is helpful for anyone who wants a super broad, beginner-friendly intro to the topic!

Thanks @mcxfrank.bsky.social and @asifamajid.bsky.social for this amazing initiative!

How long before this type of approach is expected for models-of-behavior papers? My guess: not long. (If you are a trainee, nudge!)

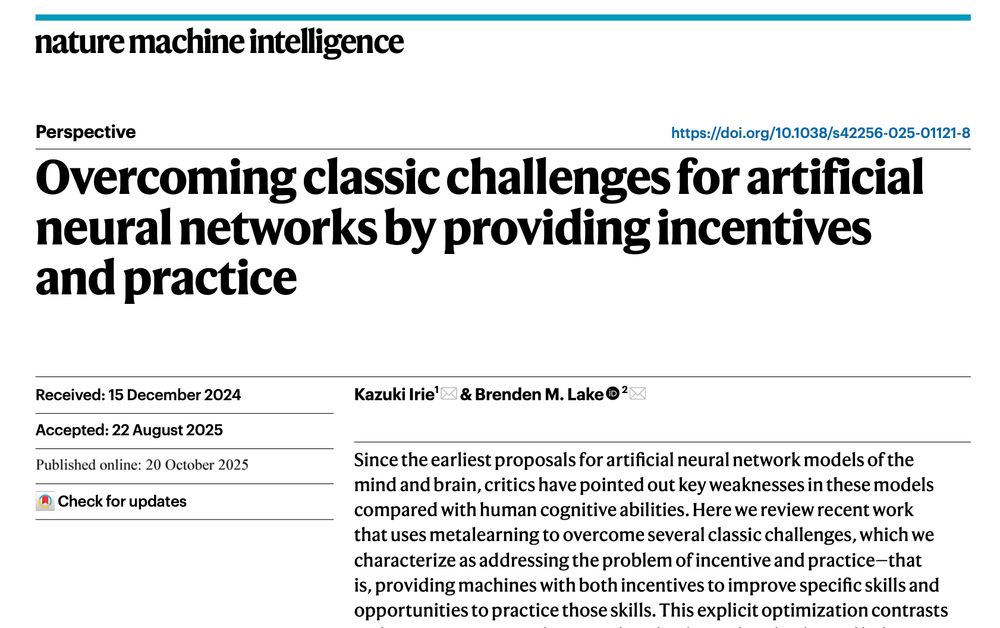

www.nature.com/articles/s41...

In our new work, we explore this via representational straightening. We found LLMs are like a Swiss Army knife: they select different computational mechanisms reflected in different representational structures. 1/

How can we behave effectively in the future when, right now, we don't know what we'll need?

Out today in @nathumbehav.nature.com, @marcelomattar.bsky.social and I find that people solve this by using episodic memory.

🧵 1/4

Read the paper here: rdcu.be/eZ26u

I want to share an astonishing result. LLMs can "translate" 'Jabberwocky' texts like 'He dwushed a ghanc zawk' and even 'In the BLANK BLANK, BLANK BLANK has BLANK over any BLANK BLANK’s BLANK'. This has profound consequences for thinking about.. 1/2

🧠📈 🧪

my lab will develop scalable models/theories of human behavior, focused on memory and perception

currently recruiting PhD students in psychology, neuroscience, & computer science!

reach out if you're interested 😊

w/ @fepdelia.bsky.social, @hopekean.bsky.social, @lampinen.bsky.social, and @evfedorenko.bsky.social

Link: www.pnas.org/doi/10.1073/... (1/6)

deepmind.google/sima
