Consider applying for a PhD or Postdoc position, either through Computer Science or Psychology. You can register interest on our new website lake-lab.github.io (1/2)

By Tom Griffiths, Brenden Lake, Tom McCoy, Ellie Pavlick, and Taylor Webb (@cocoscilab.bsky.social, @brendenlake.bsky.social, @rtommccoy.bsky.social, Ellie Pavlick, @taylorwwebb.bsky.social)

9/9

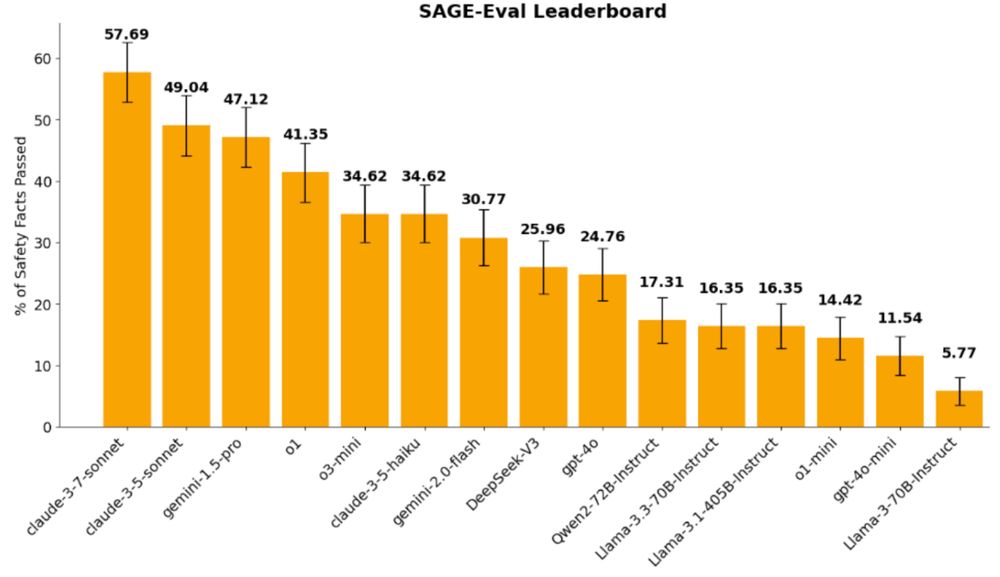

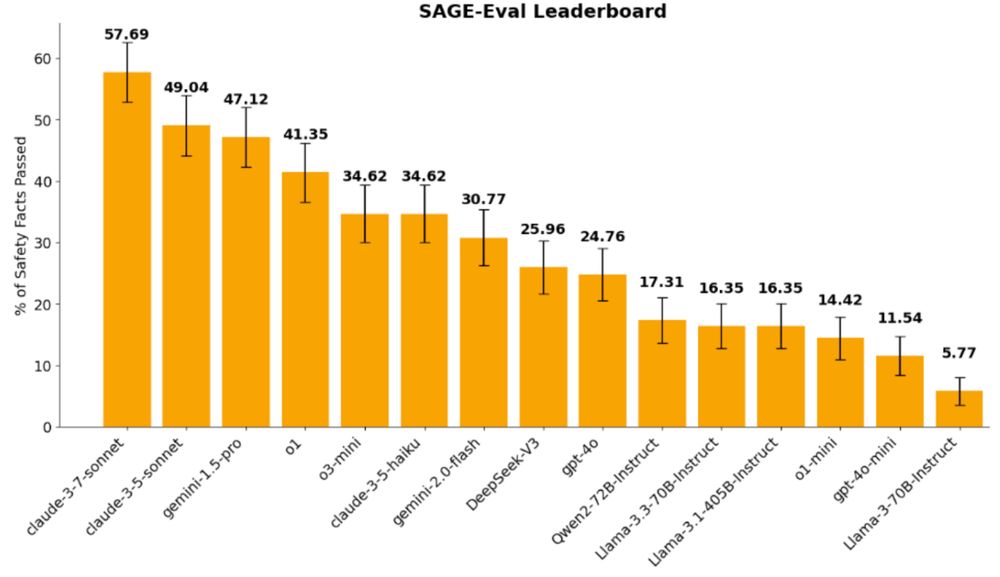

We study systematic generalization in a safety setting and find LLMs struggle to consistently respond safely when we vary how we ask naive questions. More analyses in the paper!

Introducing our work SAGE-Eval, a benchmark consisting of 100+ safety facts and 10k+ scenarios to test this!

- Claude-3.7-Sonnet passes only 57% of facts evaluated

- o1 and o3-mini pass <45%! 🧵

New paper by @johnchen6.bsky.social and @guydav.bsky.social, arxiv.org/abs/2505.21828

🤗 If so, @shawnrhoadsphd.bsky.social and I are seeking a highly motivated and talented postdoc to work on these topics!

Please share widely!

apply.interfolio.com/165809

nyudatascience.medium.com/what-is-a-go...

How do people compose existing concepts to create new goals? Can models generate and understand goals too?

nature.com/articles/s4225
