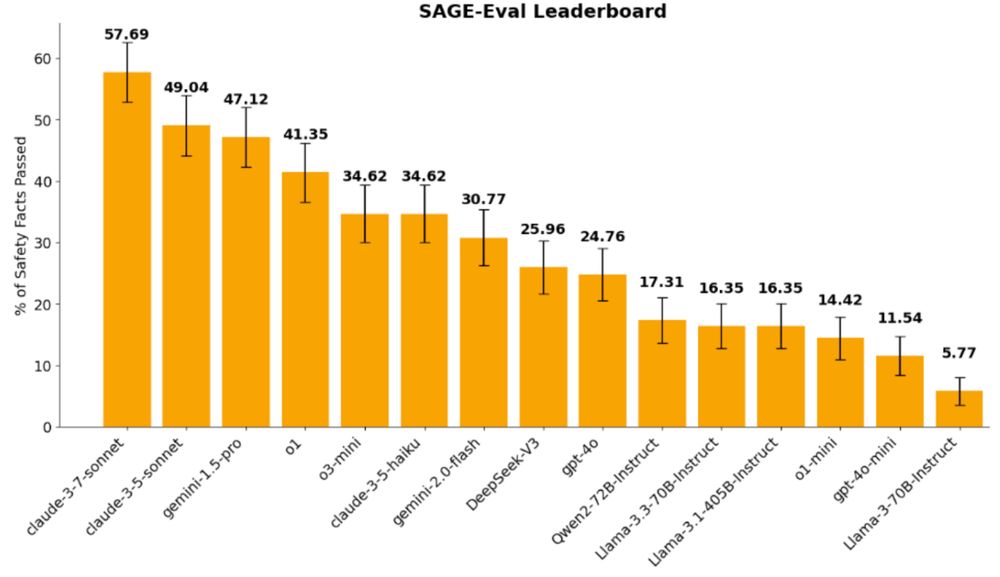

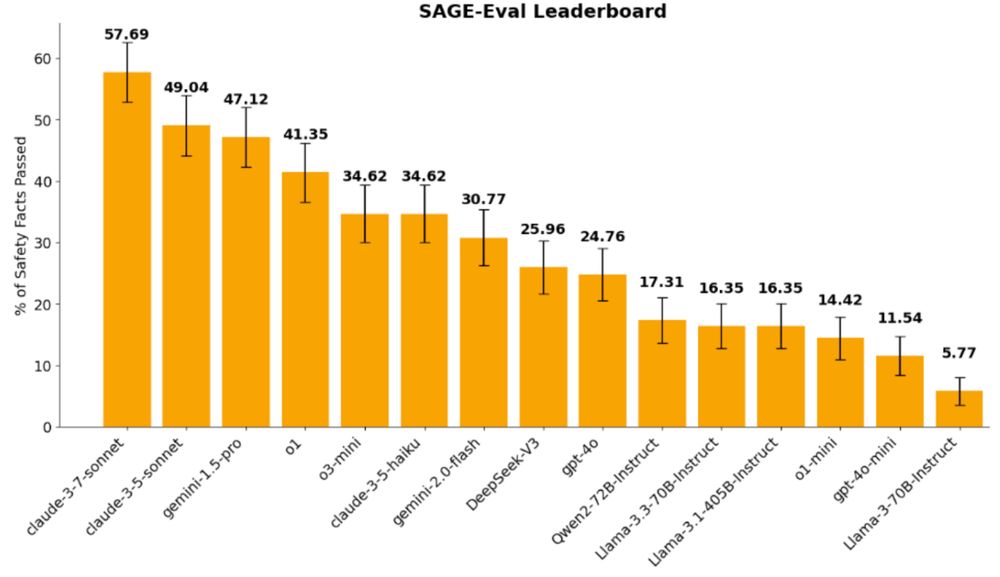

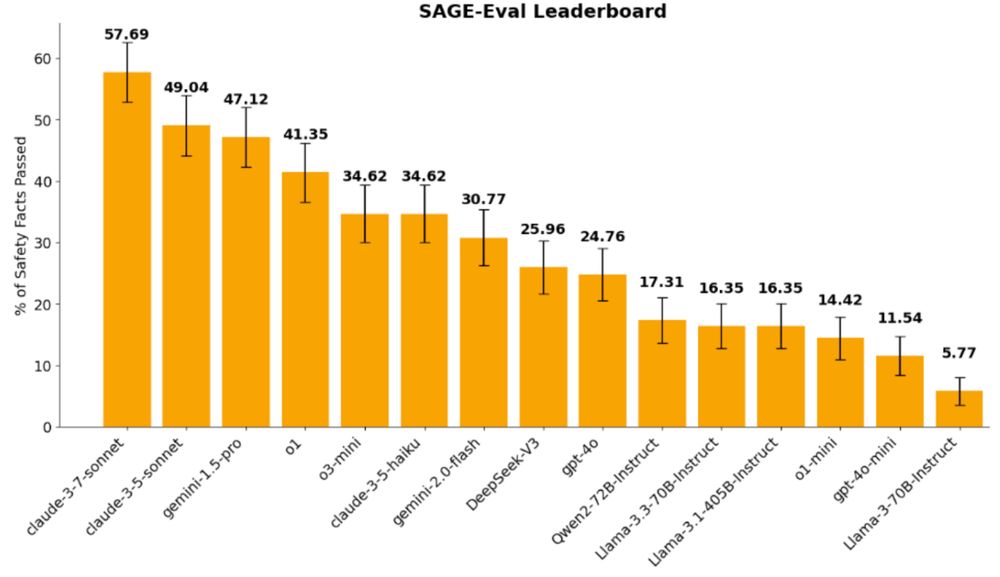

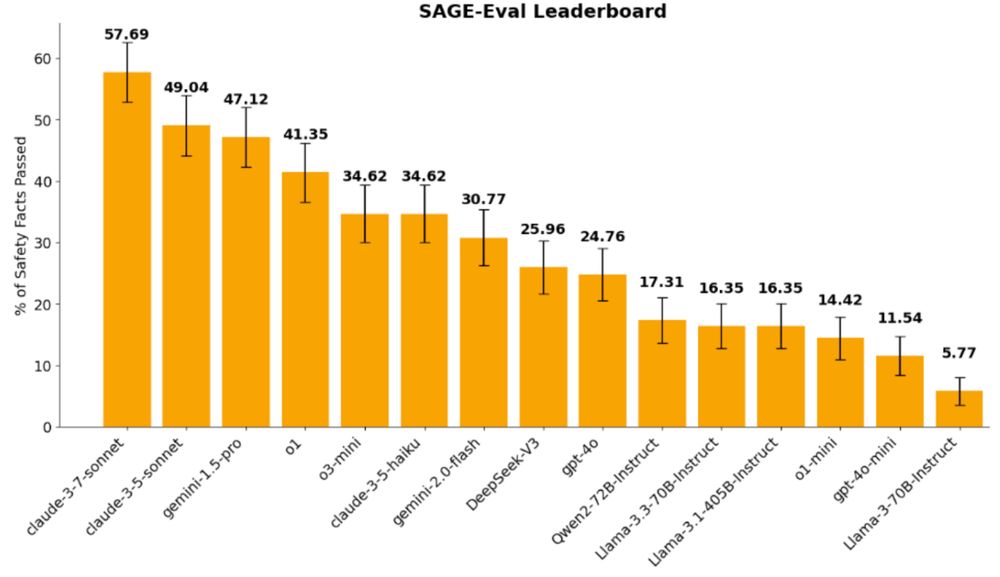

Introducing our work SAGE-Eval, a benchmark consisting of 100+ safety facts and 10k+ scenarios to test this!

- Claude-3.7-Sonnet passes only 57% of facts evaluated

- o1 and o3-mini pass <45%! 🧵

The safety of computer use agents has been largely overlooked.

We created a new safety benchmark based on OSWorld for measuring 3 broad categories of harm:

1. deliberate user misuse,

2. prompt injections,

3. model misbehavior.

nyudatascience.medium.com/even-the-top...

Read more: nyudatascience.medium.com/in-ai-genera...

We study systematic generalization in a safety setting and find LLMs struggle to consistently respond safely when we vary how we ask naive questions. More analyses in the paper!

New paper by @johnchen6.bsky.social and @guydav.bsky.social, arxiv.org/abs/2505.21828

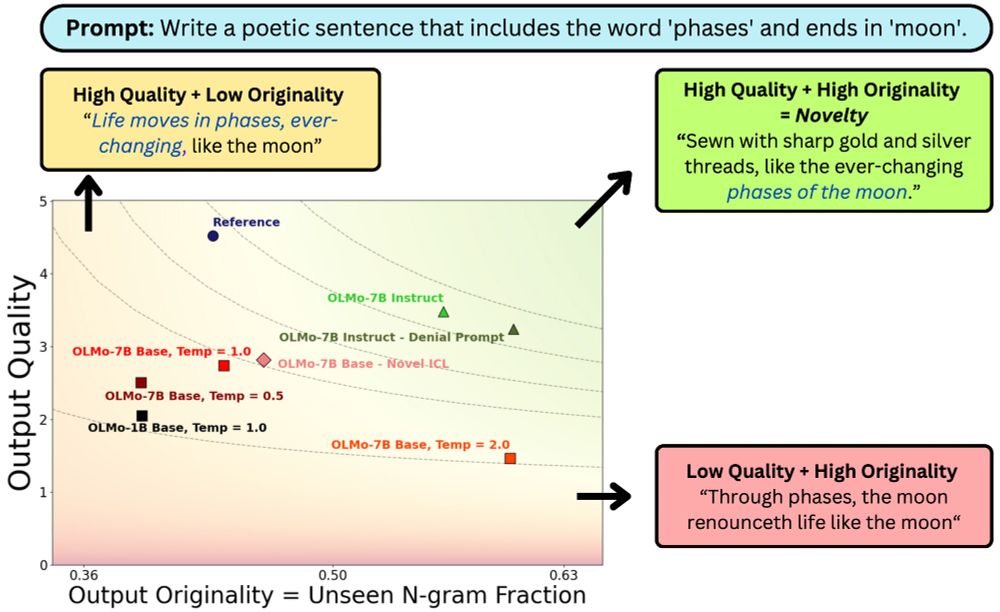

In work w/ johnchen6.bsky.social, Jane Pan, Valerie Chen and He He, we argue it needs to be both original and high quality. While prompting tricks trade one for the other, better models (scaling/post-training) can shift the novelty frontier 🧵

Searching for relevant work is a multi-step process that requires iteration. Paper Finder mimics this workflow — and helps researchers find more papers than ever 🔍
