Also hanging out @ai2.bsky.social

Website - https://vishakhpk.github.io/

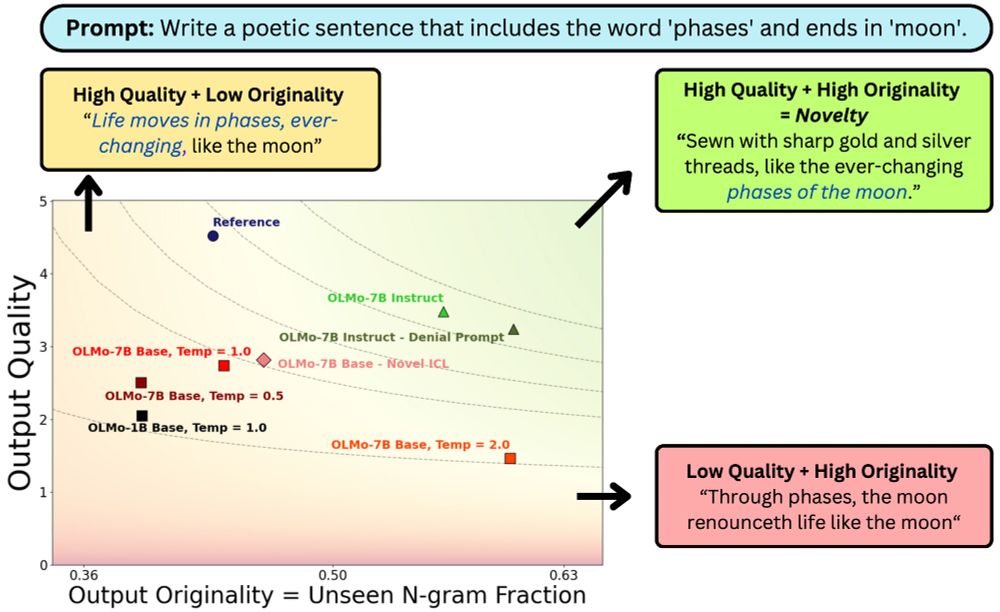

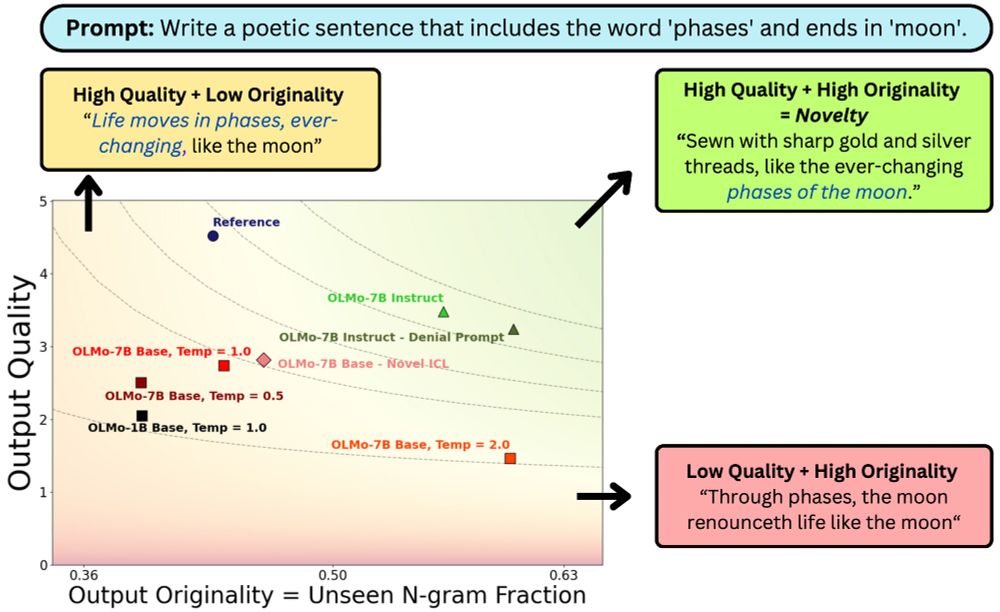

In work w/ johnchen6.bsky.social, Jane Pan, Valerie Chen and He He, we argue it needs to be both original and high quality. While prompting tricks trade one for the other, better models (scaling/post-training) can shift the novelty frontier 🧵

At Adobe, he built diversity-aware summarizers; at AI2, intent-based tools for literature review tables.

nyudatascience.medium.com/supercharged...

Read more: nyudatascience.medium.com/in-ai-genera...

I'm late for #ICLR2025 #NAACL2025, but in time for #AISTATS2025 #ICML2025! 1/3

kamathematics.wordpress.com/2025/05/01/t...

❌LLM-as-a-judge with greedy decoding

😎Using the distribution of the judge’s labels

We analyze design decisions for leveraging judgment distributions from LLM-as-a-judge: 🧵

(w/ Michael J.Q. Zhang, @eunsol.bsky.social)

In our new paper, we propose WebOrganizer which *constructs domains* based on the topic and format of CommonCrawl web pages 🌐

Key takeaway: domains help us curate better pre-training data! 🧵/N

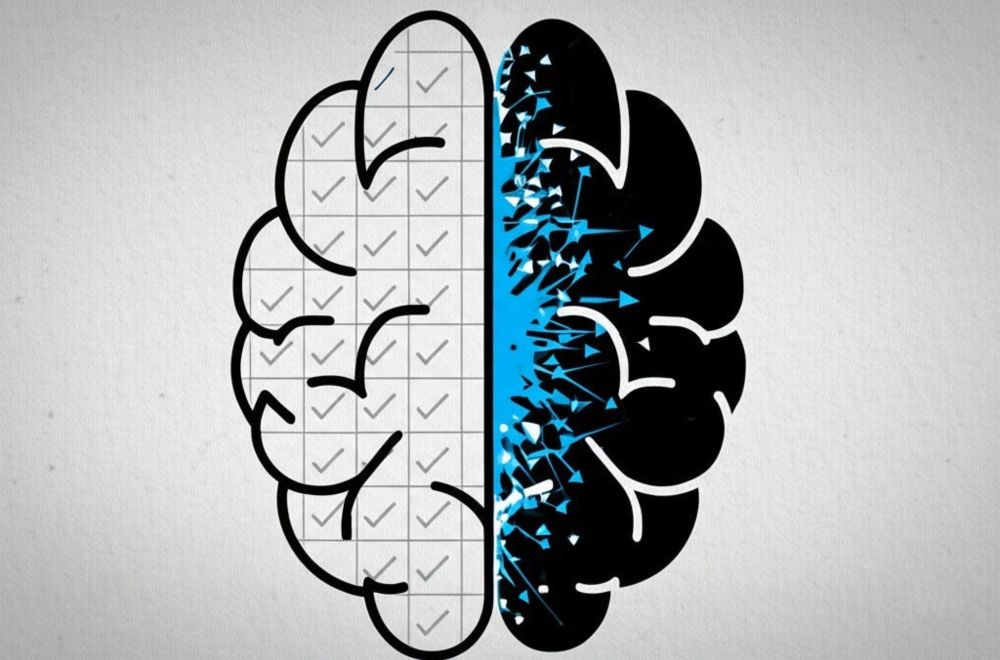

We want models that match our values...but could this hurt their diversity of thought?

Preprint: arxiv.org/abs/2411.04427

See the fresh arxiv.org/abs/2501.19393 by Niklas Muennighoff et al.

AI models excel at single-step reasoning but fail in systematic exploration as tasks grow in complexity.

nyudatascience.medium.com/even-simple-...

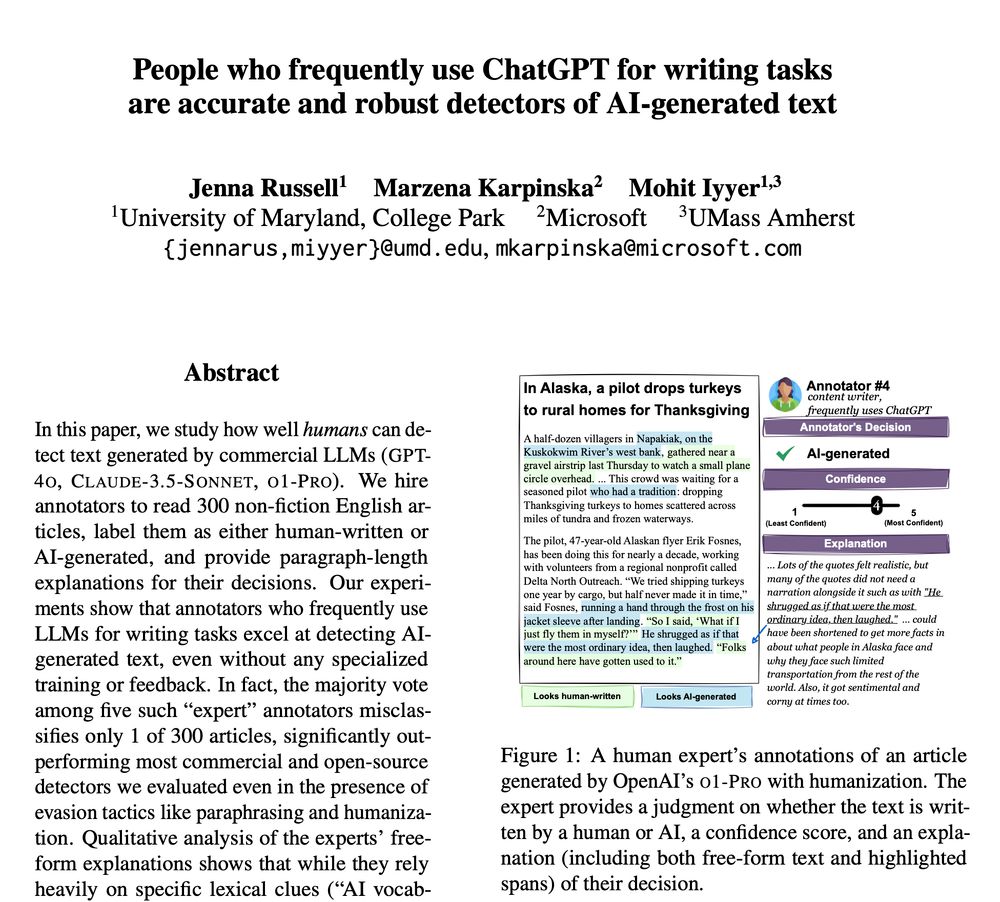

Turns out that while the general population is unreliable, those who frequently use ChatGPT for writing tasks can spot even "humanized" AI-generated text with near-perfect accuracy 🎯

Here's a shameless plug for our work comparing o1 to previous LLMs (extending "Embers of Autoregression"): arxiv.org/abs/2410.01792

- o1 shows big improvements over GPT-4

- But qualitatively it is still sensitive to probability

1/4
