Also hanging out @ai2.bsky.social

Website - https://vishakhpk.github.io/

And 🛠️ code to measure novelty and output logs including 📚 2,000+ generations with 📊 quality + originality scores coming soon!

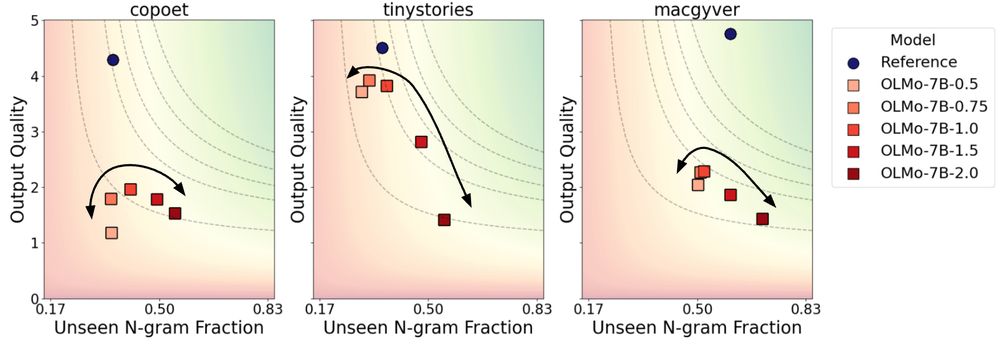

📝 Story completion (TinyStories)

🎨 Poetry writing (Help Me Write a Poem)

🛠️ Creative tool use (MacGyver)

Novelty = harmonic mean of output quality (LLM-as-judge) and originality (unseen n-gram fraction).
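To make the metric concrete, here is a minimal sketch of how the two components might combine. Several details are assumptions and are not taken from the thread. The "unseen" check runs against a hypothetical reference corpus (for example, a model's training data). Text is tokenized by lowercasing and splitting on whitespace. The n-gram size `n` is a free, hypothetical parameter. The LLM-as-judge quality score is assumed to be rescaled to [0, 1] before the harmonic mean is taken. All function names are illustrative.

```python
from collections.abc import Iterable


def ngrams(tokens: list[str], n: int) -> list[tuple[str, ...]]:
    """Return all contiguous n-grams of a token list."""
    return [tuple(tokens[i : i + n]) for i in range(len(tokens) - n + 1)]


def build_ngram_index(corpus: Iterable[str], n: int) -> set[tuple[str, ...]]:
    """Collect every n-gram in a (hypothetical) reference corpus.

    Assumption: naive lowercase + whitespace tokenization.
    """
    index: set[tuple[str, ...]] = set()
    for doc in corpus:
        index.update(ngrams(doc.lower().split(), n))
    return index


def originality(text: str, index: set[tuple[str, ...]], n: int) -> float:
    """Fraction of the output's n-grams that never appear in the reference index."""
    grams = ngrams(text.lower().split(), n)
    if not grams:  # output shorter than n tokens: nothing to score
        return 0.0
    unseen = sum(1 for g in grams if g not in index)
    return unseen / len(grams)


def novelty(quality: float, orig: float) -> float:
    """Harmonic mean of quality and originality, both assumed to lie in [0, 1]."""
    if quality + orig == 0:
        return 0.0
    return 2 * quality * orig / (quality + orig)


if __name__ == "__main__":
    corpus = ["the cat sat on the mat", "a dog ran in the park"]
    index = build_ngram_index(corpus, n=3)
    # 2 of the 4 trigrams in this output do not occur in the corpus
    o = originality("the cat sat in the park", index, n=3)
    print(o)                # 0.5
    print(novelty(0.8, o))  # harmonic mean of 0.8 and 0.5
```

The harmonic mean is low whenever either term is low, so an output scores well only if it is both good and original. Fluent text copied from the reference corpus, or original but low-quality text, is penalized.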

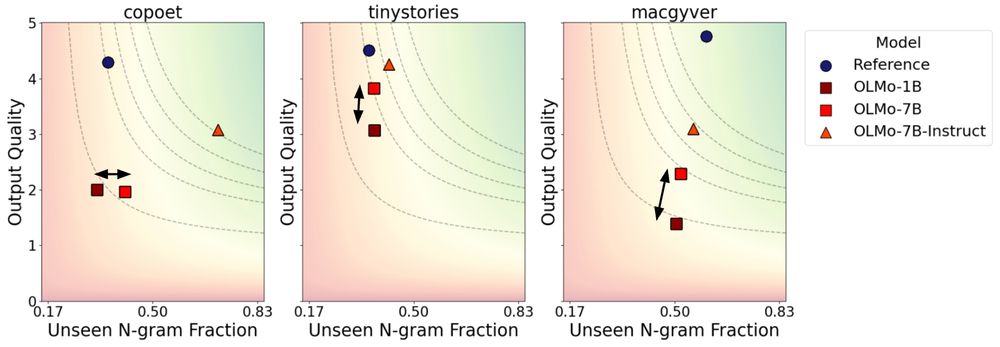

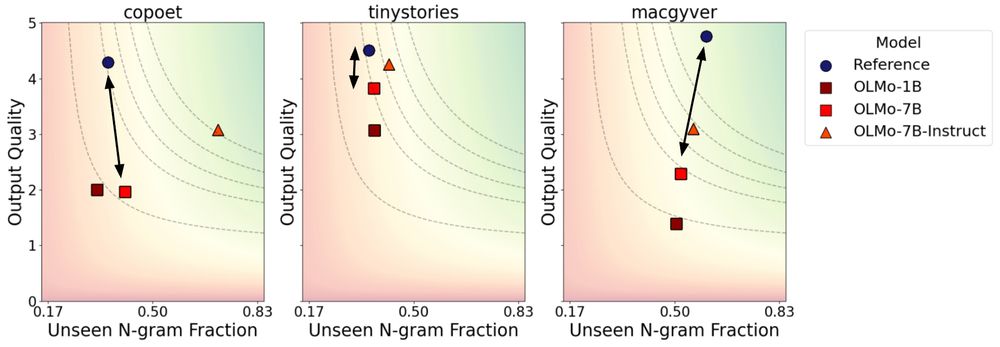
