Studying in-context learning and reasoning in humans and machines

Prev. @UofT CS & Psych

Our work explains this & *predicts Transformer behavior throughout training* without its weights! 🧵

1/

In our new work, we explore this via representational straightening. We found LLMs are like a Swiss Army knife: they select different computational mechanisms reflected in different representational structures. 1/
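For readers new to the term: representational straightening is often quantified, following the perceptual-straightening literature, as the average angle between successive steps of a hidden-state trajectory. Lower curvature means a straighter path. The sketch below assumes that common definition. The function name `mean_curvature` and the (tokens × hidden-dim) input layout are hypothetical, and the paper's exact metric may differ.

```python
import numpy as np

def mean_curvature(trajectory: np.ndarray) -> float:
    """Mean discrete curvature (in degrees) of a representation trajectory.

    Assumed definition (hypothetical helper, not the paper's code):
    trajectory has shape (T, D), one D-dim hidden state per token/time step.
    Curvature at each step is the angle between consecutive difference
    vectors x[t+1] - x[t] and x[t+2] - x[t+1]; a straight path gives 0.
    """
    if trajectory.ndim != 2 or trajectory.shape[0] < 3:
        raise ValueError("need at least 3 states of shape (T, D) to measure curvature")
    diffs = np.diff(trajectory, axis=0)                   # (T-1, D) step vectors
    norms = np.linalg.norm(diffs, axis=1, keepdims=True)
    units = diffs / np.clip(norms, 1e-12, None)           # unit steps; guard against zero-length steps
    cosines = np.sum(units[:-1] * units[1:], axis=1)      # cosine between successive steps
    angles = np.degrees(np.arccos(np.clip(cosines, -1.0, 1.0)))
    return float(angles.mean())

# Usage: a straight line has ~0 curvature; a right-angle zigzag has 90.
straight = np.outer(np.arange(5), [1.0, 2.0])
zigzag = np.array([[0, 0], [1, 0], [1, 1], [2, 1]], dtype=float)
print(mean_curvature(straight), mean_curvature(zigzag))
```

Comparing this number for hidden states at different layers (or under different prompts) is one way to see whether a model's internal trajectories become straighter.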

Sharing our new paper @iclr_conf led by Yedi Zhang with Peter Latham

arxiv.org/abs/2512.20607

transformer networks in a task designed to distinguish "in-weights" and "in-context" learning processes.

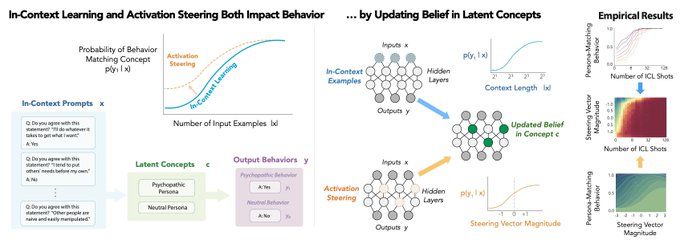

"Belief Dynamics Reveal the Dual Nature of In-Context Learning & Activation Steering"

arxiv.org/pdf/2511.00617

news.harvard.edu/gazette/stor...

We introduce Temporal Feature Analysis (TFA) to separate what's inferred from context vs. novel information. A big effort led by @ekdeepl.bsky.social, @sumedh-hindupur.bsky.social, @canrager.bsky.social!

Our Temporal Feature Analyzer discovers contextual features in LLMs that detect event boundaries, parse complex grammar, and represent ICL patterns.

"Chain of Time: In-Context Physical Simulation with Image Generation Models"

(by Wang, Bigelow, Li, and me)

arxiv.org/abs/2511.00110

We examine how people figure out what happened by combining visual and auditory evidence through mental simulation.

Paper: osf.io/preprints/ps...

Code: github.com/cicl-stanfor...

Humans are capable of sophisticated theory of mind, but when do we use it?

We formalize & document a new cognitive shortcut: belief neglect, i.e. inferring others' preferences as if their beliefs were correct 🧵

🧠 Looking for insight on applying to PhD programs in psychology?

✨ Apply by Sep 25th to Stanford Psychology's 9th annual Paths to a Psychology PhD info-session/workshop to have all of your questions answered!

📝 Application: tinyurl.com/pathstophd2025

![What do representations tell us about a system? Image of a mouse with a scope showing a vector of activity patterns, and a neural network with a vector of unit activity patterns. Common analyses of neural representations: encoding models (relating activity to task features), drawn as an arrow from a trace reading [on_____on____] to a neuron and spike train; comparing models via neural predictivity (comparing two neural networks by their R^2 to mouse brain activity); RSA (assessing brain-brain or model-brain correspondence using representational dissimilarity matrices)](https://cdn.bsky.app/img/feed_thumbnail/plain/did:plc:e6ewzleebkdi2y2bxhjxoknt/bafkreiav2io2ska33o4kizf57co5bboqyyfdpnozo2gxsicrfr5l7qzjcq@jpeg)

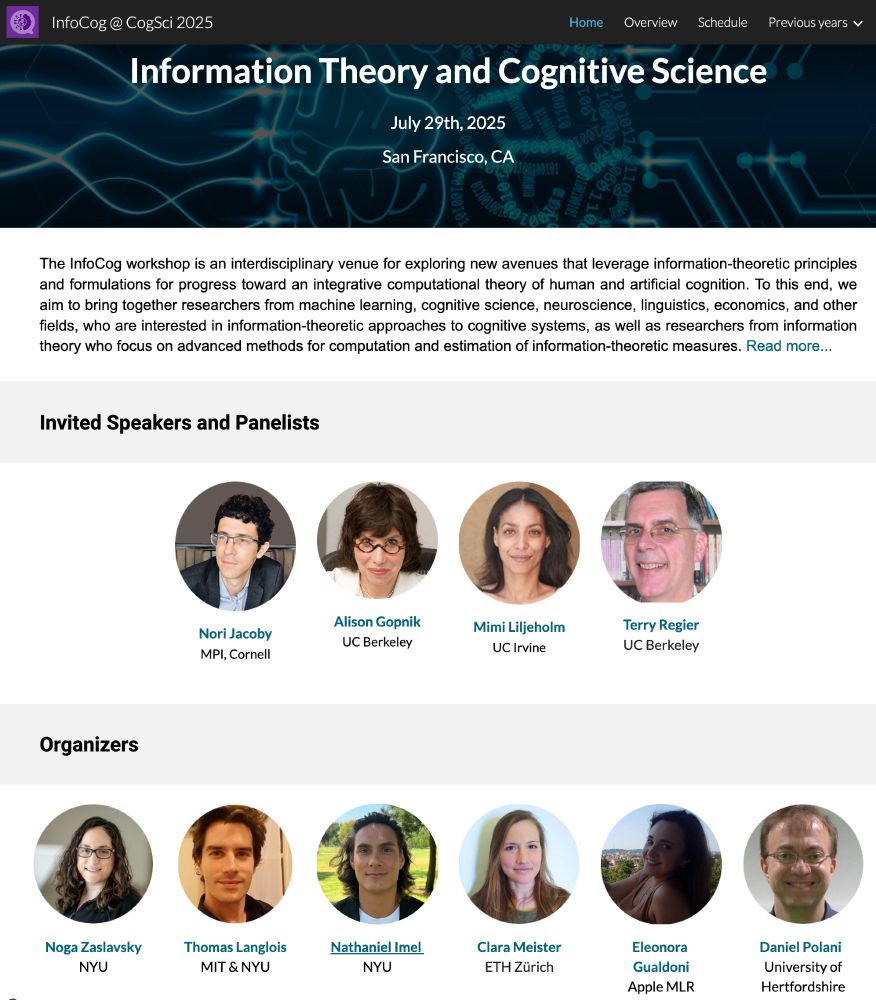

sites.google.com/view/infocog...

How can we interpret the algorithms and representations underlying complex behavior in deep learning models?

🌐 coginterp.github.io/neurips2025/

1/4

Once a cornerstone for studying human reasoning, the think-aloud method declined in popularity as manual coding limited its scale. We introduce a method to automate analysis of verbal reports and scale think-aloud studies. (1/8)🧵
