More info: https://www.nogsky.com/

CDS-affiliated @nogazs.bsky.social and PhD student Nathaniel Imel show that, via simulated cultural transmission, LLMs reorganize color categories toward efficient compression.

🔗 arxiv.org/abs/2509.08093

In our new paper, we ask: How do people turn continuous spaces into structured, word-like systems for communication? (1/8)

www.thetransmitter.org/the-big-pict...

#neuroskyence

![Comic. [long message with unreadable text that includes lots of punctuation marks like semi colons and em dashes as well as citations.] Highlighted line at the bottom zoomed in reads: Not ChatGPT output—I’m just like this. [caption] I’ve had to start adding this disclaimer to my messages.](https://cdn.bsky.app/img/feed_thumbnail/plain/did:plc:cz73r7iyiqn26upot4jtjdhk/bafkreifbqjlfnleuu4jrpvc7q6e43ckn2lqvuv7vdgtuf7e67dw5acy4di@jpeg)

1/n

sites.google.com/view/infocog...

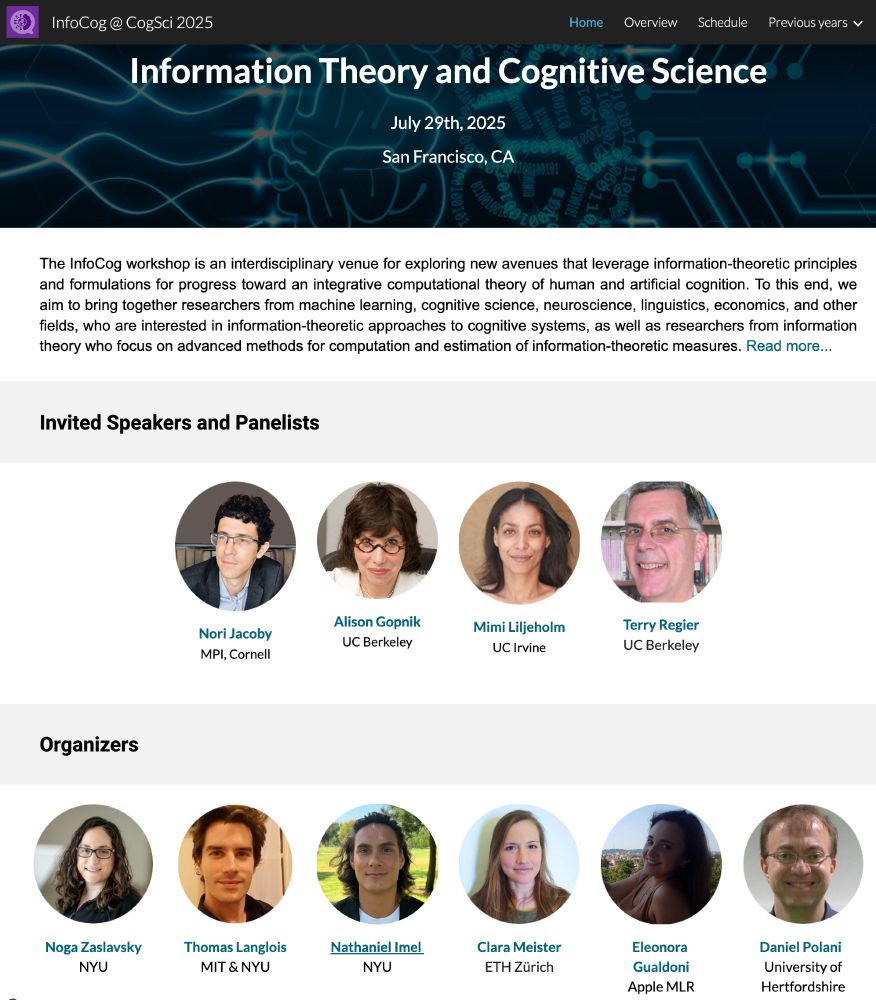

Information Theory and Cognitive Science

🗓️ Wednesday, July 30

📍 Pacifica C - 8:30-10:00

🗣️ Noga Zaslavsky, Thomas A Langlois, Nathaniel Imel, Clara Meister, Eleonora Gualdoni, and Daniel Polani

🧑💻 underline.io/events/489/s...

Apply here: apply.interfolio.com/170656

And come chat with me at #CogSci2025 if interested!

Iterated language learning is shaped by a drive for optimizing lossy compression (Talks 37: Language and Computation 3, 1 August @ 16:22; blurb below) (2/)

"Deep learning and the information bottleneck principle" with the late, great Tali Tishby

ieeexplore.ieee.org/document/713...

There are two open positions:

1. Summer research position (best for master's or graduate student); focus on computational social cognition.

2. Postdoc (currently interviewing!); focus on computational social cognition and AI safety.

sites.google.com/corp/site/sy...

data-for-good-team.org

direct.mit.edu/opmi/article...

@rplevy.bsky.social

And looking forward to speaking about this line of work tomorrow at @nyudatascience.bsky.social!

www.ft.com/content/d8f8...

tinyurl.com/yckndmjt
